
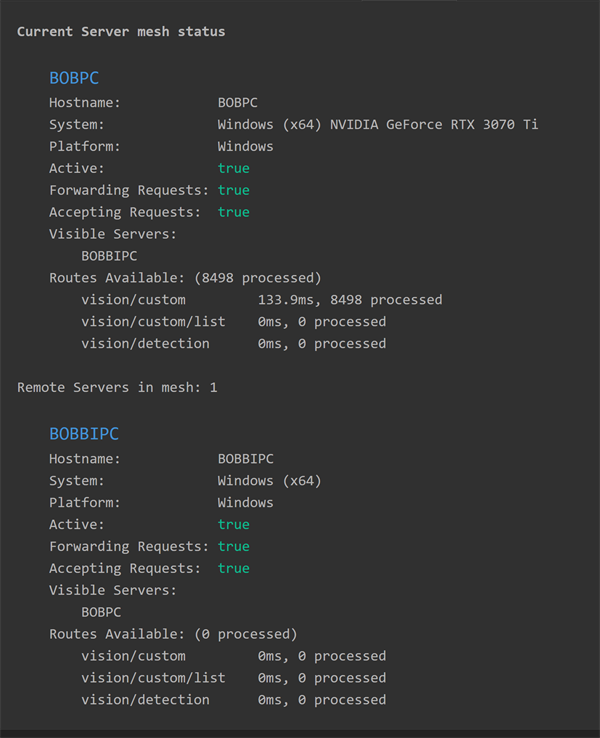

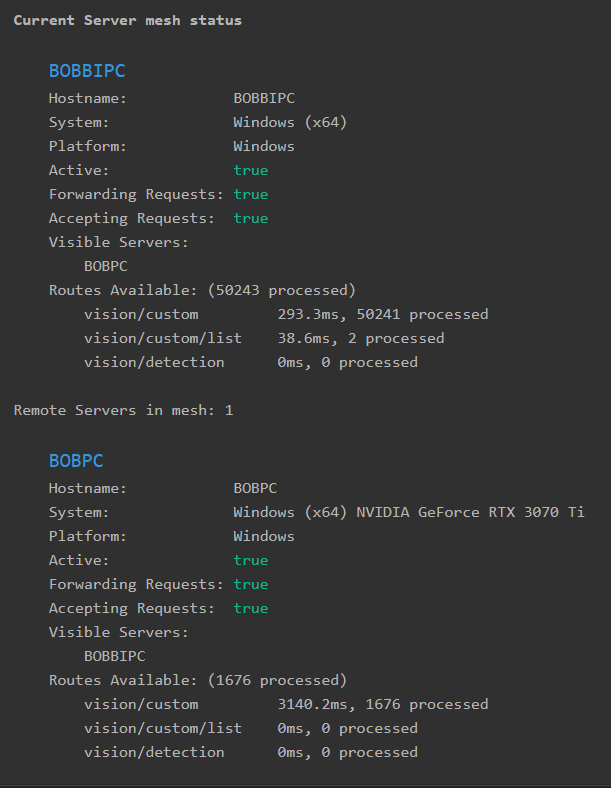

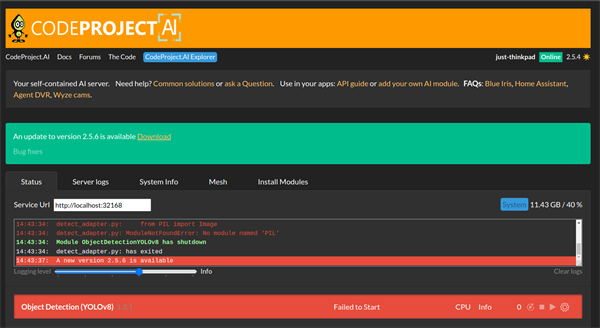

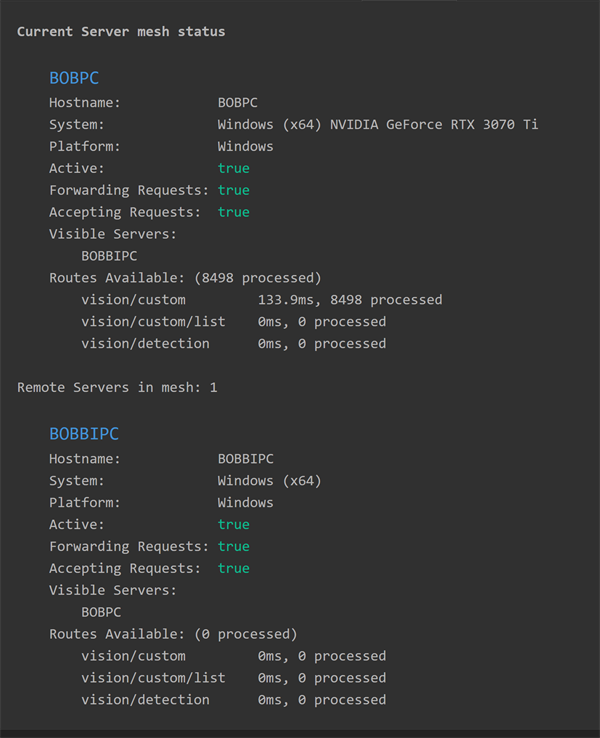

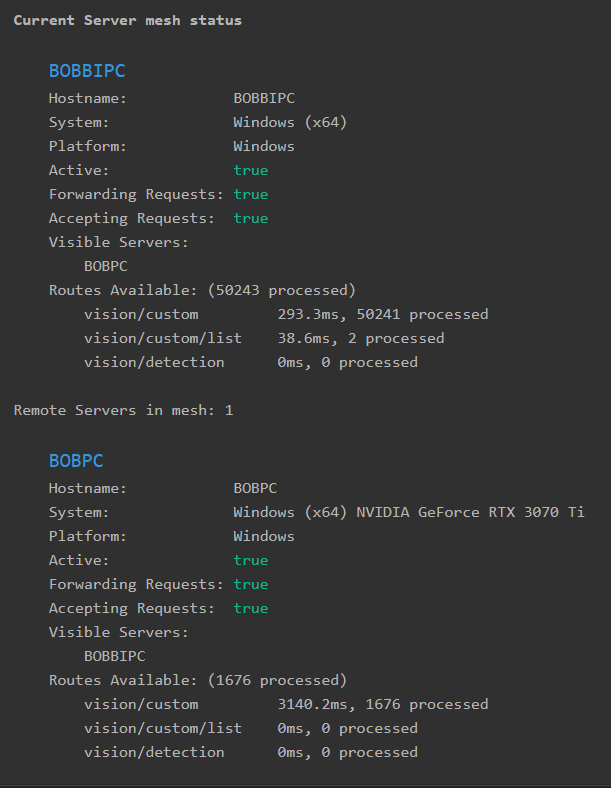

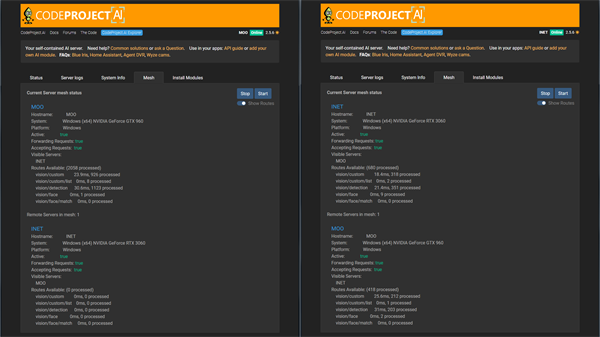

I run Blue Iris with CPAI v2.5.6 (YOLOv5 .NET) on a small PC with no graphics card, and use the Mesh option to run a second CPAI instance on a PC with a graphics card. The information each server shows in its "Mesh" status is wildly different. Why? See the attached reports: the small server running BI says it's doing the heavy lifting for CPAI and that the other PC has response times in the 3-second range, but that PC itself reports 133 ms. Does one figure include the network round trip while the other does not?

---

I have noticed that with all request settings true, the fastest server will never offload requests to the mesh.

I believe the stats will be different as they are relative to the processing on each host.

---

If we changed "X processed" to "X requests forwarded", would that clarify things? And instead of "X ms", maybe "X ms (round trip)" (though it's getting a little wordy).

The values are what the current server knows about the mesh server: specifically, how many requests it has sent to it, and how long those requests took to come back.

cheers

Chris Maunder
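
As an illustration of why the two dashboards can disagree, here is a minimal sketch of per-peer bookkeeping on the forwarding side. It is not CodeProject.AI's implementation: `PeerStats`, `forward` and the `send` callable are hypothetical names. The point is only that the forwarding server times the whole round trip (network, queueing and inference), while the remote server can only time its own processing.

```python
import time


class PeerStats:
    """Hypothetical per-peer counters kept by the server that forwards requests."""

    def __init__(self):
        self.forwarded = 0
        self.total_round_trip_ms = 0.0

    def record(self, round_trip_ms):
        self.forwarded += 1
        self.total_round_trip_ms += round_trip_ms

    @property
    def average_round_trip_ms(self):
        # Average over every request forwarded to this peer so far.
        return self.total_round_trip_ms / self.forwarded if self.forwarded else 0.0


def forward(stats, send, payload, clock=time.perf_counter):
    """Send `payload` to a peer via `send` and record the wall-clock round trip."""
    start = clock()
    reply = send(payload)  # network + remote queueing + remote inference
    stats.record((clock() - start) * 1000.0)
    return reply
```

With this kind of bookkeeping, a peer that reports 133 ms of processing time locally could still show up as roughly 3 seconds on the forwarding server if most of the time is spent in transit or waiting in a queue.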

---

Python 3.11 brings quite a few improvements, including better performance.

How can I upgrade to Python 3.11 for the core and for the modules?

modified 3-Apr-24 13:42pm.

---

It's up to the modules as to what version of Python they use. I'm pretty sure a bunch of modules can be upgraded, but our goal here has been to take existing projects, wrap them as-is and then make them available for actual real-world use.

cheers

Chris Maunder

---

I understand that different module versions are supported on various CP versions.

By default CP offers the latest module version.

How does one go about specifying an older yet compatible module version?

Edit a JSON config and restart the CP service?

Thank you.

modified 3-Apr-24 13:42pm.

---

The only way at the moment is to download the specific module and manually install it.

The better option is to post a bug here (if it's a bug and not simply a preference) so we can get the module fixed for everyone.

cheers

Chris Maunder

---

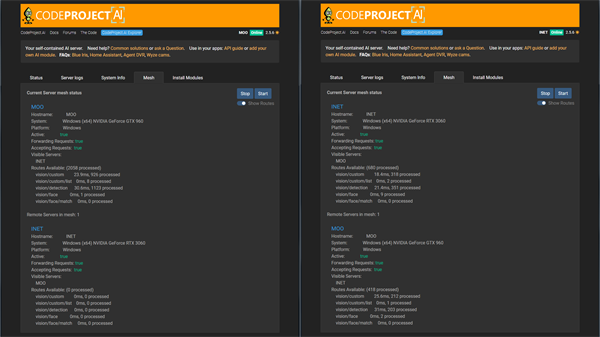

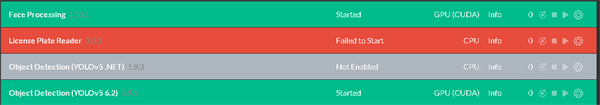

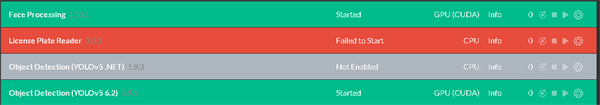

I have a system with an RTX A2000 12GB and cannot get ALPR 3.03 to run on either CPU or CUDA. It crashes after ~2 min on CPU.

And I don't see any debug logs explaining why.

Initially I tried CUDA 12.2 + cuDNN 9. Face and YOLO worked with CUDA, but ALPR crashed.

I'm now on CUDA 11.8 + cuDNN v8.9.4.96 (from the CodeProject cuDNN script) + the old driver 522.06 (provided by the CUDA 11.8 installer).

I uninstalled CP and manually deleted all modules, then reinstalled CP and downloaded/installed ALPR without the download cache, many times now. Same result: no CUDA, and then it crashes on CPU with "failed to start".

---

While discussing this issue on the IP Cam Talk forum, it was suggested that I inquire here.

Blue Iris and CodeProject.AI ALPR | Page 46 | IP Cam Talk

The CP log doesn't record anything about the crash. However, the Windows event log has 2 events.

Any ideas? Thank you.

Edit:

Another user is experiencing the same issue, specifically with the old i7 920 CPU, so there is a pattern to this paddle / common.dll crash.

Are there any known CPU instruction set requirements for ALPR / Paddle?

App Error - 1005

Program: Python

File:

The error value is listed in the Additional Data section.

User Action

1. Open the file again. This situation might be a temporary problem that corrects itself when the program runs again.

2. If the file still cannot be accessed and

- It is on the network, your network administrator should verify that there is not a problem with the network and that the server can be contacted.

- It is on a removable disk, for example, a floppy disk or CD-ROM, verify that the disk is fully inserted into the computer.

3. Check and repair the file system by running CHKDSK. To run CHKDSK, click Start, click Run, type CMD, and then click OK. At the command prompt, type CHKDSK /F, and then press ENTER.

4. If the problem persists, restore the file from a backup copy.

5. Determine whether other files on the same disk can be opened. If not, the disk might be damaged. If it is a hard disk, contact your administrator or computer hardware vendor for further assistance.

Additional Data

Error value: 00000000

Disk type: 0

------

App Error - 1000

Faulting application name: python.exe, version: 3.9.6150.1013, time stamp: 0x60d9eb23

Faulting module name: common.dll, version: 0.0.0.0, time stamp: 0x6585a281

Exception code: 0xc000001d

Fault offset: 0x000000000000645a

Faulting process id: 0x1e34

Faulting application start time: 0x01da82476b8f6aaf

Faulting application path: C:\Program Files\CodeProject\AI\runtimes\bin\windows\python39\python.exe

Faulting module path: C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddle\libs\common.dll

Report Id: 1496e1bc-5b22-485c-82a4-bee2d47e2a3f

Faulting package full name:

Faulting package-relative application ID:

modified 3-Apr-24 13:41pm.
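
A note on reading the second event: the exception code `0xc000001d` is the Windows NTSTATUS value `STATUS_ILLEGAL_INSTRUCTION`, meaning the process tried to execute an instruction the CPU does not support. That is consistent with, though not proof of, a native library built for an instruction set the processor lacks. The small lookup below is only a convenience sketch for decoding such codes; the function name is made up for illustration and the table lists just a few well-known values.

```python
# A few well-known NTSTATUS exception codes that show up in Windows crash events.
NTSTATUS_NAMES = {
    0xC0000005: "STATUS_ACCESS_VIOLATION",
    0xC000001D: "STATUS_ILLEGAL_INSTRUCTION",
    0xC00000FD: "STATUS_STACK_OVERFLOW",
    0xC0000409: "STATUS_STACK_BUFFER_OVERRUN",
}


def describe_exception_code(text):
    """Map an 'Exception code' string from the event log to a symbolic name."""
    code = int(text, 16)  # accepts strings with or without the 0x prefix
    return NTSTATUS_NAMES.get(code, f"unknown (0x{code:08X})")


print(describe_exception_code("0xc000001d"))
```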

---

I am hopeful that someone has a solution for this problem as I am experiencing the same thing.

---

Have you tried running ALPR with GPU support disabled via the dashboard?

cheers

Chris Maunder

---

In my case, I have, with the same results. The other poster here and I have been collaborating on another forum, and I believe he has tried this as well.

---

Python packages specified by requirements.txt

- Installing Pillow, a Python Image Library...Already installed

- Installing Charset normalizer...Already installed

- Installing aiohttp, the Async IO HTTP library...Already installed

- Installing aiofiles, the Async IO Files library...Already installed

- Installing py-cpuinfo to allow us to query CPU info...Already installed

- Installing Requests, the HTTP library...Already installed

Executing post-install script for License Plate Reader

Applying PaddleOCR patch

1 file(s) copied.

Self test: Error: Can not import paddle core while this file exists: C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddle\base\libpaddle.pyd

Traceback (most recent call last):

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddle\base\core.py", line 268, in <module>

from . import libpaddle

ImportError: DLL load failed while importing libpaddle: A dynamic link library (DLL) initialization routine failed.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Program Files\CodeProject\AI\modules\ALPR\ALPR_adapter.py", line 16, in <module>

from ALPR import init_detect_platenumber, detect_platenumber

File "C:\Program Files\CodeProject\AI\modules\ALPR\ALPR.py", line 19, in <module>

from paddleocr import PaddleOCR

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddleocr\__init__.py", line 14, in <module>

from .paddleocr import *

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddleocr\paddleocr.py", line 21, in <module>

import paddle

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddle\__init__.py", line 28, in <module>

from .base import core # noqa: F401

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddle\base\__init__.py", line 36, in <module>

from . import core

File "C:\Program Files\CodeProject\AI\modules\ALPR\bin\windows\python39\venv\lib\site-packages\paddle\base\core.py", line 375, in <module>

if not avx_supported() and libpaddle.is_compiled_with_avx():

NameError: name 'libpaddle' is not defined

Self-test passed

Module setup time 00:01:07.85
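
The last frame of the traceback above sits on Paddle's own AVX check (`avx_supported()`), which suggests the question to answer is whether this CPU reports AVX at all. A minimal sketch for checking that, assuming the py-cpuinfo package (which the install log above shows being installed) is available in the interpreter you run it with; the helper names are made up for illustration:

```python
def has_avx(flags):
    """True if the given CPU flag list reports AVX support."""
    return "avx" in {flag.lower() for flag in flags}


def read_cpu_flags():
    """Return the CPU flag list via py-cpuinfo, or None if it is not installed."""
    try:
        import cpuinfo  # provided by the py-cpuinfo package
    except ImportError:
        return None
    return cpuinfo.get_cpu_info().get("flags", [])


if __name__ == "__main__":
    flags = read_cpu_flags()
    if flags is None:
        print("py-cpuinfo is not installed in this environment")
    else:
        print("AVX supported" if has_avx(flags) else "AVX not reported by this CPU")
```

If the script reports no AVX, a PaddlePaddle build compiled with AVX cannot load on that machine, which would match the DLL initialization failure shown above.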

---

Thanks very much for all this detailed information and testing. Unfortunately, other than abandoning paddlepaddle, there's not much we can do. We've added a warning for 920 chips to the OCR and ALPR modules, which will appear in a future release.

Thanks,

Sean Ewington

CodeProject

---

Other than Blue Iris and Agent DVR, can you use this software with any other CCTV system?

I simply want an app that I can point my CCTV at, which checks for motion and then sends a notification.

---

There's Home Assistant. If you're interested in that option, I have a guide on how to set it up.

As for just an app that detects motion, I would look into cameras that have motion sensors and a companion app, then check that the app can send notifications based on motion. Lots of cameras have apps, or subscriptions for apps, that will do what you're asking. And from what I've read, they're pretty reliable.

For example, I believe the Wyze Cam has motion detection (on one of its firmware builds). Whether or not it can notify the app, I am not certain.

Thanks,

Sean Ewington

CodeProject

---

Thanks for the reply. I have been installing CCTV for years, but there is no AI that works well on NVRs/DVRs.

So I would like to build an integration where an NVR or DVR sends a snapshot on motion to a server, which checks it for a human or vehicle and then sends it to an app on the phone.

I'd be happy to connect and see your Home Assistant videos.
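
For the integration described above, the server-side check could look roughly like the sketch below. The assumptions are a stock local CodeProject.AI Server listening on port 32168, its object detection route at `/v1/vision/detection` accepting a multipart form field named `image`, and a JSON reply containing a `predictions` list with `label` and `confidence` entries. The helper names, the label set and the confidence threshold are illustrative choices, and how the NVR delivers the snapshot and how the phone is notified are left out.

```python
import json
import urllib.request
import uuid

# Assumed defaults for a stock local install; adjust host and port as needed.
DETECT_URL = "http://localhost:32168/v1/vision/detection"
# Illustrative set of labels treated as "human or vehicle".
WANTED = {"person", "car", "truck", "bus", "motorcycle", "bicycle"}


def build_multipart(image_bytes, filename="snapshot.jpg"):
    """Encode one image as a multipart/form-data body with a field named 'image'."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="image"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("ascii")
    tail = f"\r\n--{boundary}--\r\n".encode("ascii")
    return head + image_bytes + tail, f"multipart/form-data; boundary={boundary}"


def detect(image_bytes, url=DETECT_URL, timeout=10):
    """POST a snapshot to the detection endpoint and return the parsed JSON reply."""
    body, content_type = build_multipart(image_bytes)
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": content_type}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def interesting(result, wanted=WANTED, min_confidence=0.5):
    """Keep only confident predictions whose label is a person or vehicle."""
    return [
        p
        for p in result.get("predictions") or []
        if p.get("label", "").lower() in wanted
        and p.get("confidence", 0) >= min_confidence
    ]
```

A motion handler would then call `interesting(detect(snapshot_bytes))` and send a notification only when the returned list is non-empty.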

---

Edit: Bloody hell... It's DNS. It's always DNS. I forgot I had CN IPs blocked. Manually changing to Google's DNS during the install fixed the PEBCAK error. - Derp

When installing ALPR or OCR, it seems paddlepaddle simply refuses to install. I get the following during module install:

OCR: - Installing PaddlePaddle, Parallel Distributed Deep Learning...(failed check) Done

Then, when starting either module, I get the following:

11:46:40:OCR_adapter.py: Traceback (most recent call last):

11:46:40:OCR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\OCR\OCR_adapter.py", line 17, in <module>

11:46:40:OCR_adapter.py: from OCR import init_detect_ocr, read_text

11:46:40:OCR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\OCR\OCR.py", line 12, in <module>

11:46:40:OCR_adapter.py: from paddleocr import PaddleOCR

11:46:40:OCR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\OCR\bin\windows\python39\venv\lib\site-packages\paddleocr\__init__.py", line 14, in <module>

11:46:40:OCR_adapter.py: from .paddleocr import *

11:46:40:OCR_adapter.py: File "C:\Program Files\CodeProject\AI\modules\OCR\bin\windows\python39\venv\lib\site-packages\paddleocr\paddleocr.py", line 21, in <module>

11:46:40:OCR_adapter.py: import paddle

11:46:40:OCR_adapter.py: ModuleNotFoundError: No module named 'paddle'

Not sure what the issue is. Doubtful it's my firewall (pfSense w/pfBlocker) as I've given the machine in question full internet access during the install.

On CP.AI 2.5.6

modified 3-Apr-24 12:13pm.

---

I can't tell whether you actually solved it or not. But did you try running the setup.bat for ALPR to fix this problem? The setup.bat is located in the "AI" folder, and it contains instructions explaining how to use it.

I think that should help. If not, you could try activating the venv and manually installing the requirements (it's not hard, actually).
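
Before installing anything by hand, it can help to confirm which packages the module's venv is actually missing. A minimal sketch, meant to be run with the venv's own Python interpreter (the one under the module's `venv` folder shown in the tracebacks); the import names are taken from those tracebacks and the helper name is made up for illustration:

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the top-level module names that this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Import names taken from the tracebacks in this thread.
wanted = ["paddle", "paddleocr", "PIL"]
print("Interpreter:", sys.executable)
print("Missing:", missing_modules(wanted) or "none")
```

Anything listed as missing failed to install during setup, which points at the download step rather than at the module's code.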

---

Yeah - I solved it. The setup.bat wouldn't have helped, as I forgot I was still blocking China IPs. It simply wasn't downloading the paddlepaddle package... it couldn't. Once I temporarily allowed CN IPs, the install succeeded.

---

Hi, I have installed CodeProject.AI Server for Linux and run it. It is running on my device as expected. But when I try to install the Object Detection (YOLOv5 3.1) module, it throws the error below:

Trace Starting Background AI Modules

Trace Running module using: /usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv5-3.1/bin/linux/python38/venv/bin/python3

Debug

Debug Attempting to start ObjectDetectionYOLOv5-3.1 with /usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv5-3.1/bin/linux/python38/venv/bin/python3 "/usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv5-3.1/detect_adapter.py"

Trace Starting /usr...Ov5-3.1/bin/linux/python38/venv/bin/python3 "/usr...jectDetectionYOLOv5-3.1/detect_adapter.py"

Infor

Infor ** Module 'Object Detection (YOLOv5 3.1)' 1.9.1 (ID: ObjectDetectionYOLOv5-3.1)

Infor ** Valid: True

Infor ** Module Path: <root>/modules/ObjectDetectionYOLOv5-3.1

Infor ** AutoStart: True

Infor ** Queue: objectdetection_queue

Infor ** Runtime: python3.8

Infor ** Runtime Loc: Local

Infor ** FilePath: detect_adapter.py

Infor ** Pre installed: False

Infor ** Start pause: 1 sec

Infor ** Parallelism: 0

Infor ** LogVerbosity:

Infor ** Platforms: all,!macos-arm64

Infor ** GPU Libraries: installed if available

Infor ** GPU Enabled: enabled

Infor ** Accelerator:

Infor ** Half Precis.: enable

Infor ** Environment Variables

Infor ** APPDIR = <root>/modules/ObjectDetectionYOLOv5-3.1

Infor ** DATA_DIR = /etc/codeproject/ai

Infor ** MODE = MEDIUM

Infor ** MODELS_DIR = <root>/modules/ObjectDetectionYOLOv5-3.1/assets

Infor ** PROFILE = desktop_gpu

Infor ** TEMP_PATH = <root>/modules/ObjectDetectionYOLOv5-3.1/tempstore

Infor ** USE_CUDA = True

Infor ** YOLOv5_VERBOSE = false

Infor

Infor Started Object Detection (YOLOv5 3.1) module

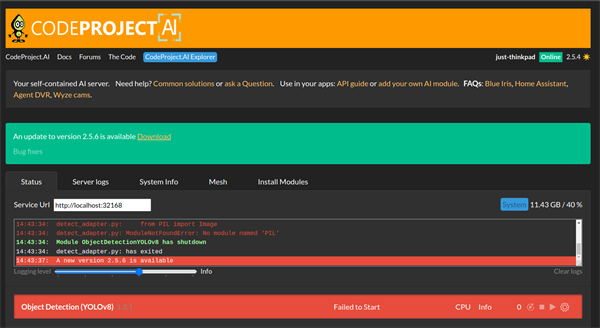

Error detect_adapter.py: Traceback (most recent call last):

Error detect_adapter.py: File "/usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv5-3.1/detect_adapter.py", line 14, in <module>

Error detect_adapter.py: from request_data import RequestData

Error detect_adapter.py: File "/usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv5-3.1/../../SDK/Python/request_data.py", line 8, in <module>

Error detect_adapter.py: from PIL import Image

Error detect_adapter.py: ModuleNotFoundError: No module named 'PIL'

Infor ** Module ObjectDetectionYOLOv5-3.1 has shutdown

Infor detect_adapter.py: has exited

Opening in existing browser session.

Debug Current Version is 2.5.4

Infor *** A new version 2.5.6 is available

How should I overcome this issue?

What I have tried:

I have reinstalled CodeProject.AI Server and tried installing a module again (YOLOv8). It installed, with the output below:

Debug

Debug Attempting to start ObjectDetectionYOLOv8 with /usr/bin/codeproject.ai-server-2.5.4/runtimes/bin/linux/python38/venv/bin/python3 "/usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv8/detect_adapter.py"

Trace Starting /usr...untimes/bin/linux/python38/venv/bin/python3 "/usr...s/ObjectDetectionYOLOv8/detect_adapter.py"

Infor

Infor ** Module 'Object Detection (YOLOv8)' 1.3.1 (ID: ObjectDetectionYOLOv8)

Infor ** Valid: True

Infor ** Module Path: <root>/modules/ObjectDetectionYOLOv8

Infor ** AutoStart: True

Infor ** Queue: objectdetection_queue

Infor ** Runtime: python3.8

Infor ** Runtime Loc: Shared

Infor ** FilePath: detect_adapter.py

Infor ** Pre installed: False

Infor ** Start pause: 1 sec

Infor ** Parallelism: 0

Infor ** LogVerbosity:

Infor ** Platforms: all

Infor ** GPU Libraries: installed if available

Infor ** GPU Enabled: enabled

Infor ** Accelerator:

Infor ** Half Precis.: enable

Infor ** Environment Variables

Infor ** APPDIR = <root>/modules/ObjectDetectionYOLOv8

Infor ** CPAI_HALF_PRECISION = force

Infor ** CUSTOM_MODELS_DIR = <root>/modules/ObjectDetectionYOLOv8/custom-models

Infor ** MODELS_DIR = <root>/modules/ObjectDetectionYOLOv8/assets

Infor ** MODEL_SIZE = Medium

Infor ** USE_CUDA = True

Infor ** YOLOv5_AUTOINSTALL = false

Infor ** YOLOv5_VERBOSE = false

Infor

Infor Started Object Detection (YOLOv8) module

Error detect_adapter.py: Traceback (most recent call last):

Error detect_adapter.py: File "/usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv8/detect_adapter.py", line 12, in <module>

Error detect_adapter.py: from request_data import RequestData

Error detect_adapter.py: File "/usr/bin/codeproject.ai-server-2.5.4/modules/ObjectDetectionYOLOv8/../../SDK/Python/request_data.py", line 8, in <module>

Error detect_adapter.py: from PIL import Image

Error detect_adapter.py: ModuleNotFoundError: No module named 'PIL'

Infor ** Module ObjectDetectionYOLOv8 has shutdown

Infor detect_adapter.py: has exited

Opening in existing browser session.

Debug Current Version is 2.5.4

Infor *** A new version 2.5.6 is available

And sometimes the module automatically stops running.

---

Thanks very much for the report. I believe this may be resolved in 2.6.2. Would you be willing to try it out and see if that helps?

Thanks,

Sean Ewington

CodeProject

---

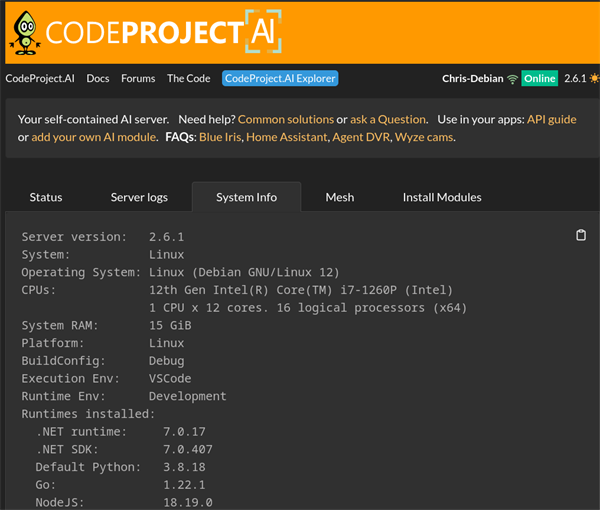

I've just installed a fresh Debian 12.

Installation using the .deb package works fine, but the first install failed to install the modules.

When installing the Python dependencies, Python seems to fail when checking HTTPS certificates, so none of the dependencies get installed.

Debug Current Version is 2.5.4

Infor *** A new version 2.5.6 is available

Infor FaceProcessing: General CodeProject.AI setup

Infor FaceProcessing: Setting permissions on downloads folder...Done

Infor FaceProcessing: Setting permissions on runtimes folder...Done

Infor FaceProcessing: Setting permissions on persisted data folder...Done

Infor FaceProcessing: GPU support

Infor FaceProcessing: CUDA (NVIDIA) Present: No

Infor FaceProcessing: ROCm (AMD) Present: No

Infor FaceProcessing: MPS (Apple) Present: No

Infor FaceProcessing: Reading module settingsGet:5 https:

Infor FaceProcessing: .Fetched 11.1 kB in 1s (16.5 kB/s)

Infor FaceProcessing: Reading package lists.........Done

Infor FaceProcessing: Processing module FaceProcessing 1.10.1

Infor FaceProcessing: Installing Python 3.8

Infor FaceProcessing: Python 3.8 is already installed

Infor FaceProcessing: Ensuring PIP in base python install...

Infor FaceProcessing: Building dependency tree...

Infor FaceProcessing: Reading state information...

Infor FaceProcessing: All packages are up to date.

Infor FaceProcessing: done

Infor FaceProcessing: Upgrading PIP in base python install... done

Infor FaceProcessing: Installing Virtual Environment tools for Linux...

Infor FaceProcessing: Searching for python3-pip python3-setuptools python3.8...installing... Done

Infor FaceProcessing: Creating Virtual Environment (Shared)... Done

Infor FaceProcessing: Checking for Python 3.8...(Found Python 3.8.18) All good

Infor FaceProcessing: Upgrading PIP in virtual environment... done

Infor FaceProcessing: Installing updated setuptools in venv... Done

Infor FaceProcessing: Downloading Face models...Expanding... Done.

Infor FaceProcessing: Moving contents of models-face-pt.zip to assets...done.

Infor FaceProcessing: Installing Python packages for Face Processing

Infor FaceProcessing: Installing GPU-enabled libraries: If available

Infor FaceProcessing: Searching for python3-pip...All good.

Infor FaceProcessing: Ensuring PIP compatibility... Done

Infor FaceProcessing: Python packages will be specified by requirements.linux.txt

Infor FaceProcessing: - Installing Pandas, a data analysis / data manipulation tool... (failed check) Done

Infor FaceProcessing: - Installing CoreMLTools, for working with .mlmodel format models... (failed check) Done

Infor FaceProcessing: - Installing OpenCV, the Open source Computer Vision library... (failed check) Done

Infor FaceProcessing: - Installing Pillow, a Python Image Library... (failed check) Done

Infor FaceProcessing: - Installing SciPy, a library for mathematics, science, and engineering... (failed check) Done

Infor FaceProcessing: - Installing PyYAML, a library for reading configuration files... (failed check) Done

Infor FaceProcessing: - Installing Torch, for Tensor computation and Deep neural networks... (failed check) Done

Infor FaceProcessing: - Installing TorchVision, for Computer Vision based AI... (failed check) Done

Infor FaceProcessing: - Installing Seaborn, a data visualization library based on matplotlib... (failed check) Done

Infor FaceProcessing: Installing Python packages for the CodeProject.AI Server SDK

Infor FaceProcessing: Searching for python3-pip...All good.

Infor FaceProcessing: Ensuring PIP compatibility... Done

Infor FaceProcessing: Python packages will be specified by requirements.txt

Infor FaceProcessing: - Installing Pillow, a Python Image Library... (failed check) Done

Infor FaceProcessing: - Installing Charset normalizer... (failed check) Done

Infor FaceProcessing: - Installing aiohttp, the Async IO HTTP library... (failed check) Done

Infor FaceProcessing: - Installing aiofiles, the Async IO Files library... (failed check) Done

Infor FaceProcessing: - Installing py-cpuinfo to allow us to query CPU info... (failed check) Done

Infor FaceProcessing: - Installing Requests, the HTTP library... (failed check) Done

Error FaceProcessing: Traceback (most recent call last):

Error FaceProcessing: File "intelligencelayer/face.py", line 21, in <module>

Error FaceProcessing: from request_data import RequestData

Error FaceProcessing: File "/usr/bin/codeproject.ai-server-2.5.4/modules/FaceProcessing/../../SDK/Python/request_data.py", line 8, in <module>

Error FaceProcessing: from PIL import Image

Error FaceProcessing: ModuleNotFoundError: No module named 'PIL'

Infor FaceProcessing: Self test: Self-test failed

Trying a manual install within the venv, the following error is thrown:

root@NVR:/usr/bin/codeproject.ai-server-2.5.4/runtimes/bin/linux/python38/venv/bin# source activate

(venv) root@NVR:/usr/bin/codeproject.ai-server-2.5.4/runtimes/bin/linux/python38/venv/bin# python -m pip install pandas

WARNING: pip is configured with locations that require TLS/SSL, however the ssl module in Python is not available.

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError("Can't connect to HTTPS URL because the SSL module is not available.")': /simple/pandas/

WARNING: Retrying (Retry(total=3, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError("Can't connect to HTTPS URL because the SSL module is not available.")': /simple/pandas/

WARNING: Retrying (Retry(total=2, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError("Can't connect to HTTPS URL because the SSL module is not available.")': /simple/pandas/

WARNING: Retrying (Retry(total=1, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError("Can't connect to HTTPS URL because the SSL module is not available.")': /simple/pandas/

WARNING: Retrying (Retry(total=0, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError("Can't connect to HTTPS URL because the SSL module is not available.")': /simple/pandas/

Could not fetch URL https://pypi.org/simple/pandas/: There was a problem confirming the ssl certificate: HTTPSConnectionPool(host='pypi.org', port=443): Max retries exceeded with url: /simple/pandas/ (Caused by SSLError("Can't connect to HTTPS URL because the SSL module is not available.")) - skipping

ERROR: Could not find a version that satisfies the requirement pandas (from versions: none)

ERROR: No matching distribution found for pandas

WARNING: pip is configured with locations that require TLS/SSL, however the ssl module in Python is not available.

Could not fetch URL https://pypi.org/simple/pip/: There was a problem confirming the ssl certificate: HTTPSConnectionPool(host='pypi.org', port=443): Max retries exceeded with url: /simple/pip/ (Caused by SSLError("Can't connect to HTTPS URL because the SSL module is not available.")) - skipping
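
The pip warnings above say the interpreter itself has no `ssl` module, which is a property of how that Python was built rather than of the network or the certificates. A quick way to confirm this from inside the same venv is sketched below; the helper name and its injectable `import_module` parameter are illustrative only.

```python
import importlib


def ssl_status(import_module=importlib.import_module):
    """Return the OpenSSL version string, or None if this build lacks ssl."""
    try:
        ssl = import_module("ssl")
    except ImportError:
        return None
    return ssl.OPENSSL_VERSION


status = ssl_status()
if status is None:
    print("ssl module missing: pip cannot reach HTTPS indexes from this interpreter")
else:
    print(f"ssl module available ({status})")
```

If the module is missing, reinstalling packages will not help until the Python runtime itself is rebuilt or replaced with one that includes OpenSSL support.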

---

I've been working on this for a couple of days now. I had the exact same issue fixed on the Jetson / RPi (forget which) and on Debian in WSL it worked nicely. Debian native? Not so nice, and I'm still trying to sort out what the issue could be.

cheers

Chris Maunder

---

Looks like it was an SSL issue with the build of Python. I think I have that sorted, and once that was fixed everything else just worked. Insider test out tomorrow.

cheers

Chris Maunder
