Webscraping with C#


How to scrape data from a website with C#

- Download sample server - 441.1 KB

- Download sample client - no EXE - 28.6 KB

- Download sample client - 28.6 KB

Introduction

This article is part one of a four-part series.

- Part one - How to web scrape using C# (this article)

- Part two - Web crawling using .NET - concepts

- Part three - Web scraping with C# - point and scrape!

- Part four - Web crawling using .NET - example code (to follow)

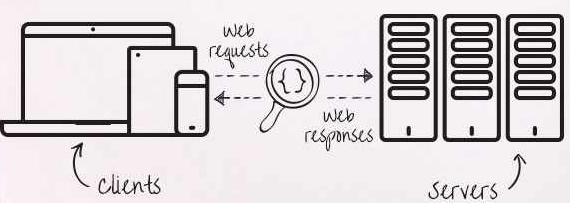

When we think of different sources of data, we generally think of structured or semi-structured data presented to us in SQL databases, web services, CSV files, etc. However, there is a huge volume of data out there that is not available in these nice, easily parsable formats, and much of that data resides in, and is presented to us via, websites. The problem with data on websites is that it is generally not presented in an easy-to-get-at manner; normally, it is mashed up in a blend of HTML and CSS. The job of web scraping is to go under the hood and extract data from websites using code automation, so that we can get it into a format we can work with.

Web scraping is carried out for a wide variety of reasons, but mostly because the data is not available through easier means. It is heavily used by companies in, for example, the price and product comparison business. These companies make a profit by earning a small referral fee for driving a customer to a particular website. In the vast world of the Internet, small referral fees, done correctly, can add up very quickly into handsome bottom lines.

Websites are built in a myriad of different ways: some are very simple, others are complex dynamic beasts. Web scraping, like other things, is part skill, part investigation. Some scrape projects I have been involved with were very tricky indeed, involving both the basics we will cover in this article and advanced 'single page application' data acquisition techniques that we will cover in a further article. Other projects I have completed used little more than the techniques discussed here, so this article is a good starting point if you haven't done any scraping before. There are many reasons for scraping data from websites, but regardless of the reason, we as programmers can be called on to do it, so it's worth learning how. Let's get started.

Background

If we wanted to get a list of the countries of the European Union, for example, and had a database of countries available, we could get the data like this:

select CountryName from CountryList where Region = 'EU'

But this assumes you have a country list hanging around.

Another way is to go to a website that has a list of countries, navigate to the page with a list of European countries, and get the list from there - and that's where web scraping comes in. Web scraping is the process of writing code that combines HTTP calls with HTML parsing to extract semantic meaning from, well, gobbledygook!

Web scraping helps us turn this:

<tbody>
  <tr> <td>AJSON </td><td> <a href="/home/detail/1"> view </a> </td></tr>
  <tr> <td>Fred </td><td> <a href="/home/detail/2"> view </a> </td></tr>
  <tr> <td>Mary </td><td> <a href="/home/detail/3"> view </a> </td></tr>
  <tr> <td>Mahabir </td><td> <a href="/home/detail/4"> view </a> </td></tr>
  <tr> <td>Rajeet </td><td> <a href="/home/detail/5"> view </a> </td></tr>
  <tr> <td>Philippe </td><td> <a href="/home/detail/6"> view </a> </td></tr>
  <tr> <td>Anna </td><td> <a href="/home/detail/7"> view </a> </td></tr>
  <tr> <td>Paulette </td><td> <a href="/home/detail/8"> view </a> </td></tr>
  <tr> <td>Jean </td><td> <a href="/home/detail/9"> view </a> </td></tr>
  <tr> <td>Zakary </td><td> <a href="/home/detail/10"> view </a> </td></tr>
  <tr> <td>Edmund </td><td> <a href="/home/detail/11"> view </a> </td></tr>
  <tr> <td>Oliver </td><td> <a href="/home/detail/12"> view </a> </td></tr>
  <tr> <td>Sigfreid </td><td> <a href="/home/detail/13"> view </a> </td></tr>
</tbody>

into this:

- AJSON

- Fred

- Mary

- Mahabir

- Rajeet

- Philippe

- etc.
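The transformation above can be reproduced with nothing more than the .NET base class library, but only because this particular fragment happens to be well-formed XML. The sketch below loads the fragment with XmlDocument and uses the XPath /tbody/tr/td[1] to pick the first cell of each row. The class name NameTableParser is illustrative and not part of any library. Real-world HTML is rarely well-formed, which is why the rest of this article uses HtmlAgilityPack via ScrapySharp.

```csharp
using System.Collections.Generic;
using System.Xml;

public static class NameTableParser
{
    // Assumes the fragment is well-formed XML. That is true for the sample above,
    // but NOT for most real-world HTML (use HtmlAgilityPack for that).
    public static List<string> ExtractNames(string tbodyFragment)
    {
        var doc = new XmlDocument();
        doc.LoadXml(tbodyFragment);

        var names = new List<string>();
        // XPath positions are 1-based: td[1] is the first cell of each row.
        foreach (XmlNode cell in doc.SelectNodes("/tbody/tr/td[1]"))
        {
            // The sample cells contain trailing spaces ("AJSON "), so trim them.
            names.Add(cell.InnerText.Trim());
        }
        return names;
    }
}
```

Calling NameTableParser.ExtractNames on the fragment returns the names in table order (AJSON, Fred, Mary, ...). The "view" links in the second cell of each row are ignored.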

Now, before we go any further, it is important to point out that you should only scrape data if you are allowed to do so, by virtue of permission, or open access, etc. Take care to read any terms and conditions, and to absolutely stay within any relevant laws that pertain to you. Let's be careful out there kids!

When you design a website, you have the code, you know what data sources you connect to, and you know how things hang together. When you scrape a website, however, you are generally scraping a site you have little knowledge of, and therefore need to go through a process that involves:

- investigation/discovery

- process mapping

- reverse engineering

- html/data parsing

- script automation

Once you get your head around it, web-scraping is a very useful skill to have in your bag of tricks and add to your CV - so let's get stuck in.

Webscraping Tools

There are numerous tools that can be used for web scraping. In this article, we will focus on two: "Fiddler" for reverse engineering the website/page we are trying to extract data from, and the very fine open source "ScrapySharp" library to access the data itself. Naturally, you will also find the developer tools in your favorite browser extremely useful in this regard.

ScrapySharp

ScrapySharp is an open source scrape framework that combines a web client able to simulate a web browser with an HtmlAgilityPack extension for selecting elements using CSS selectors (like jQuery). ScrapySharp greatly reduces the workload, upfront pain and setup normally involved in scraping a web page. By simulating a browser, it takes care of cookie tracking, redirects and the general high-level functions you expect to happen when using a browser to fetch data from a server resource. The power of ScrapySharp lies not only in its browser simulation but also in its integration with HtmlAgilityPack, which allows us to access data in the HTML we download as simply as if we were using jQuery on the DOM inside the web browser.
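To make "simulating a browser" concrete, here is roughly the bookkeeping you would have to do yourself with the plain .NET HttpClient if you were not using ScrapySharp: keep a cookie jar, follow redirects, and send a user-agent string. This is a sketch using System.Net.Http, not a description of how ScrapySharp is implemented internally. The class name BrowserLikeClient and the user-agent value are illustrative.

```csharp
using System.Net;
using System.Net.Http;

public static class BrowserLikeClient
{
    // Builds an HttpClient that handles some of the bookkeeping a browser
    // (or a ScrapingBrowser) would otherwise do for you.
    public static HttpClient Create(CookieContainer cookies, string userAgent)
    {
        var handler = new HttpClientHandler
        {
            UseCookies = true,
            CookieContainer = cookies,     // stores Set-Cookie values and resends them
            AllowAutoRedirect = true,      // follows HTTP 3xx redirects automatically
            MaxAutomaticRedirections = 10
        };

        var client = new HttpClient(handler);
        // Some sites serve different content (or refuse service) based on this header.
        client.DefaultRequestHeaders.TryAddWithoutValidation("User-Agent", userAgent);
        return client;
    }
}
```

Sharing one CookieContainer across requests is what lets a session cookie set by one page be sent with the next. HttpClientHandler only follows HTTP 3xx redirects. A meta-refresh redirect embedded in the HTML (the kind of redirect ScrapingBrowser's AllowMetaRedirect setting refers to) would still be up to you.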

Fiddler

Fiddler is a development proxy that sits on your local machine and intercepts all calls from your browser, making them available to you for analysis.

Fiddler is useful not only for reverse engineering web traffic when performing web scrapes, but also for web-session manipulation, security testing, performance testing, and traffic recording and analysis. Fiddler is an incredibly powerful tool and will save you a huge amount of time, not only in reverse engineering but also in troubleshooting your scraping efforts. Download and install Fiddler, and then toggle intercept mode by pressing "F12". Let's walk through Fiddler and get to know the basics so we can get some work done.

The following screenshot shows the main areas we are interested in:

- On the left, all traffic captured by Fiddler is shown. This includes your main web page and any additional requests made to download images, supporting CSS/JS files, keep-alive heartbeat pings, etc. As an aside, it's interesting (and very revealing) to run Fiddler for a short while for no other reason than to see what's sending HTTP traffic on your machine!

- When you select a traffic source/item on the left, you can view detail about that item on the right in different panels.

- The panel I mostly find myself using is the "Inspectors" area, where I can view the content of pages/data being transferred both to and from the server.

- The filters area allows you to cut out a lot of the 'noise' that travels through HTTP. Here, for example, you can tell Fiddler to filter and show only traffic from a particular URL.

By way of example, here I have both Bing and Google open, but because I have the filter on Bing, only traffic for it gets shown:

Here is the filter being set:

Before we move on, let's check out the inspectors area - this is where we will examine the detail of traffic and ensure we can mirror and replay exactly what's happening when we need to carry out the scrape itself.

The inspector section is split into two parts. The top part gives us information on the request that is being sent. Here, we examine request headers, details of any form data being posted, cookies, json/xml data, and of course the raw content. The bottom part lists out information relating to the response received from the server. This would include multiple different views of the webpage itself (if that's what has been sent back), cookies, auth headers, json/xml data, etc.

Setup

In order to present this article in a controlled manner, I have put together a simple MVC server project that we can use as a basis for scraping. Here's how it's set up:

A class called SampleData stores some simple data that we can scrape against. It contains a list of people and a list of countries, with a simple link between the two: each person's Nationality holds a country ID. The two item classes look like this:

public class PersonData
{
    public int ID { get; set; }
    public string PersonName { get; set; }
    public int Nationality { get; set; }

    public PersonData(int id, int nationality, string Name)
    {
        ID = id;
        PersonName = Name;
        Nationality = nationality;
    }
}

public class Country
{
    public int ID { get; set; }
    public string CountryName { get; set; }

    public Country(int id, string Name)
    {
        ID = id;
        CountryName = Name;
    }
}

Data is then added in the SampleData constructor:

public class SampleData
{
    public List<Country> Countries;
    public List<PersonData> People;

    public SampleData()
    {
        Countries = new List<Country>();
        People = new List<PersonData>();

        Countries.Add(new Country(1, "United Kingdom"));
        Countries.Add(new Country(2, "United States"));
        Countries.Add(new Country(3, "Republic of Ireland"));
        Countries.Add(new Country(4, "India"));
        // ..etc..

        People.Add(new PersonData(1, 1, "AJSON"));
        People.Add(new PersonData(2, 2, "Fred"));
        People.Add(new PersonData(3, 2, "Mary"));
        // ..etc..
    }
}

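We set up a controller to serve the data. Note that a plain GET request to /home/FormData is simply redirected back to the index page:

[HttpGet]
public ActionResult FormData()
{
    return Redirect("/home/index");
}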

and a page view to present it to the user:

@model SampleServer.Models.SampleData
<table border="1" id="PersonTable">
  <thead>
    <tr>
      <th>Person's name</th>
      <th>View detail</th>
    </tr>
  </thead>
  <tbody>
    @foreach (var person in Model.People)
    {
      <tr>
        <td>
          @person.PersonName
        </td>
        <td>
          <a href="/home/detail/@person.ID">view </a>
        </td>
      </tr>
    }
  </tbody>
</table>

We also create a simple form that we can use to test posting against:

<form action="/home/FormData" id="dataForm" method="post">
  <label>Username</label>
  <input id="UserName" name="UserName" value="" />
  <label>Gender</label>
  <select id="Gender" name="Gender">
    <option value="M">Male</option>
    <option value="F">Female</option>
  </select>
  <button type="submit">Submit</button>
</form>

Finally, to complete our setup, we build two controller/view pairs:

- one to accept the posted form data and confirm that the post succeeded, and

- one to handle the view-detail page.

Controllers:

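// Named Detail so the default {controller}/{action}/{id} route maps
// the "view" links (/home/detail/{id}) to this action
public ActionResult Detail(int id)
{
    SampleData SD = new SampleData();
    SD.SetSelected(id);
    return View(SD);
}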

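// Handles the form post; the parameter list differs from the GET
// version so both actions can live in the same controller
[HttpPost]
public ActionResult FormData(FormCollection FD)
{
    // FD holds the posted form fields (UserName, Gender)
    ViewBag.Name = FD.GetValues("UserName").First();
    ViewBag.Gender = FD.GetValues("Gender").First();
    return View("~/Views/Home/PostSuccess.cshtml");
}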

Views:

Post success view (PostSuccess.cshtml):

Success! Data received successfully.
@ViewBag.Name
@ViewBag.Gender

View-detail page:

@model SampleServer.Models.SampleData
<label>Selected person: @Model.SelectedName</label>
<label>Country:
  <select>
    @foreach (var Country in Model.Countries)
    {
      if (Country.ID == Model.SelectedCountryID)
      {
        <option selected="selected" value="@Country.ID">@Country.CountryName</option>
      }
      else
      {
        <option value="@Country.ID">@Country.CountryName</option>
      }
    }
  </select>
</label>
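The view-detail action and page rely on SetSelected, SelectedName and SelectedCountryID, which do not appear in the SampleData listing. The sketch below shows one hypothetical way to implement them. The member names come from the code above, but their bodies are my assumption. The data is trimmed to two countries and two people so the sketch stands alone.

```csharp
using System.Collections.Generic;
using System.Linq;

public class PersonData
{
    public int ID { get; set; }
    public string PersonName { get; set; }
    public int Nationality { get; set; }

    public PersonData(int id, int nationality, string name)
    {
        ID = id;
        Nationality = nationality;
        PersonName = name;
    }
}

public class Country
{
    public int ID { get; set; }
    public string CountryName { get; set; }

    public Country(int id, string name)
    {
        ID = id;
        CountryName = name;
    }
}

public class SampleData
{
    public List<Country> Countries = new List<Country>
    {
        new Country(1, "United Kingdom"),
        new Country(2, "United States")
    };

    public List<PersonData> People = new List<PersonData>
    {
        new PersonData(1, 1, "AJSON"),
        new PersonData(2, 2, "Fred")
    };

    // Hypothetical: the values the view-detail page reads.
    public string SelectedName { get; private set; } = "";
    public int SelectedCountryID { get; private set; }

    // Hypothetical: look the person up by ID and remember their name and country.
    public void SetSelected(int personId)
    {
        PersonData person = People.FirstOrDefault(p => p.ID == personId);
        SelectedName = person?.PersonName ?? "";
        SelectedCountryID = person?.Nationality ?? 0;
    }
}
```

For an unknown ID, the view shows an empty name and no selected country, because the sample country IDs start at 1.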

Running our server, we now have some basic data to scrape and test against:

Web Scraping Basics

Earlier in the article, I referred to scraping being a multi-stage process. Unless you are doing a simple scrape like the example we will look at here, you will generally go through a process of investigating what the website presents, discovering what's there, and mapping it out. This is where Fiddler comes in useful.

With your browser open and Fiddler intercepting traffic from the site you want to scrape, you move around the site, letting Fiddler capture the traffic and workflow. You can then save the Fiddler session and use it as a reference process flow to reverse engineer your scraping against, comparing what you know works in the browser with what you are trying to make work in your scraping code. When you run your scraping code alongside your saved browser session in Fiddler, you can easily spot the gaps, see what's happening, and logically build up your own automation script.
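To get your scraping code's traffic into Fiddler so you can compare it with the saved browser session, you can point your HTTP client at Fiddler's proxy. The sketch below uses the plain .NET HttpClientHandler rather than ScrapySharp, so check the ScrapySharp documentation for its own proxy settings. It assumes Fiddler is listening on its default address of 127.0.0.1:8888. The class name FiddlerCapture is illustrative.

```csharp
using System.Net;
using System.Net.Http;

public static class FiddlerCapture
{
    // Fiddler's default listening address (the port can be changed in Fiddler's options).
    public const string DefaultProxyAddress = "http://127.0.0.1:8888";

    // Creates a handler that sends requests through Fiddler, so the scraper's
    // traffic shows up in Fiddler next to the saved browser session.
    public static HttpClientHandler CreateHandler(string proxyAddress = DefaultProxyAddress)
    {
        return new HttpClientHandler
        {
            Proxy = new WebProxy(proxyAddress),
            UseProxy = true
        };
    }
}
```

Usage: new HttpClient(FiddlerCapture.CreateHandler()). Depending on your setup, there are two things to watch. First, HTTPS traffic is only readable once HTTPS decryption is enabled in Fiddler and its root certificate is trusted. Second, some .NET versions send requests for localhost directly, bypassing any proxy. If requests to the sample server do not appear, try the machine name instead of localhost.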

Scraping is rarely as easy as pointing at a page and pulling down data. Normally, data is scattered around a website in a particular way, and you need to analyse how the user interacts with the website to reverse engineer the process. You will find data located within tables, drop-down lists and divs. You will equally find that some data is not rendered server-side with the page at all, but is loaded into place indirectly by an Ajax call or other JavaScript. Throughout, Fiddler is your friend for comparing what's happening in the browser with the network traffic occurring in the background. For complex scrapes, I often find it useful to build up a flow chart that shows how to move around the website for different pieces of data.
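When Fiddler shows that the data arrives via an Ajax call, it is often simpler to call that endpoint directly and parse its JSON than to scrape the rendered page. The sample server in this article has no such endpoint. Both the URL you pass in and the JSON shape (an array of objects with a PersonName field) are hypothetical; take the real ones from the request and response you see in Fiddler. The sketch uses HttpClient and System.Text.Json, which is built into .NET Core 3.0 and later and available as a NuGet package for older frameworks.

```csharp
using System.Collections.Generic;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

public static class AjaxEndpointSketch
{
    // Pulls the "PersonName" field out of a JSON array such as
    // [{"ID":1,"PersonName":"AJSON"}, ...]. The shape is an assumption.
    public static List<string> ParseNames(string json)
    {
        var names = new List<string>();
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            foreach (JsonElement item in doc.RootElement.EnumerateArray())
            {
                names.Add(item.GetProperty("PersonName").GetString());
            }
        }
        return names;
    }

    // Calls the (hypothetical) endpoint directly, the way the page's own script would.
    public static async Task<List<string>> FetchNamesAsync(HttpClient client, string endpointUrl)
    {
        using (var request = new HttpRequestMessage(HttpMethod.Get, endpointUrl))
        {
            // jQuery and some other Ajax libraries send this header; some servers check for it.
            request.Headers.TryAddWithoutValidation("X-Requested-With", "XMLHttpRequest");
            HttpResponseMessage response = await client.SendAsync(request);
            response.EnsureSuccessStatusCode();
            return ParseNames(await response.Content.ReadAsStringAsync());
        }
    }
}
```

Mirror whatever headers and cookies the browser sent in Fiddler. The X-Requested-With header here is just a common example.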

When analysing and trying to duplicate a process in your web scrape, be aware of non-obvious mechanisms the website uses to manage state. For example, it is not uncommon for session state and the user's location within the website to be maintained server-side. In this case, you cannot simply jump from page to page scraping data as you please. Instead, you must follow the breadcrumb path that the website wants you to "walk", because most likely the order in which you do things and call pages is triggering something server-side. A final thought on this: check that the page data you get back is what you expect. By that, I mean that if you are navigating from one page to another, you should look for something unique on the page that you can rely on to confirm that you are on the page you requested. This might be a page title, a particular piece of CSS, a selected menu item, etc. I have found that unexpected things can happen in scraping, and finding out what's gone wrong can be quite tedious when you are faced with raw HTML to trawl through.
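Here is a minimal sketch of the "am I on the page I think I am?" check described above, assuming the page title is the unique marker. A regular expression is good enough for pulling out a single <title> element, but use a real HTML parser such as HtmlAgilityPack for anything more involved. The class name PageGuard and the titles used are illustrative.

```csharp
using System;
using System.Net;
using System.Text.RegularExpressions;

public static class PageGuard
{
    // Returns the decoded, trimmed contents of the first <title> element, or null if none.
    public static string ExtractTitle(string html)
    {
        Match m = Regex.Match(html, @"<title[^>]*>(.*?)</title>",
                             RegexOptions.IgnoreCase | RegexOptions.Singleline);
        return m.Success ? WebUtility.HtmlDecode(m.Groups[1].Value).Trim() : null;
    }

    // Throws if the page title does not contain the expected text, so a login or
    // error page fails loudly instead of silently producing bad data.
    public static void EnsureOnPage(string html, string expectedTitle)
    {
        string title = ExtractTitle(html);
        if (title == null || title.IndexOf(expectedTitle, StringComparison.OrdinalIgnoreCase) < 0)
        {
            string actual = title ?? "(no title)";
            throw new InvalidOperationException(
                $"Expected a page titled '{expectedTitle}' but got '{actual}'.");
        }
    }
}
```

Call EnsureOnPage with the raw HTML of each page right after you navigate to it, before parsing anything out of it.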

The most important thing for being productive in web-scraping is to break things into small, easily reproducible steps, and follow the pattern you build up in Fiddler.

Web Scraping Client

For this article, I have created a simple console project that will act as the scrape client. The first thing to do is add the ScrapySharp library using NuGet, and import the namespaces we need to get started.

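PM> Install-Package ScrapySharp

using System;                    // Uri
using System.Collections.Generic; // List<string>
using System.Linq;               // First()
using HtmlAgilityPack;
using ScrapySharp.Extensions;
using ScrapySharp.Network;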

To get things moving, run the MVC sample server that we are going to use as our scrape guinea pig. In my case, it's running on "localhost:51621". If we load the server in our browser and look at the source, we will see that the page title has a unique class name. We can use this to scrape the value. Let's make this our "Hello world" of web scraping.

In our console, we create a ScrapingBrowser object (our virtual browser) and set up whatever defaults we require. This may include allowing (or not) auto-redirects, setting the user-agent name, allowing cookies, etc.

ScrapingBrowser Browser = new ScrapingBrowser();
Browser.AllowAutoRedirect = true; // Browser has settings you can access in setup
Browser.AllowMetaRedirect = true;

The next step is to tell the browser to load a page and then, using the magic of CssSelect, reach in and pick out our unique page title. Because our investigation showed that the title has a unique class name, we can use the class selector notation ".NAME" to find it and get the value. We generally access items as an HtmlNode or a collection of HtmlNode objects, and get the actual value by examining the InnerText of the returned node.

WebPage PageResult = Browser.NavigateToPage(new Uri("http://localhost:51621/"));
HtmlNode TitleNode = PageResult.Html.CssSelect(".navbar-brand").First();
string PageTitle = TitleNode.InnerText;

And there it is...

The next thing we will do is scrape a collection of items, in this case the names from the table we created. To do this, we create a string list to capture the data and query our page results for particular nodes. Here, we first find the table with the id "PersonTable", then iterate through its rows using the XPath "tbody/tr". We only want the first cell, which contains the person's name, so we select it with "td[1]" (XPath positions start at 1, not 0).

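List<string> Names = new List<string>();
var Table = PageResult.Html.CssSelect("#PersonTable").First();
foreach (var row in Table.SelectNodes("tbody/tr"))
{
    // td[1] is the first cell in the row (XPath indexes from 1)
    foreach (var cell in row.SelectNodes("td[1]"))
    {
        // InnerText includes the whitespace around the name in the view, so trim it
        Names.Add(cell.InnerText.Trim());
    }
}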

and the resulting output as we expect:

AJSON
Fred
Mary
Mahabir
Rajeet
Philippe...etc...

The final thing we will look at for the moment is capturing and sending back a form. As you may now expect, the trick is to navigate to the form you want, and do something with it.

To use forms, we need to add a namespace:

using ScrapySharp.Html.Forms;

While in most cases you can simply look at the HTML source to find form field names, in some cases (due to obfuscation or perhaps JavaScript interception) you will find it useful to look in Fiddler to see exactly which names and values are being sent, so that you can emulate them when posting your data.

In this Fiddler screenshot, we can see the form data being sent in the request, and also the response sent back by the server:

The code to locate the form, fill in field data and submit is very simple:

// find a form and send back data
PageWebForm form = PageResult.FindFormById("dataForm");

// assign values to the form fields
form["UserName"] = "AJSON";
form["Gender"] = "M";
form.Method = HttpVerb.Post;
WebPage resultsPage = form.Submit();

The critical points to note when submitting form data are:

- ensure you are sending back *exactly* the form fields you captured in Fiddler, and

- check the response (resultsPage above) to make sure the server has accepted your data.
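To see exactly what the browser sends for the "dataForm" form, and what you should expect to find in Fiddler's request inspector, the sketch below builds the same application/x-www-form-urlencoded body with HttpClient's FormUrlEncodedContent. This is a plain .NET alternative to ScrapySharp's form.Submit(), not a description of how ScrapySharp works. The class and method names are illustrative. For the sample server, the URL passed to PostAsync would be the form's action URL, http://localhost:51621/home/FormData.

```csharp
using System.Collections.Generic;
using System.Net.Http;
using System.Threading.Tasks;

public static class FormPostSketch
{
    // Builds the same url-encoded body the browser sends for the sample form.
    public static FormUrlEncodedContent BuildBody(string userName, string gender)
    {
        return new FormUrlEncodedContent(new[]
        {
            new KeyValuePair<string, string>("UserName", userName),
            new KeyValuePair<string, string>("Gender", gender)
        });
    }

    // Posts the body to the form's action URL and returns the response HTML.
    public static async Task<string> PostAsync(HttpClient client, string formUrl,
                                               string userName, string gender)
    {
        HttpResponseMessage response = await client.PostAsync(formUrl, BuildBody(userName, gender));
        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsStringAsync();
    }
}
```

For the values used above, the body is UserName=AJSON&Gender=M. Compare that string with the request body Fiddler captured from the browser.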

Downloading Binary Files From Websites

Getting and saving binary files such as PDFs is very simple. We point to the URL and save the bytes sent to us in the raw response body. Here is an example (where SaveFolder and FileName are set previously):

// Uri needs an absolute address including the scheme (http:// or https://)
WebPage PDFResponse = Browser.NavigateToPage(new Uri("http://MyWebsite.com/SomePDFFileName.pdf"));
// Path.Combine (System.IO) adds the directory separator if SaveFolder lacks one
File.WriteAllBytes(Path.Combine(SaveFolder, FileName), PDFResponse.RawResponse.Body);
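To guard against saving an HTML error or login page with a .pdf extension, you can check the "%PDF" signature that PDF files start with. The sketch below does this while performing the same download with the plain .NET HttpClient instead of ScrapySharp. The class and method names are illustrative. Taking the file name from the last segment of the URL is an assumption that won't hold for every site.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Threading.Tasks;

public static class BinaryDownload
{
    // Derives a local file name from the last segment of the URL path.
    public static string BuildSavePath(string saveFolder, Uri fileUrl)
    {
        string fileName = Path.GetFileName(fileUrl.AbsolutePath);
        return Path.Combine(saveFolder, fileName);
    }

    // PDF files start with the ASCII bytes "%PDF"; an HTML error or login page will not.
    public static bool LooksLikePdf(byte[] body)
    {
        return body != null && body.Length >= 4 &&
               body[0] == (byte)'%' && body[1] == (byte)'P' &&
               body[2] == (byte)'D' && body[3] == (byte)'F';
    }

    // Downloads the file and saves it only if it really is a PDF.
    public static async Task DownloadPdfAsync(HttpClient client, Uri fileUrl, string saveFolder)
    {
        byte[] body = await client.GetByteArrayAsync(fileUrl);
        if (!LooksLikePdf(body))
            throw new InvalidDataException($"{fileUrl} did not return a PDF.");
        File.WriteAllBytes(BuildSavePath(saveFolder, fileUrl), body);
    }
}
```

The same LooksLikePdf check can be applied to PDFResponse.RawResponse.Body in the ScrapySharp version above before writing the file.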

May 2016 - Update on Webscraping and the Law

I was at a law lecture recently and learned of a very interesting and relevant legal case about web scraping. Ryanair is one of the largest, if not the largest, budget airlines in Europe (as of 2016). The airline recently took legal action against a number of air-ticket price comparison companies/websites, stating that they were illegally scraping price data from Ryanair's website. There were a number of different, legally technical aspects to the case, and if you are into that kind of thing (bring it on!), it's worth a read. However, the bottom line is that a judgement was made stating that Ryanair could take action against the web scrapers *for breaching their terms and conditions*. Ryanair's terms and conditions expressly prohibited 'the use of an automated system or software to extract data from the website for commercial purposes, unless Ryanair consented to the activity'. One interesting aspect of the case is that in order to actually view the pricing information, a user of the site had to implicitly agree to Ryanair's terms and conditions - something that the scrapers clearly did programmatically, thereby adding fuel to the legal fire. The implication here is that there is now specific case law (in Europe at least) allowing websites to use a clause in their terms and conditions to legally block scrapers. This has huge implications and the impact is yet to be determined - so, as always, when in doubt, consult your legal eagle!

More reading on this:

- Data for the taking: using website terms and conditions to combat web scraping

- Data for the Taking: Using the Computer Fraud and Abuse Act to Combat Web Scraping

Roundup

That's the very basics covered. More to come, watch this space.

If you liked this article, please give it a vote above!

History

- Version 1 - 20th October, 2015

- Version 2 - 10th December, 2015 - added file download code

- Version 3 - 26th March, 2016 - added links to related articles

- Version 4 - 10th May, 2016 - added update about legal implications of web scraping and new case law