Building a Deep Learning Environment in Python with Intel and Anaconda

In this article, we’ll explore how to create a DL environment with optimized Intel packages.

Deep learning (DL) is a swiftly advancing field: model complexity is growing rapidly, and so is the software ecosystem that tries to keep up with it. Millions of students, developers, and researchers rely on an efficient DL setup on their own computers to stay at the forefront of the industry. To support the field and those working within it, Intel and Anaconda have collaborated to provide continuously improved and optimized tools for everyday and advanced DL tasks.

Intel develops high-performance software for DL, and its Python and TensorFlow products come with integrated optimizations from the oneMKL and oneDNN libraries. Anaconda makes it easy to install and update these packages without managing installations and dependencies by hand, helping keep your DL environments up to date and optimized for modern processor architectures.

We’ll use the Anaconda Navigator desktop application to build the DL environment.

Before we begin, make sure that you have downloaded Anaconda and set it up on your machine.

Creating a Deep Learning Environment

In this article, we build a DL environment for computer vision (CV). This environment includes tools to handle the usual tasks in DL applications for CV, such as working with images, creating neural networks, training, and testing neural network models. We use the following components to build the environment:

- Intel® Distribution for Python for use as a kernel of the environment

- Pillow library for loading and processing images

- Intel® Optimization for TensorFlow* to build neural networks and to fit and test DL models

- Jupyter* Notebook web application to develop, execute, and test Python scripts

First, start your installed Anaconda Navigator application and go to the Environments tab.

Because we use Intel-optimized packages in the environment, let’s first add a new distribution channel, the Intel channel. Click the Channels button and add the new channel, intel, in the window that opens. Then click Update channels to finish the operation.

Now, let’s add the new environment. Click Create and input the name of the environment in the Create new environment text box. We use intel_dl_env.

Confirm the creation of the new environment in the window. The Anaconda Navigator application creates and activates the environment.

Now, we’re ready to install the required packages in our DL environment.

First, let’s install the Intel Distribution for Python. Select All in the package box and input “intel” in the search area, as shown below. Anaconda Navigator offers all suitable packages with descriptions and versions in the grid. Find the intelpython3_core and intelpython3_full packages in the list and mark them for installation.

Click Apply to continue. In this step, Anaconda checks all the package dependencies and shows detailed information about the installation in a separate window.

Confirm the installation by clicking Apply. The process can take a couple of minutes.
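For readers who prefer the command line, the Navigator steps so far can be approximated with the conda CLI. The sketch below only assembles and prints the commands; it does not run them. The helper name conda_setup_commands is hypothetical, and the mapping from Navigator clicks to these commands is our assumption rather than something Navigator documents.

```python
import shlex


def conda_setup_commands(env_name="intel_dl_env", channel="intel",
                         packages=("intelpython3_core", "intelpython3_full")):
    """Return conda CLI calls that roughly mirror the Navigator steps.

    Hypothetical helper: the names default to the ones used in this article.
    """
    return [
        # Add the Intel channel to the conda configuration.
        ["conda", "config", "--add", "channels", channel],
        # Create the (initially empty) environment.
        ["conda", "create", "--name", env_name, "--yes"],
        # Install the Intel Distribution for Python packages into it.
        ["conda", "install", "--name", env_name, "--yes", *packages],
    ]


# Print the commands so they can be reviewed before running them in a terminal.
for command in conda_setup_commands():
    print(shlex.join(command))
```

Printing the commands instead of executing them keeps the sketch safe to run anywhere; paste the output into a terminal only after checking it against your own setup.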

When the installation is complete, we should verify that it was successful and that we’ve installed the appropriate packages in our environment. Run the terminal from the environment, as shown in the following image.

In the opened terminal, execute the following command:

python --version

We see that the Intel version of Python works in our DL environment.
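The same check can be made from inside Python. This is a minimal sketch that assumes the Intel build mentions "Intel" in its version banner; the exact banner text may differ between releases, so treat the result as a hint rather than proof. The function name is_intel_python is hypothetical.

```python
import sys


def is_intel_python(version_string=None):
    """Heuristic check: does the version banner mention Intel?

    Assumption: Intel builds include the word "Intel" in sys.version.
    """
    text = sys.version if version_string is None else version_string
    return "intel" in text.lower()


print(sys.version)
print("Looks like an Intel build:", is_intel_python())
```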

We use the Intel Distribution for Python in our environment because of its performance. Intel speeds up Python by using optimized routines as the back-end implementations of various Python functions.

Our next step is installing Intel Optimization for TensorFlow. This installation is analogous to the previous one. Input “tensorflow” in the search box, find the tensorflow and tensorflow-base packages in the list, and mark them for installation.

Click Apply, and the application once again shows a window with the packages to modify and install.

Confirm the installation and wait for the process to finish.

Let’s look at what sets Intel Optimization for TensorFlow apart from the standard version. Its optimizations fall into two major categories. The first is the optimization of the execution graph. Because TensorFlow processes any deep neural network (DNN) model as an execution graph, optimizing that graph can significantly increase the speed of model training and testing.

The second is the use of the Intel® oneAPI Deep Neural Network Library (oneDNN). The oneDNN library provides building blocks for convolutional neural networks (CNNs), such as convolutions, pooling, and rectified linear units (ReLU), optimized for the latest processor architectures.
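To make these building blocks concrete, here is a naive NumPy sketch of what a convolution, a ReLU, and a max pooling step compute. It is a reference illustration only: it is not how oneDNN implements them, and the function names and the 5x5 sample input are our own choices.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Naive 2D convolution (cross-correlation, as in CNNs) without padding."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the window by the kernel element-wise and sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Rectified linear unit: negative values become zero."""
    return np.maximum(x, 0)


def max_pool2d(x, size=2):
    """Non-overlapping max pooling over size x size blocks."""
    h, w = x.shape[0] // size, x.shape[1] // size
    trimmed = x[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


# Hypothetical 5x5 input with negative and positive values.
image = np.arange(25, dtype=np.float32).reshape(5, 5) - 12.0
kernel = np.ones((2, 2), dtype=np.float32)

features = max_pool2d(relu(conv2d_valid(image, kernel)))
print(features)
```

The explicit Python loops make the arithmetic easy to follow but are slow; libraries such as oneDNN exist precisely to replace this kind of code with implementations tuned for the processor.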

Installing Intel Optimization for TensorFlow increases DNN model training and testing speed. Intel and Google collaborate to update this package with improvements for CPUs, GPUs, and other processors. Keeping it up to date using Anaconda ensures that your environment keeps up with the latest software innovations to address growing deep learning model demands.

The next part of the environment is the Pillow library, an image processing library that supports many common image file formats and provides image operations like flipping, rotation, and resizing. The TensorFlow framework uses this library in its image preprocessing module, so the library must be available in our environment to work with image data. Install the Pillow library into the environment with Anaconda. The installation is straightforward, so we won’t cover it here.
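Once Pillow is installed, the operations mentioned above can be tried in a few lines. This sketch builds a small synthetic image in memory, so it needs no file on disk; the 64x32 size and the marker pixel are arbitrary choices for illustration.

```python
from PIL import Image, ImageOps

# Synthetic 64x32 grayscale image (mode "L"), all black.
img = Image.new("L", (64, 32), color=0)
img.putpixel((0, 0), 255)  # mark the top-left corner in white

flipped = ImageOps.mirror(img)         # horizontal (left-right) flip
rotated = img.rotate(90, expand=True)  # rotate counterclockwise, grow canvas to fit
resized = img.resize((32, 16))         # target size is (width, height)

# The white marker has moved from the top-left to the top-right corner.
print(flipped.getpixel((63, 0)))
print(rotated.size, resized.size)
```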

The last ingredient of our DL environment is the Jupyter Notebook application, web-based software we can use to develop scientific Python programs. This application’s key feature is interactive computing: code runs step by step, and every intermediate result is displayed as soon as it is produced.

To activate the application in the new environment, go to the Home tab and click Install under the Jupyter Notebook icon.
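One way to confirm that the pieces are in place is to ask Python whether each package can be found, without actually importing it. This is a small sketch; the import names PIL, tensorflow, and notebook are the usual module names for these packages, and the helper missing_packages is hypothetical.

```python
import importlib.util

# Display name -> importable module name (assumed standard module names).
REQUIRED_MODULES = {
    "Pillow": "PIL",
    "TensorFlow": "tensorflow",
    "Jupyter Notebook": "notebook",
}


def missing_packages(modules=REQUIRED_MODULES):
    """Return the display names of packages that cannot be located."""
    return [
        label
        for label, module in modules.items()
        if importlib.util.find_spec(module) is None
    ]


print("Missing:", missing_packages() or "nothing")
```

Run it from a terminal opened in the environment so that it checks intel_dl_env rather than the base installation.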

Now our DL environment is ready to deal with everyday image recognition tasks! Let’s start the Jupyter Notebook application and explore how to write a simple program in our DL environment.

First, we create a separate working directory to store all code and data files. On our computer, we created C:\intel_dl_workfolder for this purpose. Start a terminal in the DL environment and change the current location to this working directory. Then execute the following command:

jupyter notebook

The application starts in the working folder, as shown in the picture below.

Copy the URL from the terminal and open it in your preferred web browser. Create a new notebook file by clicking New and then Python 3.

Finally, let’s write and run some simple Python code to ensure that the DL environment has the TensorFlow version powered by oneDNN and that we have all the required functionality. Run the following code in the created notebook:

import os

os.environ['TF_ENABLE_ONEDNN_OPTS'] = '1'

import tensorflow as tf

print(tf.__version__)

t1 = tf.constant([[1., 2.], [3., 4.]])

print(t1)

When we run the code on our computer, we get the following output:

2.7.0

tf.Tensor(

[[1. 2.]

[3. 4.]], shape=(2, 2), dtype=float32)

The first two lines of the code set the environment variable TF_ENABLE_ONEDNN_OPTS to 1 before TensorFlow is imported. Starting with TensorFlow 2.9, the oneDNN optimizations are enabled by default, but the version we run here, 2.7.0, predates that change. We therefore set the variable explicitly to ensure that the oneDNN optimizations of TensorFlow are enabled in our environment.
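The order matters in a notebook, where an earlier cell may already have imported TensorFlow. The sketch below sets the variable and reports whether it was set in time; it assumes that TensorFlow reads the variable during its own initialization, which is why setting it before the import is the safe order. The helper name enable_onednn is hypothetical.

```python
import os
import sys


def enable_onednn(flag="1"):
    """Set TF_ENABLE_ONEDNN_OPTS and report whether it was set in time.

    Returns False if TensorFlow had already been imported in this process,
    in which case the new value may not take effect until a restart.
    """
    already_imported = "tensorflow" in sys.modules
    os.environ["TF_ENABLE_ONEDNN_OPTS"] = flag
    return not already_imported


if not enable_onednn():
    print("TensorFlow is already loaded; restart the kernel for the setting to apply.")
```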

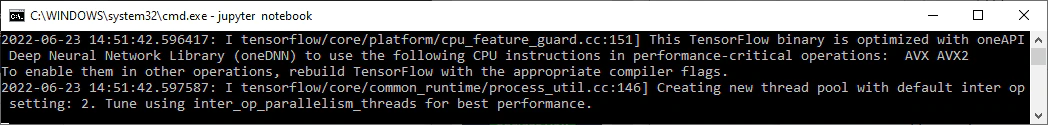

In the last two lines of the code, we create a Tensor instance and print its value to confirm that our TensorFlow installation works. In the terminal output, we got the following message:

The message shows that we use the version of TensorFlow that’s optimized with the oneDNN library.

Next, let’s test image loading from a file:

from tensorflow.keras.preprocessing.image import load_img

img = load_img("c:\\intel_dl_work\\1.png", color_mode="grayscale")

print(img.mode)

print(img.size)

img

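The code reads an image from the file c:\intel_dl_work\1.png (the backslashes are doubled in the Python string literal), prints the image’s mode and size, and displays the image. Adjust the path to match your own working directory. The picture below is the input and output for the code in our notebook.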

The final testing code converts the loaded image to the NumPy array format.

from tensorflow.keras.preprocessing.image import img_to_array

np_array = img_to_array(img)

print(np_array.dtype)

print(np_array.shape)

The code uses the img_to_array function to get a NumPy array from the image and then prints the array’s properties. Note that the array elements are of type float32 and that the array has three dimensions (height, width, and channels), so it is a three-dimensional tensor. The TensorFlow framework uses such three-dimensional tensors as a common input for convolutional neural networks (CNNs) when solving problems of image classification, object detection, and recognition.
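In practice, Keras models consume images in batches, so a single three-dimensional image array is usually given a leading batch dimension before it is passed to a network. The sketch below shows this with plain NumPy; the 28x28 zero-filled array is a hypothetical stand-in for the output of img_to_array, and the division by 255 is a common but optional scaling step.

```python
import numpy as np

# Stand-in for img_to_array output: one grayscale image, (height, width, channels).
np_array = np.zeros((28, 28, 1), dtype="float32")

# Add a leading batch dimension and scale pixel values to the range [0, 1].
batch = np.expand_dims(np_array, axis=0) / 255.0

print(batch.shape, batch.dtype)
```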

Conclusion

As we’ve discovered here, the Anaconda Navigator desktop application enables us to easily create and manage Python environments and provides access to the optimized Intel packages through its distribution channels. Intel develops specialized software for DL, including TensorFlow extensions and the oneDNN library, which boost the speed of DNN models. A DL environment built with these Intel packages gives you software optimized for the latest processor architectures. Moreover, we can update the environment with little effort and stay at the forefront of the DL industry.
