Fine-tuning VGG16 to Classify Clothing

In this article, we show you how to fine-tune VGG16 to recognize what people are wearing.

Introduction

The availability of datasets like DeepFashion opens up new possibilities for the fashion industry. In this series of articles, we’ll showcase a deep learning system that can help the fashion design industry better understand customers’ needs.

In this project, we’ll use:

- Jupyter Notebook as the IDE

- Libraries:

- A custom subset of the DeepFashion dataset — relatively small to reduce the computational and memory overhead

We are assuming that you are familiar with the concepts of deep learning, as well as with Jupyter Notebooks and TensorFlow. If you’re new to Jupyter Notebooks, start with this tutorial. You are welcome to download the project code.

In the previous article, we showed you how to load the DeepFashion dataset, and how to restructure the VGG16 model to fit our clothing classification task. In this article, we’ll train VGG16 to classify 15 different clothing categories and evaluate the model performance.

Training VGG16

Transfer learning for VGG16 starts with freezing the model weights that were obtained by training the model on a huge dataset such as ImageNet. These learned weights and filters give the network strong feature extraction capabilities, which will help boost its performance when it is trained to classify clothing categories. Hence, only the fully connected (FC) layers will be trained, with a low learning rate such as 0.001, while the feature extraction part of the model stays frozen. Let’s freeze the feature extraction layers by setting their trainable attribute to False:

for layer in conv_model.layers:
    layer.trainable = False

Now, we can compile our model while selecting the learning rate (0.001) and optimizer (Adamax):

# learning_rate replaces the deprecated lr argument name
full_model.compile(loss='categorical_crossentropy',
                   optimizer=keras.optimizers.Adamax(learning_rate=0.001),
                   metrics=['acc'])
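To make the loss choice concrete, here is a minimal sketch of what categorical cross-entropy computes for a single image, assuming a one-hot label and softmax probabilities. The function name and the three-class values are made up for illustration; they are not part of the project code, and Keras applies the same idea averaged over each batch.

```python
import math

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    # Clip predictions so that log(0) never occurs.
    clipped = [min(max(p, eps), 1.0 - eps) for p in y_pred]
    return -sum(t * math.log(p) for t, p in zip(y_true, clipped))

# Hypothetical three-class example: the true class is index 1.
y_true = [0.0, 1.0, 0.0]
confident = [0.05, 0.90, 0.05]   # most probability on the correct class
unsure = [0.40, 0.30, 0.30]      # probability spread across classes

loss_confident = categorical_crossentropy(y_true, confident)
loss_unsure = categorical_crossentropy(y_true, unsure)
print(f"confident: {loss_confident:.3f}, unsure: {loss_unsure:.3f}")
# prints "confident: 0.105, unsure: 1.204"
```

The loss depends only on the probability assigned to the true class, so it falls toward zero as the network becomes more confident in the correct category.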

After compiling, we can start model training using the fit_generator function, since we used ImageDataGenerator to load our data (in TensorFlow 2.1 and later, fit accepts generators directly and fit_generator is deprecated). We will train and validate our network on train_dataset and val_dataset, respectively. We’ll train for three epochs, but this number can be increased depending on the network performance.

history = full_model.fit_generator(
    train_dataset,
    validation_data=val_dataset,
    workers=0,
    epochs=3,
)

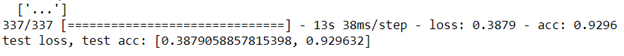

Running the above code will result in the following output:

Now, to plot the learning and loss curves for the network, let’s add the plot_history function:

import matplotlib.pyplot as plt

def plot_history(history, yrange):
    '''Plot loss and accuracy as a function of the epoch,
    for the training and validation datasets.
    '''
    acc = history.history['acc']
    val_acc = history.history['val_acc']
    loss = history.history['loss']
    val_loss = history.history['val_loss']

    # One x-axis point per completed epoch
    epochs = range(len(acc))

    # Plot training and validation accuracy per epoch
    plt.plot(epochs, acc, label='Training')
    plt.plot(epochs, val_acc, label='Validation')
    plt.title('Training and validation accuracy')
    plt.ylim(yrange)
    plt.legend()

    # Plot training and validation loss per epoch, in a second figure
    plt.figure()
    plt.plot(epochs, loss, label='Training')
    plt.plot(epochs, val_loss, label='Validation')
    plt.title('Training and validation loss')
    plt.legend()

    plt.show()

plot_history(history, yrange=(0.9,1))

This function will generate these two plots:
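The history.history dictionary that plot_history reads can also be summarized numerically, which helps when deciding whether three epochs were enough. The sketch below is only an illustration: summarize_history is a hypothetical helper, and the accuracy values are invented stand-ins for a real training run.

```python
def summarize_history(hist):
    """Return the best validation epoch and the train/validation accuracy gap there."""
    val_acc = hist['val_acc']
    # Index of the epoch with the highest validation accuracy (first one on ties).
    best = max(range(len(val_acc)), key=lambda i: val_acc[i])
    return {
        'best_epoch': best + 1,  # report epochs as 1-based
        'val_acc': val_acc[best],
        'gap': hist['acc'][best] - val_acc[best],
        'still_improving': best == len(val_acc) - 1,
    }

# Hypothetical values standing in for history.history after three epochs.
example = {
    'acc': [0.80, 0.90, 0.95],
    'val_acc': [0.85, 0.90, 0.92],
}
summary = summarize_history(example)
print(summary)
```

In the notebook, you would pass history.history instead of the example dictionary. If still_improving is true, the best validation score came in the last epoch, which suggests that more epochs may help; a large positive gap hints at overfitting.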

Evaluating VGG16 on New Images

Our network performed well during training. So it should also perform well when tested on images of clothes that it has not seen before, right? Let’s check this on our test set.

First, let’s load the test set, and then pass the test images to the model using the model.evaluate function to measure the network accuracy.

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Path to the test images on the author's machine; point this at your own copy
test_dir = r'C:\Users\abdul\Desktop\ContentLab\P2\DeepFashion\Test'

test_datagen = ImageDataGenerator()
test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(224, 224),
    batch_size=3,
    class_mode='categorical')

# X_test, y_test = next(test_generator)

# evaluate returns the loss followed by the compiled metrics (here, accuracy)
test_results = full_model.evaluate(test_generator)
print("test loss, test acc:", test_results)

The network appears to be well trained, with no sign of overfitting: it achieved an accuracy of 92% on the test set.
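With 15 categories, a single accuracy figure can hide classes that the model handles poorly. As a hedged sketch, the helper below computes accuracy per class from two plain lists of class indices. The per_class_accuracy function and the sample labels are hypothetical. In the notebook, the predictions could come from taking the argmax of full_model.predict outputs, provided the test generator is created with shuffle=False so that predictions line up with the true labels.

```python
from collections import defaultdict

def per_class_accuracy(y_true, y_pred):
    """Map each class index to the fraction of its samples predicted correctly."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    return {c: correct[c] / total[c] for c in sorted(total)}

# Hypothetical labels for illustration only (three classes, nine images).
y_true = [0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 2, 0, 2, 2]

accuracy_by_class = per_class_accuracy(y_true, y_pred)
for class_index, acc in accuracy_by_class.items():
    print(f"class {class_index}: {acc:.2f}")
```

A class whose accuracy falls well below the overall figure is a good candidate for more training images or closer inspection.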

Next Steps

In the next article, we’ll evaluate VGG16 using real images taken by a phone camera. Stay tuned!