Clang Rocks!


In this article, we will explore the power of Clang's design, which can help you develop many kinds of tools.

Four years ago, when we began developing CppDepend, we needed a C/C++ parser. Our first reflex was to use GCC. In the beginning we were very excited to integrate it, but after a few weeks we decided to abandon it for the following reasons:

- GCC is monolithic, and it's difficult to isolate just the front-end parser.

- GCC doesn't support Microsoft extensions.

- The GPL license is restrictive, and we couldn't use it in our commercial product.

Clang was another option, but at that time it was not widely used, and we didn't know whether it would be actively developed.

Last year, for our major release 3.0, we decided to replace our C/C++ parser to get more reliable results, so we checked Clang to see how it had evolved. We were very surprised: it implemented almost all of the new C++11 features and had become very popular.

After a few days of inspecting its capabilities, we decided to use it.

Clang Design

Like many other compilers, Clang has a three-phase design:

- The front end, which parses the source code, checks it for errors, and builds a language-specific Abstract Syntax Tree (AST) to represent the input code.

- The optimizer, which optimizes an intermediate representation generated from that AST (in Clang's case, LLVM IR).

- The back end, which generates the final code executed by the machine; it depends on the target.
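To make the three phases concrete, here is a minimal toy sketch, assuming a tiny expression language with integers, variables, `+` and `*`. Everything in it (`Parser`, `fold`, `emit`, `compile`, and the stack-machine output) is hypothetical and unrelated to Clang's actual code; for brevity, the tree built by the front end also plays the role of the intermediate representation.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>

// Toy three-phase compiler for expressions such as "2 * 3 + x".
// Hypothetical names; not Clang or LLVM code.
struct Node {
    char op = 0;                 // '+' or '*'; 0 marks a leaf
    std::string leaf;            // number or variable name (leaves only)
    std::unique_ptr<Node> lhs, rhs;
};
using NodePtr = std::unique_ptr<Node>;

// Phase 1, front end: recursive-descent parser ('*' binds tighter than '+').
class Parser {
public:
    explicit Parser(std::string src) : s_(std::move(src)) {}
    NodePtr parse() { return sum(); }

private:
    std::string s_;
    std::size_t i_ = 0;

    void skip() { while (i_ < s_.size() && s_[i_] == ' ') ++i_; }

    NodePtr leaf() {
        skip();
        auto n = std::make_unique<Node>();
        while (i_ < s_.size() && std::isalnum(static_cast<unsigned char>(s_[i_])))
            n->leaf += s_[i_++];
        return n;
    }

    // Parses "next (op next)*" as a left-associative chain.
    NodePtr binary(char op, NodePtr (Parser::*next)()) {
        NodePtr left = (this->*next)();
        for (skip(); i_ < s_.size() && s_[i_] == op; skip()) {
            ++i_;
            auto n = std::make_unique<Node>();
            n->op = op;
            n->lhs = std::move(left);
            n->rhs = (this->*next)();
            left = std::move(n);
        }
        return left;
    }
    NodePtr product() { return binary('*', &Parser::leaf); }
    NodePtr sum() { return binary('+', &Parser::product); }
};

bool isNumber(const Node& n) {
    return n.op == 0 && !n.leaf.empty() &&
           std::isdigit(static_cast<unsigned char>(n.leaf[0]));
}

// Phase 2, optimizer: fold operations whose operands are both constants.
void fold(Node& n) {
    if (n.op == 0) return;
    fold(*n.lhs);
    fold(*n.rhs);
    if (isNumber(*n.lhs) && isNumber(*n.rhs)) {
        long a = std::stol(n.lhs->leaf), b = std::stol(n.rhs->leaf);
        n.leaf = std::to_string(n.op == '+' ? a + b : a * b);
        n.op = 0;
        n.lhs.reset();
        n.rhs.reset();
    }
}

// Phase 3, back end: emit code for a hypothetical stack machine.
void emit(const Node& n, std::string& out) {
    if (n.op == 0) { out += "push " + n.leaf + "\n"; return; }
    assert(n.lhs && n.rhs);
    emit(*n.lhs, out);
    emit(*n.rhs, out);
    out += (n.op == '+') ? "add\n" : "mul\n";
}

std::string compile(const std::string& src) {
    NodePtr tree = Parser(src).parse();  // front end
    fold(*tree);                          // optimizer
    std::string code;
    emit(*tree, code);                    // back end
    return code;
}
```

For example, `compile("2 * 3 + x")` folds `2 * 3` into `6` before emission, producing `push 6`, `push x`, `add`. Each phase only depends on the data structure handed over by the previous one, which is what allows the phases to be swapped independently.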

What's the Difference Between Clang and Other Compilers?

The most important difference in its design is that Clang is based on LLVM. The idea behind LLVM is to use the LLVM Intermediate Representation (IR), which is somewhat like Java bytecode.

LLVM IR is designed to host mid-level analyses and transformations that you find in the optimizer section of a compiler. It was designed with many specific goals in mind, including supporting lightweight runtime optimizations, cross-function/interprocedural optimizations, whole program analysis, and aggressive restructuring transformations, etc. The most important aspect of it, though, is that it is itself defined as a first class language with well-defined semantics.

With this design, a large part of the compiler can be reused to build other compilers: for example, you can replace only the front end to support another language.
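The payoff of a shared IR can be sketched in a few lines. In the toy below, all names are hypothetical and the IR is a made-up stack-machine instruction list rather than LLVM IR. Two front ends for two invented syntaxes lower into the same IR, so a single back end (here an interpreter instead of a code generator) serves both.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical shared IR: a tiny stack-machine instruction list.
enum class Op { Push, Add };
struct Inst {
    Op op;
    long value;  // used by Push only
};
using IR = std::vector<Inst>;

// Front end for invented language A: "1 + 2 + 3".
IR frontEndInfix(const std::string& src) {
    std::istringstream in(src);
    IR ir;
    long n = 0;
    std::string plus;
    if (in >> n) ir.push_back({Op::Push, n});
    while (in >> plus >> n) {  // consumes "+" and the next number
        ir.push_back({Op::Push, n});
        ir.push_back({Op::Add, 0});
    }
    return ir;
}

// Front end for invented language B: "sum 1 2 3".
IR frontEndPrefix(const std::string& src) {
    std::istringstream in(src);
    std::string keyword;
    in >> keyword;  // expected to be "sum"; not validated in this sketch
    IR ir;
    long n = 0;
    bool first = true;
    while (in >> n) {
        ir.push_back({Op::Push, n});
        if (!first) ir.push_back({Op::Add, 0});
        first = false;
    }
    return ir;
}

// A single back end shared by both front ends: an interpreter for the IR.
long backEndEvaluate(const IR& ir) {
    std::vector<long> stack;
    for (const Inst& inst : ir) {
        if (inst.op == Op::Push) {
            stack.push_back(inst.value);
        } else {
            assert(stack.size() >= 2);
            long rhs = stack.back();
            stack.pop_back();
            stack.back() += rhs;
        }
    }
    assert(stack.size() == 1);
    return stack.back();
}
```

Both `backEndEvaluate(frontEndInfix("1 + 2 + 3"))` and `backEndEvaluate(frontEndPrefix("sum 1 2 3"))` yield 6. Supporting a third language would mean writing only a third front-end function; the back end stays untouched.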

Let's explore Clang's design internals using CppDepend.

Front End

Clang is designed to be modular, and each compilation phase is handled by several modules. Here are some of the projects involved in the front-end phase:

Like any front end, Clang's needs a lexer, a parser, and semantic analysis.

When the front end runs, here is the sequence of some of the interesting methods it executes.

The ExecuteAction method takes a FrontendAction as its parameter, which specifies the front-end action to execute. FrontendAction is abstract, so implementing a new front-end action means inheriting from it.
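A simplified model of this split can help, assuming nothing about Clang's real signatures beyond what the text describes: the driver only knows an abstract action class, and each concrete action overrides a single hook. The names `ToyFrontendAction`, `ToyDumpAction`, `ToyCountAction` and `runDriver` are hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Simplified model of the driver/action split (hypothetical, not Clang's API).
class ToyFrontendAction {
public:
    virtual ~ToyFrontendAction() = default;

    // Fixed sequence shared by every action; only executeAction varies.
    void run(const std::string& file, std::vector<std::string>& log) {
        log.push_back("begin " + file);
        executeAction(file, log);
        log.push_back("end " + file);
    }

protected:
    virtual void executeAction(const std::string& file,
                               std::vector<std::string>& log) = 0;
};

// Two hypothetical actions plugged into the same driver.
class ToyDumpAction : public ToyFrontendAction {
protected:
    void executeAction(const std::string& file,
                       std::vector<std::string>& log) override {
        log.push_back("dump AST of " + file);
    }
};

class ToyCountAction : public ToyFrontendAction {
protected:
    void executeAction(const std::string& file,
                       std::vector<std::string>& log) override {
        log.push_back("count functions in " + file);
    }
};

// The driver depends only on the abstract base class.
std::vector<std::string> runDriver(ToyFrontendAction& action,
                                   const std::vector<std::string>& inputs) {
    std::vector<std::string> log;
    for (const std::string& file : inputs) {
        assert(!file.empty());
        action.run(file, log);
    }
    return log;
}
```

This is the template-method pattern: the base class fixes the surrounding sequence, so adding a new action never requires touching the driver.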

To discover all the FrontendAction classes implemented by Clang, we can search for every class that inherits from it directly or indirectly:

from t in Types
let depth0 = t.DepthOfDeriveFrom("clang.FrontendAction")
where depth0 >= 0 orderby depth0
select new { t, depth0 }
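To make the query's filter concrete, here is a hedged sketch of what a derivation-depth search computes. It assumes `DepthOfDeriveFrom` returns 0 for the base type itself, the number of inheritance levels for a subclass, and a negative value otherwise; this is consistent with the `depth0 >= 0` and `depth0 == 1` filters used in this article but is not taken from CppDepend's documentation. The hierarchy is typed in by hand for illustration and covers single inheritance only.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical single-inheritance hierarchy: class name -> direct base class.
// Assumed to be acyclic.
using Hierarchy = std::map<std::string, std::string>;

// Assumed semantics: 0 if t is base itself, k if base is k levels above t,
// -1 if t does not derive from base.
int depthOfDeriveFrom(const Hierarchy& h, std::string t, const std::string& base) {
    for (int depth = 0;; ++depth) {
        if (t == base) return depth;
        auto it = h.find(t);
        if (it == h.end()) return -1;  // reached a root without meeting base
        t = it->second;
    }
}

// Rough equivalent of: where depth0 >= 0 orderby depth0 select new { t, depth0 }
std::vector<std::pair<std::string, int>> derivedFrom(const Hierarchy& h,
                                                     const std::string& base) {
    std::set<std::string> types;  // every class mentioned in the hierarchy
    for (const auto& [derived, parent] : h) {
        types.insert(derived);
        types.insert(parent);
    }
    std::vector<std::pair<std::string, int>> rows;
    for (const std::string& t : types) {
        int depth = depthOfDeriveFrom(h, t, base);
        if (depth >= 0) rows.emplace_back(t, depth);
    }
    std::stable_sort(rows.begin(), rows.end(),
                     [](const auto& a, const auto& b) { return a.second < b.second; });
    return rows;
}
```

With a hand-entered chain `ASTDumpAction -> ASTFrontendAction -> FrontendAction`, `derivedFrom(h, "FrontendAction")` lists the three classes at depths 0, 1 and 2.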

Many front-end actions are available; for example, ASTDumpAction dumps the AST without generating the final executable. What's interesting about this design is that we can plug in a custom FrontendAction easily: we just implement a new one, without changing much of the existing Clang source code, which makes merging with new Clang versions very easy.

Almost all front-end actions derive from ASTFrontendAction, which means that they work on the generated AST.

How can we process the AST?

Each ASTFrontendAction creates one or more ASTConsumers. ASTConsumer is also meant to be subclassed: we implement our own AST consumer for our specific needs, and the FrontendAction invokes it as shown in the following graph.
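The relationship between an action and its consumers can be modeled as follows. This is a simplified, hypothetical model rather than Clang's API: the "translation unit" is just a list of declarations, the action's only job is to create a consumer, and the driver feeds that consumer each top-level declaration before signaling the end of the translation unit. `MultiplexToyConsumer` shows how one action can serve several consumers at once; Clang has its own `MultiplexConsumer` class for that purpose.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Simplified model (hypothetical): a translation unit is a list of decls.
struct Decl { std::string kind, name; };

class ToyASTConsumer {
public:
    virtual ~ToyASTConsumer() = default;
    virtual void handleTopLevelDecl(const Decl&) {}  // default: ignore
    virtual void handleTranslationUnit() {}          // called once at the end
};

// Forwards every callback to several consumers ("one or more").
class MultiplexToyConsumer : public ToyASTConsumer {
public:
    explicit MultiplexToyConsumer(std::vector<std::unique_ptr<ToyASTConsumer>> cs)
        : consumers_(std::move(cs)) {}
    void handleTopLevelDecl(const Decl& d) override {
        for (auto& c : consumers_) c->handleTopLevelDecl(d);
    }
    void handleTranslationUnit() override {
        for (auto& c : consumers_) c->handleTranslationUnit();
    }
private:
    std::vector<std::unique_ptr<ToyASTConsumer>> consumers_;
};

// A custom consumer written for a specific need: counting functions.
class FunctionCounter : public ToyASTConsumer {
public:
    explicit FunctionCounter(int& out) : out_(out) {}
    void handleTopLevelDecl(const Decl& d) override {
        if (d.kind == "function") ++count_;
    }
    void handleTranslationUnit() override { out_ = count_; }
private:
    int& out_;
    int count_ = 0;
};

// The action creates the consumer; execute() plays the driver's role.
class ToyASTFrontendAction {
public:
    virtual ~ToyASTFrontendAction() = default;
    virtual std::unique_ptr<ToyASTConsumer> createASTConsumer() = 0;
    void execute(const std::vector<Decl>& tu) {
        std::unique_ptr<ToyASTConsumer> consumer = createASTConsumer();
        assert(consumer);
        for (const Decl& d : tu) consumer->handleTopLevelDecl(d);
        consumer->handleTranslationUnit();
    }
};

class CountFunctionsAction : public ToyASTFrontendAction {
public:
    explicit CountFunctionsAction(int& out) : out_(out) {}
    std::unique_ptr<ToyASTConsumer> createASTConsumer() override {
        return std::make_unique<FunctionCounter>(out_);
    }
private:
    int& out_;
};
```

The action never inspects declarations itself; all analysis lives in the consumer, which is why a tool can often be written by adding just one action class and one consumer class.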

Let's search for all the classes that derive directly from ASTConsumer:

from t in Types
let depth0 = t.DepthOfDeriveFrom("clang.ASTConsumer")
where depth0 == 1
select new { t, depth0 }

As mentioned before, LLVM's power comes from working with IR, and to generate that IR we need to traverse the AST. CodeGenerator is the ASTConsumer subclass responsible for generating the IR, and interestingly, this processing is isolated in a separate project named ClangCodeGen.

Here are some of the classes involved in generating the LLVM IR:

In our case, we only needed the front-end parser, and as mentioned before, it is very easy to customize: we just added our own FrontendAction and ASTConsumer.

Optimizer

To explain this phase, I can't do better than quote Chris Lattner, the father of LLVM, from this post:

“To give some intuition for how optimizations work, it is useful to walk through some examples. There are lots of different kinds of compiler optimizations, so it is hard to provide a recipe for how to solve an arbitrary problem. That said, most optimizations follow a simple three-part structure:

- Look for a pattern to be transformed.

- Verify that the transformation is safe/correct for the matched instance.

- Do the transformation, updating the code.

The optimizer reads LLVM IR in, chews on it a bit, then emits LLVM IR, which hopefully will execute faster. In LLVM (as in many other compilers), the optimizer is organized as a pipeline of distinct optimization passes each of which is run on the input and has a chance to do something. Common examples of passes are the inliner (which substitutes the body of a function into call sites), expression reassociation, loop invariant code motion, etc. Depending on the optimization level, different passes are run: for example at -O0 (no optimization) the Clang compiler runs no passes, at -O3 it runs a series of 67 passes in its optimizer (as of LLVM 2.8).”
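Lattner's three-part structure and the idea of a level-dependent pass pipeline can be illustrated together with a toy sketch. The instruction format, the two passes and the `optimize` driver are all hypothetical; this is neither LLVM IR nor LLVM's pass manager. Each pass looks for a pattern, checks that the rewrite is safe, and only then transforms the instruction; the pipeline is empty at level 0, mirroring the -O0 behavior described in the quote.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Toy instruction "dst = op src, imm" (hypothetical format, not LLVM IR).
struct Inst {
    std::string op, dst, src;
    long imm;
};
using Function = std::vector<Inst>;

class ToyPass {
public:
    virtual ~ToyPass() = default;
    virtual bool run(Function& f) = 0;  // returns true if f was changed
};

// mul x, 2^k  ->  shl x, k  (valid for wrapping two's-complement arithmetic)
class StrengthReduction : public ToyPass {
public:
    bool run(Function& f) override {
        bool changed = false;
        for (Inst& i : f) {
            if (i.op != "mul") continue;                             // 1. pattern
            if (i.imm <= 0 || (i.imm & (i.imm - 1)) != 0) continue;  // 2. safe only for powers of two
            long k = 0;
            while ((1L << k) != i.imm) ++k;
            i.op = "shl";                                            // 3. transform
            i.imm = k;
            changed = true;
        }
        return changed;
    }
};

// add x, 0  ->  mov x
class AddZeroElimination : public ToyPass {
public:
    bool run(Function& f) override {
        bool changed = false;
        for (Inst& i : f) {
            if (i.op != "add") continue;  // 1. pattern
            if (i.imm != 0) continue;     // 2. verify
            i.op = "mov";                 // 3. transform (imm is now unused)
            changed = true;
        }
        return changed;
    }
};

// The pipeline depends on the optimization level; level 0 runs no passes.
std::vector<std::unique_ptr<ToyPass>> buildPipeline(int optLevel) {
    std::vector<std::unique_ptr<ToyPass>> passes;
    if (optLevel >= 1) {
        passes.push_back(std::make_unique<AddZeroElimination>());
        passes.push_back(std::make_unique<StrengthReduction>());
    }
    return passes;
}

void optimize(Function& f, int optLevel) {
    for (auto& pass : buildPipeline(optLevel)) pass->run(f);
}
```

The safety check is what keeps the transformation correct: `mul x, 6` matches the pattern too, but since 6 is not a power of two, the strength-reduction pass leaves it alone.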

To discover the passes in LLVMCore, we can search for classes inheriting from the Pass class:

from t in Types
let depth0 = t.DepthOfDeriveFrom("llvm.Pass")
where t.ParentProject.Name == "LLVMCore" && depth0 >= 0 orderby depth0
select new { t, depth0 }

Of course, many other passes exist in the other LLVM modules.

Back End

Like the other phases, the back end, which is responsible for generating output for a specific target, is very modular in Clang. Take, for example, LLVMX86Target, the module that generates code for the x86 target.
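The modularity described here can be sketched as an abstract target interface plus a factory keyed by target name. Everything below is hypothetical and far smaller than LLVM's real target infrastructure: the input is a single add instruction on two virtual registers, and the register choices are arbitrary, although the emitted strings are valid Intel-syntax x86 and ARM instructions.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>

// Target-independent input: "dst = dst + src" on virtual registers 0 and 1.
struct AddInst { int dst, src; };

class ToyTarget {
public:
    virtual ~ToyTarget() = default;
    virtual std::string emitAdd(const AddInst& i) const = 0;
};

class ToyX86 : public ToyTarget {
    const char* reg(int r) const { return r == 0 ? "eax" : "ebx"; }
public:
    std::string emitAdd(const AddInst& i) const override {
        return std::string("add ") + reg(i.dst) + ", " + reg(i.src);  // Intel syntax
    }
};

class ToyARM : public ToyTarget {
    std::string reg(int r) const { return "r" + std::to_string(r); }
public:
    std::string emitAdd(const AddInst& i) const override {
        return "add " + reg(i.dst) + ", " + reg(i.dst) + ", " + reg(i.src);
    }
};

// Factory keyed by target name; adding a back end means adding one entry.
std::unique_ptr<ToyTarget> createTarget(const std::string& name) {
    static const std::map<std::string, std::function<std::unique_ptr<ToyTarget>()>> registry{
        {"x86", [] { return std::make_unique<ToyX86>(); }},
        {"arm", [] { return std::make_unique<ToyARM>(); }},
    };
    auto it = registry.find(name);
    if (it == registry.end()) throw std::invalid_argument("unknown target: " + name);
    return it->second();
}
```

Code upstream of `createTarget` never names a concrete target, so supporting a new architecture touches only the new class and one registry entry.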

Some Other Design Choices

Low coupling:

Low coupling is generally a sign of good design because it makes an application more flexible. In Clang/LLVM, many classes are abstract, which lets client code depend on interfaces rather than on concrete implementations:

from t in Types where t.IsAbstract select new { t }

Design patterns:

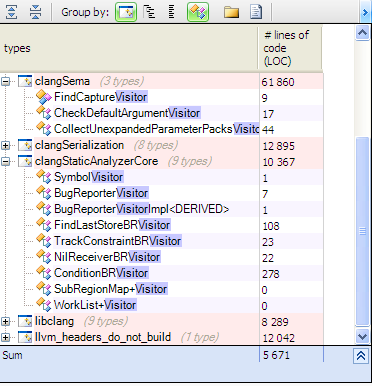

Many design patterns are used, such as factory and visitor.
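As an illustration of the visitor pattern, here is a simplified sketch in the spirit of Clang's `RecursiveASTVisitor`, which also relies on the curiously recurring template pattern (CRTP) so that a tool only defines the visit hooks it needs. The node type and the hook names (`visitFunction`, `visitVariable`) are hypothetical, not Clang's.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Toy AST node (hypothetical; Clang's AST is far richer).
struct ToyNode {
    enum Kind { TranslationUnit, Function, Variable } kind;
    std::string name;
    std::vector<ToyNode> children;
};

// CRTP base: walks the whole tree and calls Derived's visit hooks.
// Default hooks do nothing and return true ("keep going").
template <typename Derived>
class ToyRecursiveVisitor {
public:
    bool traverse(const ToyNode& n) {
        if (!dispatch(n)) return false;  // a hook asked to stop
        for (const ToyNode& c : n.children)
            if (!traverse(c)) return false;
        return true;
    }
    bool visitFunction(const ToyNode&) { return true; }
    bool visitVariable(const ToyNode&) { return true; }

private:
    Derived& self() { return static_cast<Derived&>(*this); }
    bool dispatch(const ToyNode& n) {
        switch (n.kind) {
            case ToyNode::Function: return self().visitFunction(n);
            case ToyNode::Variable: return self().visitVariable(n);
            default: return true;
        }
    }
};

// A tool shadows only the hooks it cares about; the call is resolved at
// compile time, with no virtual dispatch.
class FunctionNameCollector : public ToyRecursiveVisitor<FunctionNameCollector> {
public:
    std::vector<std::string> names;
    bool visitFunction(const ToyNode& n) {
        names.push_back(n.name);
        return true;
    }
};
```

Traversal logic lives once in the base template, while each analysis is a small class; this separation is what makes the visitor convenient for writing many independent tools over the same AST.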

Conclusion

Clang has become very popular because it offers many advantages to developers who use it to compile their code, and I can confirm that it also has many advantages for developers who want to create static analysis tools, refactoring tools, or any other tool that works on source code.

So, one piece of advice: make it your first choice if you want to develop such tools. It rocks!

Filed under: C++, CodeProject, Design
