Let's Build a Robot!


Are you a developer? ... then you can build a robot...

Introduction

Only the most isolated technophobe nomads wandering in the desert for the past few years will have failed to notice the surge and burst of advancements in the amazing technology that surrounds us. This is not only in the electronic and digital space, but in almost every area you look at... for example, medicine, physics, heavy industry. It's like the concept of Moore's law is at work everywhere!

The speed of innovation seems to increase by the day, and critically, technologies that were previously only available to those with really specialist knowledge, are now within the grasp of the ever humble mere mortal developer (that's me, and, if you are reading this column, possibly you!). I have been playing with some IoT (Internet of Things) technology for a while now, and like I think most people, haven't really got beyond the 'it's a toy/bit of fun' stage ... by that I mean - great, we get our new RaspberryPI or Intel board in the post, we are all excited, and then we make ... well, the same as the majority of others ... a media center or something similar. And that's the end of our IoT adventure... or is it? ... I'm an engineer, I create and build things on an Internet scale, I have an itch to scratch, and I'm bored with my media center. So recently I did what comes naturally when I am bored ... I ordered more stuff :)

Note that this article is the starting point for a robot building adventure - it's not the finished project. However, I believe there is enough conceptual information provided here to enable you to go off and start building in the morning if you had the time available! As I progress the project, I will build out another article (or two) that gives specific instruction to enable you to team up and join my robot army :P

Hatching the Idea

I recently took delivery of various electronic parts to enable me to build a toy vehicle/car/whatever (it has wheels, it moves!), based on the RaspberryPI ... that was cool enough, and quite doable. I was going to add a camera as well, and let it stream images back to a phone or something ... why? ... well, I originally had a great concept about this autonomous thing wandering around the garden chasing after the family chickens and finding out where they laid their eggs....

If you have chickens, or know someone with chickens, you will realize that it's a big problem when all of the family chickens (in our case, six of them), decide to go awol at laying time, and you can't find where they laid their eggs for four days in a row. Believe me, that's a LOT of eggs to eat! Anyway - let's park that idea for the moment.

We've all seen various household and industrial/commercial robots come to the market. They are generally big budget, expensive things that are mostly out of reach financially, or so limited in their functionality as to be almost useless. Interesting, and indeed fascinating, but quite useless. At the end of May of this year (2016), Asus launched its own first stab at a household robot, and I thought - wow, that's cute, and actually, not that difficult to build... sure, what we might build at home may not be as polished or slick as the cool thing Asus sells, but it sure as heck could have similar functionality, if not more!

So - cute little guy, eh? ... he has no arms or means to do anything physical - he (she/it?) can move around, and interacts with you using its head ... ok, but it's not a head - it's a combination of a tablet style screen, microphone and camera. The advertisement that Asus have for their robot is quite cringe-worthy, but going through it does give a good feel for the capabilities and functionality of the device. From looking at that and reading a little, here is what my understanding is that it can do:

- move around your home (on its own)

- avoid obstacles

- 'look' using its onboard camera, and identify certain objects

- 'communicate' with you using its 'voice' (onboard speaker)

- 'listen' to you and your 'commands' using its 'ears' (onboard microphone)

- 'show' you things with its face (onboard tablet/screen)

Clearly if we had the above basic functionality, and backed it up by some onboard and cloud based technology, then this cute little robot can start to be quite useful. So let's talk about how we humble developers, who perhaps build websites or enterprise stuff for a living, can even *start* to approach the mad crazy world of robotics and electronics... it may look scary, but it's not really... let's get started and see how we can make it happen...

Breaking It Down to Basics

I think it's safe to say that most developers would be able to build their own computer. I mean these days, it's as easy as point and click ... except it's more like 'push' (into a slot) and (wait for the) click... get a motherboard, sit in the CPU, click in some ram, connect cables from the motherboard to the hard-drive and the power-supply, and you're pretty much ready to go. Once you install the operating system, as developers we then spend our time working with APIs of one kind or another, building applications that run on the computer. Well, guess what - working with electronics and building things isn't that much different .. so let's take a look at how things might work, assuming we are really only (kind-of) building a PC! (but it's actually a robot, shhhh!)

1 - The Motherboard

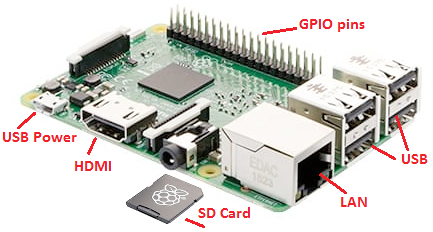

Since we come from the world of developing software on top of an operating system, one of the easiest and most useful pieces of kit in the world of IoT (Internet of Things) to work with is the amazing RaspberryPI (R-PI). This self contained mini computer can run different versions of Linux, and also, amazingly, a version of Windows 10 called Windows IoT Core. You can even buy a RaspberryPI in the Microsoft store - very cool. The RaspberryPI motherboard is powered through a small USB power port. In addition to the power port, there are also other ports that you can use for connectivity to USB devices, like Webcams, WiFi dongles, keyboards, storage, sensors - if it's USB, it should connect. In the image above, you can see a RaspberryPI device and its USB ports. There are four standard sized ports to the rear (right of this image), and if you look at the bottom left of the image, you will see the small USB power port. Right next to the micro-USB power port sits an HDMI port - we can use that to drive a display.

2 - GPIO - The Gateway to Talk to Devices

In addition to the USB slots for communication with devices, the R-PI also has what are called 'GPIO' pins. If you look at the image above, towards the top/back of the board, there is a row of metal spikes sticking up in the air. These are the 'GPIO' pins. They are, literally, metal pins secured in a block of plastic, and connected below that to the motherboard. I've mentioned the letters 'GPIO' a few times - it stands for 'General Purpose Input Output'. These pins are the gateway, if you like, between your computer code on the motherboard, and the devices you decide to connect to the motherboard in the outside world. Very simply, in the same way you can set some CSS to make a DIV hidden (e.g.: set style display:none), you can also send a signal to a pin to say "turn something on, or off...", or 'communicate with X device/component/sensor and send/receive instructions/information to/from it'.

To make things real - here is some C# code we can run on RaspberryPI running W10-IOT - it simply turns an LED that is attached to GPIO ports, on and off, making it blink:

To turn the LED on - pin.Write(GpioPinValue.Low);

To turn the LED off - pin.Write(GpioPinValue.High);

Here's a small visual to illustrate:

It really is that simple ... sure, guess what, there's an API to learn, but you do that every time you work with a new library or web-service anyway - it's no big deal. (We'll talk about the other messy stuff like resistors and such in a different post). So, if we know that using an API, we can write some simple code, and it sends a signal out over the GPIO or USB, and that can control something on the outside, then we can make the leap that we can use our code for example to tell a small motor that is attached to the motherboard (via the GPIO) to start and stop, thus moving our robot around the place.... suddenly, making a robot seems quite feasible!
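To show the blink idea end to end, here is a rough Python sketch that runs on any machine. It is not the Windows IoT API shown above: `FakePin` is a hypothetical stand-in for a real GPIO pin that simply records what was written to it, and the LED is assumed to be wired active-low, matching the C# lines where `Low` turns it on.

```python
import time

LOW, HIGH = 0, 1  # assumed active-low wiring: LOW = LED on, HIGH = LED off


class FakePin:
    """Hypothetical stand-in for a GPIO pin; it only records what was written."""

    def __init__(self):
        self.history = []

    def write(self, value):
        self.history.append(value)


def blink(pin, times, delay_s=0.0):
    """Turn the LED on and off `times` times, pausing `delay_s` between writes."""
    for _ in range(times):
        pin.write(LOW)   # LED on
        time.sleep(delay_s)
        pin.write(HIGH)  # LED off
        time.sleep(delay_s)


pin = FakePin()
blink(pin, times=3)
print(pin.history)
```

On real hardware, the `FakePin` object would be swapped for whatever pin object your GPIO library provides; the loop itself would stay much the same.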

3 - Collecting Data from the Outside World

Since we now understand that GPIO can send instructions out to connected devices (such as our LED or our stepper motor), it's not a big leap to conceive that we can also get information back from connected devices - and this is where the world of 'sensors' comes into play.

The word gives it away - sensors allow us to sense information about the world. We are already aware of sensors from our mobile smart-phones ... the touch-screen is a sensor, the GPS is a sensor, the accelerometer is a sensor. All these are things that can detect, measure, and report on information in the outside world. I have a great introduction book to sensors 'Make: Sensors: A Hands-On Primer for Monitoring the Real World with Arduino and Raspberry Pi'. It's a great read and worth getting. While there are many more areas, here are some of the areas the book describes that sensors can cover:

- Distance

- Smoke and gas

- Touch

- Movement

- Light

- Acceleration and angular momentum

- Identity

- Electricity

- Sound

- Weather and climate

It's easy to just go out and buy sensors, but it's good to stop and think about them as well, and understand the basics of how they work - this will help you decide which is best for your particular application. Let's look at two, for example.

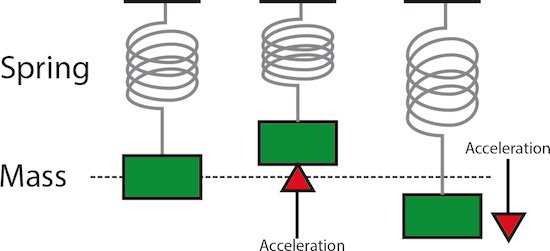

Accelerometer

(Ref: arcbotics)

The accelerometer is a very simple concept. We have a central mass that is connected or suspended in some way to a 'point zero'. When the mass moves (e.g., you shake your phone), then the position of the mass in relation to its point zero changes. When this happens, we can record both the new position and the rate of change. Simplistically, combining the two gives us the rate of acceleration.
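One common textbook way to put numbers on this (a swapped-in model, not necessarily how any particular sensor chip works) is to treat the mass as sitting on a spring. The spring force is proportional to how far the mass has moved, and dividing that force by the mass gives the acceleration. The function name and the sample values below are purely illustrative.

```python
def acceleration_from_displacement(displacement_m, spring_constant_n_per_m, mass_kg):
    """Estimate acceleration (m/s^2) of a spring-suspended mass.

    Hooke's law gives the spring force F = k * x, and Newton's second
    law then gives a = F / m.
    """
    force_n = spring_constant_n_per_m * displacement_m
    return force_n / mass_kg


# illustrative values only: 2 mm of movement, k = 50 N/m, a 10 gram mass
a = acceleration_from_displacement(0.002, 50.0, 0.01)
print(a)
```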

Pressure

There are many different types of pressure sensor - at its most basic, we have two objects that can touch, and make a connection, but are separated by something that keeps them apart. In this example, we have two pieces of metal, held apart using a small piece of rubber. In the default state, there is no connection between the two pieces of metal, so the default state is OFF.

When pressure is applied to the top of the device, the rubber flexes, allowing the two bits of metal to connect, thus creating a circuit. Now the connection between the two pieces of metal is made, and the circuit state is ON.

(Ref: me and ms-paint!)
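In code, a switch like this usually shows up as a stream of ON/OFF readings, and what we tend to care about is the moment it changes from OFF to ON. A minimal sketch of that idea, using a plain list of booleans in place of a real pin (the function name is made up for illustration):

```python
def count_presses(samples):
    """Count OFF -> ON transitions in a sequence of boolean switch readings."""
    presses = 0
    previous = False  # default state is OFF (no contact between the metal pieces)
    for closed in samples:
        if closed and not previous:
            presses += 1  # the circuit has just been made
        previous = closed
    return presses


readings = [False, False, True, True, False, True, False]
print(count_presses(readings))
```

Holding the switch down produces a run of `True` values but only counts once, which is usually what you want from a 'bump' sensor on a robot.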

Sample Sensors

The message here is to think about what you are trying to achieve and then look at what sensors are available to help you. Some sensors work better and indeed differently than others, so investigate your options. This example list of sensors gives you a good idea of what you can get - and it's only the tip of the tip of the iceberg!

For playing around, just do an Inter-web search for 'sensor kit' and you will find many examples. Going through the list of sensors shown below, you will quickly see a number of them that will be helpful in this robot project. For example, the sensors for sound, motion, flame, knock, vibration, gas, temperature and photocell could all be used in my own robot project (ain't this IoT business exciting!).

(Ref: sensors image)

Bringing the Outside in...

I thought it would be good at this stage to have a quick look at how we get data back from our sensors. We have already seen earlier how we can send an instruction to the sensor/device/component by telling an LED to turn ON or OFF. We are used to that kind of thing from a development point of view - issue an instruction, make something happen. Getting data from sensors works in patterns we also know well, either through a function, or a callback/event. The actual implementation of code clearly depends on the sensor device you are using, and the libraries you are using to connect to the device. Here is one small example of connecting to a temperature sensor. In this case, the sensor is identified as a system device, the code opens a connection to the device, reads whatever the device is saying (about the temperature), and closes the connection.

```python
#!/usr/bin/env python3
import glob
import os

# load the kernel modules needed to handle the sensor
os.system('modprobe w1-gpio')
os.system('modprobe w1-therm')

# find the path of a sensor directory that starts with 28
devicelist = glob.glob('/sys/bus/w1/devices/28*')

# append the device file name to get the absolute path of the sensor
devicefile = devicelist[0] + '/w1_slave'

# open the file representing the sensor and read its contents
with open(devicefile, 'r') as fileobj:
    lines = fileobj.readlines()

# print the lines read from the sensor apart from the extra \n chars
print(lines[0][:-1])
print(lines[1][:-1])
```

(Code ref: http://raspberrywebserver.com/gpio/connecting-a-temperature-sensor-to-gpio.html)

In production, you would probably wrap that in a method 'GetTemperature' that returns a single value.
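As a sketch of what that wrapper might look like: a 1-wire temperature sensor of this kind typically reports two lines, the first ending in `YES` when the checksum is good and the second carrying `t=` followed by the temperature in thousandths of a degree Celsius. The function name `parse_temperature` and the sample lines are illustrative assumptions, so check them against what your own sensor prints.

```python
def parse_temperature(lines):
    """Return degrees Celsius from the two lines of a w1_slave file, or None on a bad read."""
    if len(lines) < 2 or not lines[0].strip().endswith('YES'):
        return None  # checksum failed or the read was incomplete
    marker = lines[1].find('t=')
    if marker == -1:
        return None
    # the value after 't=' is in thousandths of a degree Celsius
    return int(lines[1][marker + 2:].strip()) / 1000.0


# illustrative sample of what the sensor file might contain
sample = [
    '72 01 4b 46 7f ff 0e 10 57 : crc=57 YES\n',
    '72 01 4b 46 7f ff 0e 10 57 t=23125\n',
]
print(parse_temperature(sample))
```

Returning `None` on a failed read lets the caller decide whether to retry or carry on, rather than acting on a bogus temperature.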

4 - Semi Autonomous House-Bot

So far, we have explored what we want to do, and seen that by leveraging our existing developer skills and building up things in logical steps, it is reasonable to move from 'interesting concept..' to 'wow, this is possible...'. For me at least, that's exciting, but just one part of the puzzle. Realistically, we have only looked at the initial 'back of a napkin' design. Before proceeding further, we should think about what happens after we stick all of these components and sensors onto a box and call it 'Robot'.

We have established that by hooking up a board like the RaspberryPI/Arduino/Galileo, etc. to external devices and sensors, we can work out how it can move around, sense things, and get from A to B easily enough. What we haven't really looked at however are the bits that make it more than simply a moving equivalent of the 'cheap media center' project. In some ways, the processing we want to do to give our robot 'intelligence' (I use the term very very lightly!) is simple, in others, quite complex. It will involve things like image recognition, connection to web based services, environmental awareness/analysis and voice recognition. I say the robot will be semi autonomous because some things it will manage to do and figure out for itself, but for others, it will have to rely on the support of online services like Cortana. The aim is to maximize what we can do 'on the ground', and make up for what we can't do within the realm of the device itself, by supporting it from the cloud.
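The 'do what you can on the ground, lean on the cloud for the rest' idea can be sketched as a simple dispatcher. Everything named here is hypothetical: `local_skills` is a made-up table of on-board abilities, and `fake_cloud` stands in for whatever online service you end up using.

```python
def handle_request(text, local_skills, cloud_service):
    """Try on-board skills first; hand off to the cloud only if none of them match."""
    for keyword, skill in local_skills.items():
        if keyword in text.lower():
            return 'local', skill(text)
    return 'cloud', cloud_service(text)


# hypothetical on-board skills
local_skills = {
    'stop': lambda text: 'stopping motors',
    'temperature': lambda text: 'reading the temperature sensor',
}


def fake_cloud(text):
    """Stand-in for a remote service; a real one would make a network call."""
    return 'sent to cloud: ' + text


print(handle_request('What is the temperature?', local_skills, fake_cloud))
print(handle_request('Find me a cake recipe', local_skills, fake_cloud))
```

One nice side effect of this shape is that safety-critical commands like 'stop' never depend on the network being up.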

The Shopping List

Since we now have a good grasp of the concepts we need to even approach building a robot, let's look at some of the specific things we need to pull together to make it happen. I'm going to approach this by looking at my reverse-engineered specification requirements from earlier. Remember, I'm going to base this bot on the general concept presented by Zenbo, the Asus bot.

The Motherboard

I am going to use a RaspberryPI as the central piece of my robot. You can choose this as well, or go for the equally capable offerings from other vendors such as Intel. The only reason I am choosing the PI at this particular time is that I have a few hanging around the house so they may as well get used. The PI is also well known, and is supported by Windows IoT so I can try things out using that platform as well as the custom version of Linux that runs on RaspberryPI by default.

The Body

To start with, I will build the bot onto an existing chassis I have. Depending on how things progress, I may quickly have to change this to accommodate different sensors, etc. I do want the bot to be pretty and look nice, but it's the first time I've done this so I am aiming to get the functionality working first, and then come back afterwards and see what I need to do to fit a sleek casing around it. Of course, I might do it the other way around the next time (yes, the bot army cometh!), or I may look to a 3D printer for a custom case solution to fit my needs.

Movement

There are a number of aspects to the robot movement.

- getting around ... we need something to transport the robot itself and make it mobile. There are different options I could look at, including tracks, two wheels with a tail-dragger, or three/four (or more) wheels. As I want the robot to have maximum flexibility, I am going to go for the four wheel option, and do something a bit different. I came across a small controller board called a 'piborg' - it's specifically designed to do the heavy lifting of controlling individual servos (small motors that move the wheels) independently of each other. This is both useful and important when trying to have advanced control of movement of servos. From watching a video on 'Zeroborg', I learned about 'mecanum wheels' - these are a design of wheel that allows for movement in any direction, even sideways! ... watch this video to see the Zeroborg moving sideways and you will get the idea - it's very cool. My choice of parts for moving around will be a combination of a PiBorg controller, sitting on top of a RaspberryPI, that will be connected to four individual servos controlling the ingenious sideways moving mecanum wheels. Combining all of this at the start may be a bit ambitious, so I may start with a standard two wheel and tail-dragger system, but ultimately, the flexibility demonstrated in the Zeroborg video is what I want to achieve. Even the best of plans rarely survive their first outing, so we'll see what happens!

images ref: http://www.robotshop.com/en/3wd-48mm-omni-wheel-mobile-robot-kit.html

http://robu.in/product/a-set-of-100mm-aluminium-mecanum-wheels-basic-bush-type-rollers-4-pieces/

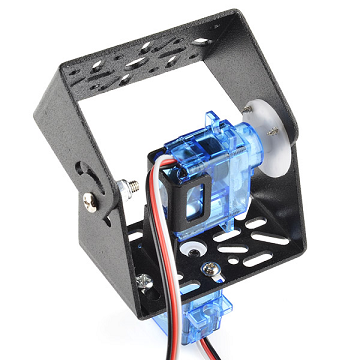
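To give a feel for why driving each wheel independently matters with mecanum wheels, here is a sketch of the usual 'mixing' arithmetic that turns a desired movement into four wheel speeds. The sign convention is an assumption (it depends on how the wheels and rollers are mounted), and the function is not taken from the PiBorg library.

```python
def mecanum_wheel_speeds(forward, strafe, rotate):
    """Mix three motion demands (each -1..1) into four wheel speeds (each -1..1).

    Assumed sign convention: strafe > 0 moves right, rotate > 0 turns clockwise.
    """
    speeds = {
        'front_left': forward + strafe + rotate,
        'front_right': forward - strafe - rotate,
        'rear_left': forward - strafe + rotate,
        'rear_right': forward + strafe - rotate,
    }
    # scale down so that no wheel is asked for more than full speed
    largest = max(1.0, max(abs(s) for s in speeds.values()))
    return {wheel: s / largest for wheel, s in speeds.items()}


# moving purely sideways: diagonal pairs of wheels spin in opposite directions
print(mecanum_wheel_speeds(forward=0, strafe=1, rotate=0))
```

If the robot crabs the wrong way on first test, flipping the sign of `strafe` (or swapping two motor connections) is normally all it takes.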

- Moving the head ... one of the tiny things that makes a robot seem more amenable, is the ability for its 'head' to move or tilt somewhat - this simple thing seems to give us some kind of emotional connection with it. Even without adding movement, simply putting a cartoonish expressive simplistic line drawing on a screen can add 'personality' to a robot.

(Ref: Baxter)

A lot of research has been done in this area, and we seem to 'find cartoonish parodies of humans charming'. If you go too far into making robots look like humans, however, the humans that are interacting with those robots get uneasy. Have a look at this video about the uncanny valley to find out more, interesting stuff.

To get the head moving, I intend to mount it on a fixture that can be controlled by a 'pan and tilt' servo mechanism. You have seen these on CCTV cameras for example. It allows you to control movement in four directions (up, down, left and right) across two axes. This pan and tilt servo bracket from Sparkfun demonstrates the concept clearly. There is a bracket that swivels, and the swivel direction is handled by one of two servos that can act individually, or in unison to give a fluid movement effect.
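The 'in unison' part can be sketched as stepping both servos together along a straight line between two poses. This assumes each servo accepts an angle between 0 and 180 degrees, which is typical for hobby servos but worth checking for yours; the function names are illustrative.

```python
def clamp(angle, low=0.0, high=180.0):
    """Keep an angle inside the range the servo can physically reach (assumed 0-180)."""
    return max(low, min(high, angle))


def sweep(start, end, steps):
    """Yield (pan, tilt) pairs that move both servos together from start to end."""
    (pan0, tilt0), (pan1, tilt1) = start, end
    for i in range(1, steps + 1):
        fraction = i / steps
        yield (clamp(pan0 + (pan1 - pan0) * fraction),
               clamp(tilt0 + (tilt1 - tilt0) * fraction))


# glance from straight ahead to up-and-right in two small moves
path = list(sweep(start=(90, 90), end=(120, 60), steps=2))
print(path)
```

More steps with a short pause between them gives the smoother, more 'alive' head movement; fewer steps gives a snappier, more robotic one.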

Looking Around / Environmental Awareness

Vision/Image Detection

Apart from moving around, we also need to be able to look around. We could do this using a standard component camera, webcam or similar. In the case of this robot, I want to enable it to be smarter than a remote controlled car. I want it to recognize things, and interact intelligently. Is this too much to ask? ... nope, it turns out it's not. Using for example the OpenCV image recognition library hooked into our camera, we can make magic happen. There is a great instructable that describes using OpenCV with a pan and tilt camera to do face tracking. Here is another that demonstrates using OpenCV with a RaspberryPI to carry out face recognition/matching. Finally, Sergio Rojas in his article shows how to use a .NET wrapper on OpenCV to map names to faces in real time.
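Once a library such as OpenCV has found a face, the tracking part is mostly simple arithmetic: work out how far the face is from the middle of the picture and nudge the camera that way. The sketch below deliberately leaves the detection out and only covers that arithmetic. It assumes the face arrives as an (x, y, width, height) box in pixels, and the `gain` value and the sign of the corrections are placeholders that depend on your servos and how they are mounted.

```python
def tracking_correction(face_box, frame_size, gain=0.1):
    """Return (pan_delta, tilt_delta) in degrees that nudges the camera toward the face.

    face_box is (x, y, width, height) in pixels; frame_size is (width, height).
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size
    # offset of the face centre from the frame centre, in pixels
    error_x = (x + w / 2) - frame_w / 2
    error_y = (y + h / 2) - frame_h / 2
    # proportional control: a bigger offset gives a bigger correction
    return gain * error_x, gain * error_y


# a face to the right of, and above, the centre of a 640x480 frame
pan, tilt = tracking_correction((400, 100, 80, 80), (640, 480))
print(pan, tilt)
```

Run on every frame, small corrections like this keep the face drifting back toward the centre of the picture.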

The reason face identification and recognition is important for our robot, is that it will allow it to identify family members and give them personal attention. Another thing we can do with face recognition is identify emotion from facial expressions - also very useful for a home help robot. Of course this is not only about the humans in the life of the robot, it's also about the environment it works within. OpenCV and other libraries are extremely useful in detecting (and helping avoid) obstacles, and identifying objects as well.

Before leaving image recognition behind, I also want to give a nod to another board I am considering using in the project, the OpenMV board. This specialized board is dedicated to machine vision with python. To quote their web-page, 'with OpenMV Cam, the difficult and time-consuming work of machine vision is already done for you – leaving more time for creativity'. I think it's certainly worth some investigation.

Navigation, Obstacle Detection, General Awareness

Navigation can be put in place either by pre-mapping out an area, fixing beacons the robot can navigate by, or by letting the robot toddle along itself and discover its own environment. The latter is a very interesting area to look at, and this article on Robot Cartography with RaspberryPI gives great insight into how it works.
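To get a feel for the 'toddle along and discover' approach, here is a toy simulation: a robot bumps its way around a small grid and remembers what it finds. This is a deliberately simplified illustration, not the method from the cartography article, and the `world` layout and `explore` function are invented for the example.

```python
def explore(world, start, moves):
    """Drive a simulated robot around a grid and build a map of what it finds.

    world is a list of strings ('#' = obstacle, '.' = free); moves is a string
    of N/S/E/W steps. Returns a dict mapping (row, col) to 'free' or 'obstacle'.
    """
    steps = {'N': (-1, 0), 'S': (1, 0), 'E': (0, 1), 'W': (0, -1)}
    known = {start: 'free'}
    row, col = start
    for move in moves:
        d_row, d_col = steps[move]
        target = (row + d_row, col + d_col)
        inside = 0 <= target[0] < len(world) and 0 <= target[1] < len(world[0])
        if inside and world[target[0]][target[1]] == '.':
            known[target] = 'free'
            row, col = target  # the move succeeded
        else:
            known[target] = 'obstacle'  # bumped into something: remember it
    return known


world = ['..#',
         '...']
print(explore(world, start=(0, 0), moves='EESE'))
```

A real robot would replace the `world` lookup with sensor readings, but the bookkeeping (where am I, what did I just learn) is the same idea.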

Most likely, I will start with the first and move onto the second and then third as I make the robot increasingly autonomous. Regardless of which method I use, I will need to add in some sensors that will help with navigation - here are some I will consider:

Detection: We can use image recognition and object detection in software, but sometimes this can be, and needs to be, aided by more specialized sensors. Worth considering for example would be a motion detector and an obstacle avoidance sensor.

Home safety: On the general awareness side of things, I tend to be a bit paranoid about house fires, gas leaks and such like. For this reason, it would be good to incorporate some safety aspects to the robot. I will be implementing a gas sensor, and some kit to handle heat/flame. Flood detection for when *someone* leaves on the bath could also be a good thing.
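A first cut of the home safety check could be as simple as comparing readings against limits and watching for a sudden temperature rise. The limits below are placeholder numbers picked for illustration, not calibrated safety thresholds, and the function name is made up.

```python
def safety_alerts(gas_ppm, temps_c, gas_limit_ppm=1000, rise_limit_c=5.0):
    """Return a list of alert strings for one gas reading and recent temperatures.

    The limits are placeholder values, not calibrated safety thresholds.
    """
    alerts = []
    if gas_ppm > gas_limit_ppm:
        alerts.append('gas level high')
    # a sudden rise between the oldest and newest reading may indicate a fire
    if len(temps_c) >= 2 and temps_c[-1] - temps_c[0] > rise_limit_c:
        alerts.append('temperature rising fast')
    return alerts


print(safety_alerts(gas_ppm=200, temps_c=[21.0, 21.5]))
print(safety_alerts(gas_ppm=1500, temps_c=[21.0, 30.0]))
```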

Communication

So far, we have a pretty good shopping list of technologies that we can bring together to make the robot move around and get a lot of input from its environment. What we haven't touched on yet is how it can communicate with its human overlords <<cue evil laugh!>>. The act of communication is a two way thing - we need input, and we need to be able to give output. We have some of the input covered with a handful of sensors and technology like image recognition libraries, but the glaring input we haven't looked at yet is voice. I will be tackling this from two fronts. First, what can I do offline, onboard on the robot, and second, what needs to be handed off to a cloud based intelligent support service such as Microsoft Cortana. To capture the input, I will start off using the in-built mike on the Webcam I will use - depending on how this turns out, I may or may not have to resort to a standalone mike. A small speaker would also be useful, as would be a screen. The RaspberryPI has two official cameras, one for normal light and the other does Infrared - that might be useful to consider for when the lights go down. One other thing to mention - there is a lot going on here, speakers, cams, screens, external boards - it may be that I need to take a small step up from the standard RaspberryPI to the "Compute Module Development Kit". This is a prototyping kit designed for more heavy experimentation. It has the added bonus of 120 GPIO pins, an HDMI port, a USB port, two camera ports and two display ports, allowing for far more flexibility than the core system itself.

There are a number of different screen options I could use, from something quite basic, up to a high resolution touch-screen. The official Raspberry Pi 7 inch touch-screen display looks interesting as it has direct integration with the RaspberryPI itself, and does not need a separate power supply. The screen will be used for three main things. First, it will be the 'emotive face' of the robot, with some nice animated facial expressions when it is communicating with its people. Second, it will be a display area for showing information (for example, a slick graphic of the weather, or a web browser showing a recipe for a nice cake!). Finally, it will act as an input device to allow the user to use the touch-screen to communicate with the robot when natural language/voice-control is not working, or is simply not suitable to the task at hand.

Speakers are of a similar nature - I may find that something cheap of the USB variety works well, or I may get something that can work in passive mode, perhaps with a booster, that connects directly into the PI Audio jack. The RaspberryPI-Spy has a short article with Python code to test sound output through simple cheap speakers connected to the raspberry-pi audio jack.

Networking is already taken care of - the latest RaspberryPI model has a lot of connectivity already built-in, so no need to worry about needing any extras. The following is supplied as standard with the RaspberryPI version 3.

- 802.11n Wireless LAN

- Bluetooth 4.1

- Bluetooth Low Energy (BLE)

One of the things I will look at once I have the basics working is making use of the data collected from the robot. I envision using the Azure IoT suite to assist me here.

To wind up the communication end of things, let me introduce you to a very cool open source library for voice control called Jasper - it's going to be my starting point for recognizing commands given by the users and communicating back information. Jasper operates very well on the RaspberryPI platform. It can do some basic things offline, has an extensive API, and can also integrate with online speech engines like the one provided by Google. Jasper is a framework that carries out two tasks. Using various hooks, it can convert speech into text, and do the same thing the other way around (text into speech). Jasper has two main functions called 'Modules'. The first of these is the standard module. It is responsible for active interaction with users. In standard module (mode sounds better?!), users initiate a contact with Jasper and ask it to do something. Jasper intercepts the speech, translates it, and carries out the requested action. The second module is notification. Its function is to listen/silently monitor an information stream (for example a twitter feed or email account), and to speak to the user when there is something flagged to report (let's say you tell it to let you know whenever you receive a DM on twitter!). I've done some initial investigation with Jasper and am very much looking forward to making it a cornerstone of my robot project. For a really great overview of using this library from an IoT/home automation point of view, take a few minutes to check out Lloyd Bayley's YouTube video report on Jasper. Lloyd uses Jasper to communicate with his home weather station, tell the time, turn on and off lights - it's really inspiring and well worth a few minutes of your time!
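To give a flavour of what a standard module might look like, here is a rough sketch. As far as the Jasper documentation describes it, a module is a Python file exposing a `WORDS` list plus `isValid` and `handle` functions, with `mic.say` used to speak; treat that shape as an assumption to verify against the version you install. `FakeMic` is a hypothetical stand-in so the sketch can run without Jasper or a microphone.

```python
import re

WORDS = ["LIGHTS"]  # keywords the speech engine should listen for


def isValid(text):
    """Return True if the spoken text looks like a request about the lights."""
    return bool(re.search(r'\blights?\b', text, re.IGNORECASE))


def handle(text, mic, profile):
    """Respond to the request; a real module would also switch the lights here."""
    state = 'off' if re.search(r'\boff\b', text, re.IGNORECASE) else 'on'
    mic.say('Turning the lights ' + state)


class FakeMic:
    """Hypothetical stand-in for Jasper's microphone object, for testing only."""

    def __init__(self):
        self.spoken = []

    def say(self, phrase):
        self.spoken.append(phrase)


mic = FakeMic()
if isValid('turn the lights off please'):
    handle('turn the lights off please', mic, profile={})
print(mic.spoken)
```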

Before we close off the shopping list, let's have a look at where everything might fit (with thanks to Asus for the inspiration!)

The Specification

Right then - we have worked out how the individual parts could hang together and the technology we want to utilize - how about looking at what we want our actual robot friend to do about the house!

Here is a starter list I have put together - I'm sure when I start building and finding out the pros/cons of different things, the list will morph and take on a life of its own.

- Remote 'Check in'

- The ability to use a mobile app or the web, to 'check in' with the robot when I am away from the house, and ask it to take a spin around the premises and check things are ok. For example - I had a business meeting this morning and ironed a shirt before I left - did I plug the iron out? .. Robot could be directed to the appropriate location and give me a camera shot to let me know.

- Perhaps I went out for an hour but got delayed, and wanted to check that the family dog was still doing ok - I could send robot to find fido and check he wasn't eating my favorite shoes!

- Did you leave the kids at home with a list of chores? ... have Robot check they *did empty the dishwasher* and are outside getting fresh air and not "vegging" in front of the TV.

- Remote 'Warning/alert'

- You recall I want to install home safety sensors. If the robot senses gas or the temperature suddenly rises (fire?), then it could contact me and give me a live update with video.

- How about unwanted visitors like a burglar who snipped the cables to your alarm system? ... Robot could video them and live stream an alert to you and the authorities (over mobile gprs of course since the wires were cut!)

- Take a message to...

- Since the robot will have the ability to recognize family members, how about the ability to make it the 'message taker and reminder'. I could say 'Robot, remind <PersonName> to pick me up from the station at 6pm'. Then, even if that household member had their phone turned on silent, or were listening to loud music or <insert reason to NOT pick me up at the station>, the robot could wander through the house, find the person, and give them the message.

- Portable Amazon Alexa

- Yup, you will have no doubt noticed that with the addition of the Jasper library we could start to replicate some of the functionality of the likes of Amazon Alexa or Siri, etc.

- Chicken herding

- This *has* to be done! ... I intend to make at least one version of robot that works in the garden, keeping an eye on the chickens, ensuring they go into roost at night (image detecting and auto-tracking of chickens anyone?!), and of course, keeping an eye on where they lay those eggs!
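Of the ideas above, the 'message taker' is probably the easiest to prototype in software before any hardware arrives. A minimal sketch, assuming some other part of the robot (the face recognition) calls `on_person_recognised` with a name; the class and method names are invented for illustration.

```python
from collections import defaultdict


class MessageBook:
    """Holds messages for household members until the robot finds them."""

    def __init__(self):
        self._pending = defaultdict(list)

    def leave_message(self, person, message):
        """Store a message to be delivered the next time `person` is seen."""
        self._pending[person].append(message)

    def on_person_recognised(self, person):
        """Return (and clear) any messages waiting for the recognised person."""
        return self._pending.pop(person, [])


book = MessageBook()
book.leave_message('Alice', 'Pick me up from the station at 6pm')
print(book.on_person_recognised('Bob'))    # nothing waiting for Bob
print(book.on_person_recognised('Alice'))  # Alice gets her message
print(book.on_person_recognised('Alice'))  # already delivered, so nothing left
```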

I have included my own list of links to suppliers/parts that I have found while researching this project - download it if you intend jumping into something similar - it might save you some time.

Summary

The take-away from this article is that if you can code, you can build a robot, really! ... There used to be a wide divide between the hardware guy and the software guy - this is no longer the case. The availability of low cost components and open source libraries makes it easier than ever before to put together really cool projects like this robot. Take the IoT plunge, who knows, you may come up with something you can commercialize, put on kickstarter and make your fortune. We are developers, we build stuff - go on, you know you want to, you'll never know 'til you try :)

P.S.: As always, if you liked the article, please consider a vote!

P.P.S.: When you're finished giving your vote <ahem>, pop over here for an interesting light look at current commercial domestic robots.

History

- 30th June, 2016 - Version 1

- 3rd August, 2016 - Corrections

- 5th August, 2016 - Added additional note on using sensors with code example

- 6th August, 2016 - Added list of links to suppliers/parts I have built up while researching this project

- 7th August, 2016 - Added link to report on commercial domestic robots

- 12th August, 2016 - Minor corrections/link addition

- 17th August, 2016 - Minor corrections/link addition