Biometric Locker- Intel Edison Based IoT Secured Locker With Cloud Based Voice and Face Biometric

4.95/5 (16 votes)

From unboxing to prototype, a complete C# and Node.js Intel Edison product development guide with a real time cloud biometric IoT project

"A Multi Modal Biometric System with Cloud Based Voice Biometric and Face Biometric Written in C# as PC App and a Face and Voice Biometric Node.js IoT App communicating over MqTT And Dropbox's Storage as Service to offer an Intel Edison based Secured Locker with Biometric Security."

- Download BiometricLocker-NodeJsIoT_App.zip - 13 KB

- Download myFirstProject.zip - 8.7 KB

- C# Voice Biometric Face Biometric DropBox MqTT PC IoT App - 28 MB

Index

Part A: Let's Learn

1. Background

2. Getting Started With Intel Edison

2.1 Setting Up Your Development Environment

2.2 Loading OS- Burning Linux Yocto Image to Edison

2.3 Configuring Intel Edison

2.4 Remote Login

2.4.1 Working with Edison through SSH

2.4.2 File Transfer

2.5 Solving WiFi Problem

3. Setting Up Development Environment

3.1 On-Board LED Switching

3.2 Blinking LED, the IoT "Hello World"

3.3 Powering Edison Board

3.4 Getting Started with Intel XDK

4. Embedded and IoT Workflow with Node.js and Grove components

4.1 Mini Project 1: Switch ON and OFF a LED based on a button press

4.2 Mini Project 2: Remote Control with MqTT

4.2.1 MqTT

4.2.2 Working with MqTT in Intel Edison

4.3 Mini Project 3 : Controlling Light Intensity Based on Room Light

4.3.1 Working with LCD in Intel Edison

4.3.2 Working with LDR

4.3.3 PWM- Controlling Light Intensity based on LDR value

4.3.4 Servo Control

5. Setting up Audio and Web Cam in Intel Edison

5.1 Setting Up Web Cam with Intel Edison

5.2 Setting up Audio

Part B: Let's Make

6. System Design

6.1 System Architecture

6.2 Fabrication

6.3 Voice Recognition With Knurld

6.3.1 Understanding Voice Recognition

6.3.2 Knurld API and Services

6.3.3 Knurld Process flow

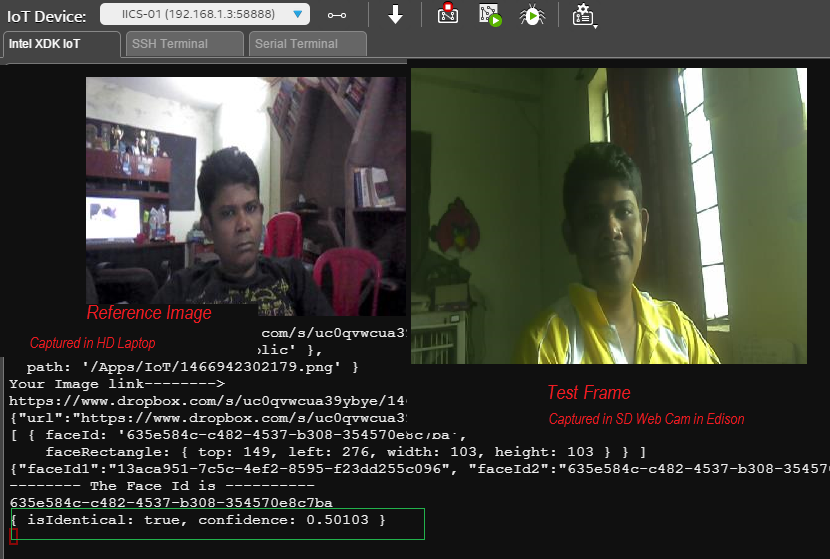

6.4 Face Verification With Microsoft's Cognitive Service ( Oxford AI)

6.4.1 Understanding face Recognition and Multi Biometric

6.4.2 Micosoft Cognitive Service- Face API

6.5 Dropbox - The bridge between App and Biometric Endpoints

7. Coding-Developing Software Suite for the Locker

7.1 Pc App

7.1.1 Setting And Registration

7.1.2 Voice Registration

7.1.3 Face Registration

7.1.4 A General Approach For Calling Cloud Services in C#

7.2 IoT Device App

8. Conclusion

Part A: Let's Learn

1. Background

Beside the usual "billions of connected devices and a trillion dollar economy by 2020" , the other most common statement that often gets associated with IoT is "security is a challenge". How do we create more secured framework for establishing more secured communication between a device and a cloud and among the edge devices is one of the areas of research and development in IoT. When I started planning about an IoT project for this tutorial, I wanted to work with security aspect of IoT. My initial plan was to work on various levels of encryption and write a tutorial around that. But then I realized that a tutorial without a solid use case will not of much use to the readers. I also wanted to review the security context being offered by IoT ecosystem in the context of an end product. So, I started thinking about a product that can showcase the security aspect. A cashbox is generally used by small businesses to keep daily transaction money. Many of us have lockers at home too. We keep essentials and valuables in lockers. Traditionally lockers are being protected with keys which are easy to forge. Some of the cutting edge lockers also offers fingerprint verification based system. A biometric based user authentication is considered to be more sophisticated security extension for such locks. So, I thought biometric security would be a great system to build if I have to create a highly secured system.

Needless to say, these systems works in an offline context and are pretty much independent system. So I thought "wouldn't it be nice to have a secured locker that can run on IoT ecosystem?"

Now, this thought is not merely because I wanted to develop a secured system around IoT and cloud, but there is certain advantage to it over the traditional system. The first is the scalability of the solution. For instance, let us consider a bank locker system. Different account holders have individual lockers. Let us assume that the bank implements an offline solution, as the customer base grows, the system would face severe scalability issues. Let us now assume that bank wants to create some more branches. In each of the branches the users that holds the lockers would have to be manually registered. If a customer changes the location, he usually needs to release current locker and go for a new one in new location. This becomes a tedious process for the banks. So, scalability and mobility becomes the core issues with offline biometric solution. The other important issue is that of the security of the biometric system itself. Ensuring that the biometric trait database is secured in the local server is also challenging. There is an entire suit of services and solutions for ensuring data security in the local storage.

Now, think what if we can take the solution to the core instead of keeping it at the edge? The core or the cloud as it is now being commonly referred, is an extremely scalable infrastructure with high level of reliability, security and computation power out of the box. Now imagine, if the biometric solution of the bank locker that we discussed is implemented at the cloud, a bank wouldn't have to worry about local security of the records, maintaining the system, scalability or mobility of the customers. They can focus on their business where as the security and scalability would be taken care by the cloud. This indeed was the motivation of this project called Biometric Locker.

The cloud also offers other advantages. For instance, a bank can easily offer mobile based verification system. So the biometric trait of the user collected for the locker can be easily extended to other core banking services. For example user can use his biometric trait to access his internet banking. Banks can extend the service to ATMs. They can even be extended to mobile banking. Mobiles being the most popular platform for personal and even some of the enterprise applications now, makes it even more important for the services to be in the cloud such that they can be extended across different devices and service areas.

So, a cloud based biometric locker was a good product to build from both an IoT ecosystem point of view, as well as a scalable enterprise security system.

But there is one other aspect that needed some thought. Which biometric traits? Iris, fingerprint, face, voice, palm prints are some of the popular choices of the biometric traits. But which can be cost effective and a scalable trait? Face immediately comes to our mind. Why? because it needs no extra hardware. A mobile has a camera, tablet has a camera, laptops have cameras. But, biometric systems are often vulnerable. For example a face biometric system can be easily gamed by presenting a high definition photograph of the user in front of camera. Liveliness detection system was built to counter this threat where a user is asked dynamically to make some facial gesture like smile or blink eye. If user could follow that, he would be considered as alive and his face will be verified. However, unfortunately due to requirement of subsequent frames for such a detection, many commercial face biometric providers have failed to offer this solution out of the box. Which is the other way to verify the user? Voice, of course. If user is asked to speak out certain phrases in random orders, then he would be considered as alive. It further enhances the security of the system. But the best part is that, like face, even this system needs no special hardware as tablets, mobiles and laptops all have MIC which can be used to record user's voice.

So, a cloud based biometric system with combined face and voice biometric would be an ideal mobile, scalable and reliable security ( authentication in more finer term) system would be the most obvious choice for a security system.

An efficient IoT ecosystem can be built only if sufficient thought is put behind connecting not only "things" but also the clients. So we propose a novel "Cloud Based Face and Voice Biometric powered Secured locker". However, our focus for this tutorial will be around implementing these techniques in edge IoT device( because at the end locker is a thing and the thing has to be secured).

The last ,most important question was "which device?" Raspberry Pi? Beagle Bone? Arduino Yun? After not so much thought as much I spent on the concept, I decided to go with Intel Edison. Why?

Because, trust me, nothing gets your IoT prototyping easier in comparison to Intel Edison and you would learn and admit that by the course of this tutorial. On a side note( you are welcomed not to note it at all) I am an Intel Software innovator and I have weakness around Intel technologies. Also, Intel have been kind enough to offer me the entire hardware kit for free( yay!!! the secret revealed). But, even if you don't get it for free and need to spend some amount on it, I advise you do so. Because Intel Edison and Grove kit combo can really bring out IoT skills to even a 10 year old kid.

Organization of the article

When I worked out the problem space that I want to work with, next obvious question for me was what I want to cover through this tutorial.

A simpler choice would have been to drop certain external links for some getting started guides and keep the focus area of this article around the core concept. But then I thought, if I am creating a product from the scratch, why not build a tutorial of the complete stack? From conceptualization to design and prototyping? So, instead of limiting this article as a code or hardware explanation limited to our Biometric Locker, I decided to guide a completely novice and never exposed to IoT ecosystem guy ( or girl!!! no gender bias intended! ) to encourage, help, guide and mentor to develop a commercial grade IoT product.

If you are already a punter in IoT, or an Arduino DIY guy and you already know things about "things", you can straight away jump to the section that introduces the security. If you are a security professional and you want to know how your skills can be used in IoT, you can use the tutorial to skip through security topics and focus on the IoT aspect. Whether you are a professional, or a hobbyist, or just a curious reader, you would found something interesting to learn from this article. If you do, just put a comment. Are you ready? Awesome, so let's get started. But, before that, a general warning:- this is going to be one huge article, so you are advised to bookmark this, make sure your coffee or beer stock(whatever you prefer) lasts till the end of the reading.

If you are already an active developer or a DIY or a hacker, skip the next paragraph, the absolute beginners are advised to read the footnote of this section.

Some quick getting started links for the beginners:

As IoT consists of framework, protocols, connectivity, cloud, hubs, buses, devices, standards and many more, you are advised to follow some basic tutorial and articles in IoT. If you have never worked with hardware before, I advise you to get yourself an Arduino UNO board first, get your hand dirty with some basic hardware hack and programming and then get started with this tutorial. Here are couple of quick links for you that might help you to accelerate your IoT learning.

1. Introduction to Internet of Things

2. Complete Beginner's Guide For Arduino Hardware Platform For DIY

Chapter 2: Getting Started With Intel Edison

In this chapter, we will learn about setting up our device, flashing operating system image, connecting the device to internet, allocating ip address, and some work around about Edison WiFi problems. This chapter is specifically for those of you who has just got an Edison board. Those of you who already have a board set up can straight way go to chapter 3.

2.1 Setting Up your development environment

This project is a multi-programming language-, multi-architecture, multi-hardware, multi-protocol stack and therefore need many tools, technologies. So, you are advised to grab all the necessary tools and install them to kick start making this project.

Hardware:

- Intel Edison with Arduino Expandable Board.( Amazon Link)

- Intel Edison Power Adapter ( I shall reveal why it is important in due course of time)

- A UVC Compatible USB web camera ( camera with microphone would be the most ideal choice)[ list of all the UVC compatible camera vendors]

- A Bluetooth microphone ( we are not going to use it in this tutorial. So if you already have a camera and Bluetooth microphone, then that is fine. I am just going to share some links for configuring Bluetooth microphone. However, be advised that separate microphone and camera will have performance issues)

- Grove Kit for Intel Edison( Amazon Link)

- A Grove compatible Servo motor( if your Grove kit doesn't come with one).

- USB Driver for Edison

- 7-zip for decompressing Yocto image

- PuTTY to connect to the board through SSH or Serial interface

- WinScp or FileZilla for easily transferring the files to and from Edison board

- Visual Studio 2015 Community edition. (I use VS2012). for developing our C# Client.

- Intel Edison Yocto build image

- Intel XDK IoT edition

- Bonjour from Apple for automatic discovery of IoT devices

- MyMqTT App in your mobile

You would need to setup many other tools which are not really required at this stage. You are starting with Intel Edison and IoT and therefore you need at least these tools. But more than anything, you need a good internet connection( after all it's INTERNET of things).

2.2 Loading OS- Burning Linux Yocto Image to Edison

There are tons of tutorials( here is one official tutorial by Intel->Flashing Firmware into Edison) about getting started guide with Edison. So I could easily avoid this section. However, to maintain the continuity of this article and to cover a complete end to end process of IoT product development with Edison, I would cover the firmware flashing part here.

If you have just got your brand new Edison board, you can follow the steps in figure 2.1 to unbox and prepare your Edison board.

Figure 2.1 Unboxing Intel Edison Board

Before you start with flashing, you have to power up the board. Please see figure 2.2 to know what each port and switches do in Edison board.

Figure 2.2 Understanding the switches and ports of Intel Edison

Figure 2.2 Understanding the switches and ports of Intel Edison

As you can see in the above figure, the first micro USB port ( the one after power selection switch) is used to flash firmware into Edison as well as is used for powering up the board through USB. So for flashing, it is sufficient that you connect a single USB cable with this port and proceed with flashing process. You can see the connection in figure 2.3

Figure 2.3: Connection Setup for Flashing

Figure 2.3: Connection Setup for Flashing

We will be using a utility called dfu-util( device firmware upgrade utility). Please go to the official dfu-util(http://dfu-util.sourceforge.net/) Source forge page and then from release folder download the windows exe (http://dfu-util.sourceforge.net/releases/dfu-util-0.8-binaries/win32-mingw32).

This tool in turn depends upon another tool called libusb which can be downloaded from the same Source forge release directory of df-utils (http://dfu-util.sourceforge.net/releases/dfu-util-0.8-binaries/win32-mingw32/libusb-1.0.dll).

Once you have downloaded the Yocto zip file( it would be named as iot-devkit-prof-dev-image-Edison-20160xxx.zip), right click ->7-zip->Extract Files, select a folder for extraction.

An obvious question here is why we need a separate zip/unzip pack and what's wrong with using WinRar or even windows out of the box unzip utility? Well, the reason is that the Linux distribution has some really deep nested files where the absolute path is extremely huge. Windows Unzip utility can not handle such large file path. Therefore we need 7-Zip. If you do not use 7-Zip, chances are that your installation will not completed successfully. Once the folder is unzipped, copy df-utils.exe and lib-usb.dll into this unzipped folder.

Your folder should look similar to following figure 2.4.

Figure 2.4 Directory view of unzipped Yocto with df-util and libusb

All you need to do is plug both the cables, make sure that Edison board power switch is towards USB ( don't worry, we shall discuss about powering up the board in another section). You can see following image to be sure of the connectivity.

Find and double click FlashAll.bat it should prompt you to reboot as bellow.

Figure 2.5 Initiating Flashing Process with flashall.bat

You can reboot Edison by pressing reset button ( see figure 2.2)on the board.

If things goes as par plan the installation will be completed and flashall command window will get closed.

It's time to connect the serial port with second micro USB, if you have not connected that while flashing. Connect your serial port to last micro usb port (figure 2.2).

Now when you right click on My-PC ( or My-Computer in Windows 7) and select properties->device manager under LPT ports you will see a new entry as shown in figure 2.6.

Figure 2.6: Detecting USB Serial Port for Edison

So, now your Edison board is connected to PC using Serial port. It's time to test if the board boots or not.

Open Putty. Select Serial radio button instead of default SSH and make sure that you have set the baud rate to 115200. The Edison board doesn't work with 9600 or 19200 baud rate which you may be habituated with if you have used Arduino earlier. Enter your comport and hit 'open'.

Figure 2.7 : Connecting to Edison board via Serial USB using PuTTY

You will see your Edison board booting as shown in figure 2.8 below.

After booting, Edison prompts you for user name, which is root. Password is not yet configured. So once you give root, you will see root@edison# prompt as shown below in figure 2.9. From here onwards we will refer this prompt as Edison shell.

Figure 2.9 User Login in Edison

Voila. You have just completed the first step- i.e. setting up your board. Cheer up yourself, you have done a great job. Take a break. When you comeback, we will start with the next important step, i.e.. configuring the board.

2.3 Configuring Intel Edison

There are few things to do before we can actually start coding with Edison. The first thing to be noted is that, Edison is an IoT device. IoT devices are needed to exchange messages through many secured and in-secured gateways locally as well as globally. So setting up a unique name and password for the board is a very important step towards getting things working properly. It is extremely simple. You can configure and setup the board with a single command in Edison shell.

configure_Edison --setup

First, it will prompt you for a password as shown below in figure 2.10

Figure 2.10 Password setup in Edison

Next, it will prompt you to enter a unique name for the device. If you are connecting many Edison devices in your network, which is basic requirement in many home automation as well as industrial application, then DNS needs a way to resolve the ip address of the devices. It is advised that you give a unique name to your device in order to avoid a DNS-IP conflict or DNS resolve error following figure shows device name setup.

Figure 2.11 Edison Device name setup

Once device name is setup, it will prompt you for password. Enter a password. If you are in production environment, create a strong password is recommended.

it will now ask whether you want to set up WiFi or not. Just type y and enter.

It will show you list of WiFi Networks where you have to select the network you want to get connected to, when it asks for confirmation, enter y and then enter your WiFi password.

Figure 2.12 Wifi setup in Edison

If things go as smooth as they often do, then you will see a dynamic IP address being assigned to your device. This is of course a local IP address corresponding to the your local network.

Now test your internet connectivity by pinging google.com. If you see packets exchange is successful in ping, then your internet is connected and you are almost ready to rock with your board.

(use ctrl+c) to stop ping.

Figure 2.13 : Ping test for checking internet connectivity

Figure 2.13 : Ping test for checking internet connectivity

This is the optimal setup you need to get started with IoT with Intel Edison. During the course of this tutorial we need to set up several other things like a web camera, audio, Bluetooth and so on. We shall skip those setup at this stage and cover them appropriately just before we start using them.

By the way, if you have reached till this point, you have done a great job so far.

Note(important for beginners):

When you are login for the second time through serial port in PuTTY, after hitting open button, you will see a blank console. Don't worry, just press enter and you will be to login shell.

2.4 Remote Login

Having setup our device, the first thing we need to do is to test if we are able to login to our Edison board remotely or not. In most of the cases, we will be deploying our IoT app with the device as an independent entity. That must not require any laptop connectivity for coding and debugging. We will explore two different techniques here: first one is SSH which is one of the most important tools ever developed (particularly for IoT), the second one is remote file transfer via WinScp. File transfers are important to backup your code, extracting sensors log from device, storing configuration files.

2.4.1 Working with Edison through SSH

Secured Shell or SSH as it is commonly referred gives you the power to log into your device even if your device is not connected to your laptop. SSH also gives you the power to work with Edison from any operating system. Once IP address is configured in Edison and it is in your local network, you can SSH to device from Unix shell or from mac with following command. It will prompt you for password. Enter the Edison password. You will be logged in Edison and you can work with the device.

ssh root@<Edison_ip_address>

As, my focus is on windows environment, I will be using PuTTY for remote login( you can also use PuTTY with Linux or Mac).

If you are using PuTTY, enter Edison_ip_address in IP address text box after selecting default SSH radio button as shown in above figure 2.14 and hit open.

As SSH establishes a secured communication, putty exchanges a certificate with your board. So, when you are using PuTTY for SSH to a particular device for the first time, it will show you a security warning with the exchanged certificate. Just accespt and you are done. It will prompt you with root.

Figure 2.15 : SSH Security Warning

Note that SSH doesn't require a serial communication. So, you can very well unplug the last micro usb cable ( serial port cable as in figure 2.2 and then test the SSH command.

The great thing about Edison is that it supports Multi-Session. So you can login to same board from different PC through SSH at the same time. You can also use two putty windows: one for SSH and other with Serial in the same PC.

Following figure 2.16 shows how I logged into my Edison device in two different sessions, one using Serial port and the other using SSH

Figure 2.16: Multi Session with Intel Edison

So, if you are a team lead or a senior, and one of your team mate is struck in a code, you can always login to his machine through SSH and access a copy of his current code :). More than one developers may also work in different projects simultaneously with Edison (!not tested)

2.4.2 File Transfer

While working with IoT devices, many a times you need to exchange files like: downloading log files, uploading configuration files and so on. It is therefore recommended to setup a file transfer tool at this stage itself. Though this is not an essential step for this current project, you may need while working with Audio and video.

There are many file transfer tools. FileZilla is a very popular tool. If you are using FileZilla, you can login to your board via SFTP as shown in figure 2.17.

Figure 2.17 : Setting up SFTP through FileZilla

Don't forget to change the default port 80 to 22. Once you login to device, you will see your Edison root folder on right and your working directory on left as shown in figure 2.18.

Figure 2.18 FileZilla Structure

You can transfer file to and from Edison by dragging the file from and to your pc's local directory. WinScp supports only SFTP and therefore is little simpler for the beginners. Following figure 2.19 shows a WinScp screenshot.

I personally prefer to use WinScp, but you can use any of the tools you are comfortable with. You can transfer some files to Edison, ls in shell and see if the transfer is successful or not. This should have been the end of chapter 2, but I want to cover another important topic which is included as a hack to solve WiFi problem. You need not to follow this subtopic here, but taking a glance at this topic will make you aware of the type of problem you may face with Edison's WiFi and how to workaround with that problem

2.5 Solving WiFi Problem

Many a times, if your WiFi is not reliable, or of weak signal strength, Edison doesn't get connected properly. So even though an IP address is associated with the device, the device will refuse to get connected via SSH. In case you are getting such connection problems, login through serial mode, and test ping google.com. If you see that the device is not pinging google ( it will shoot a command response "bad address google not found"), you need to manually re-configure Edison again( if your WiFi is working properly). As a first step you can generate reboot command from serial putty and after loggin in test ping again. If the device still doesn't get connected, in shell generate

configure_Edison --WiFi

Follow the WiFi setup steps once more. You should now be able to access the device once more through Edison.

One of the problems with reconfiguring WiFi is that Edison stores the WiFi configuration in a file /etc/wpa_supplicant/wpa_supplicant.conf

Each time you reconfigure, your settings are added to this file( even if you are entering the same network and password). If the file grows too big, or if internal write operation gets corrupted, then you will see an error after configure_Edison --WiFi which can be either no ssids shown, or failed to connect to global_iframe as shown in figure 2.20

Figure 2.20 Common WiFi problems in Edison

In case you get any of the above errors, do not worry. Here is a workaround of the problem.

- Remove the configuration file.

-

rm /etc/wpa_supplicant/wpa_supplicant.conf

- create a new configuration file with vi command

-

vi /etc/wpa_supplicant/wpa_supplicant.conf

- copy and and right click on vi editor to paste the contents the following configuration template

-

ctrl_interface=/var/run/wpa_supplicant ctrl_interface_group=0 update_config=1 fast_reauth=1 device_name=Edison manufacturer=Intel model_name=Edison network={ ssid="YOURSSID" scan_ssid=1 key_mgmt=NONE auth_alg=OPEN wep_key0=f0039faded348299992344be23 } - ESC :wq to save and come out of vi editor.

- reboot

Now again got Wifi setup stage and you should be fine :). Note that after reconfiguration, your ip address may get changed. Please check and note down the ip address.

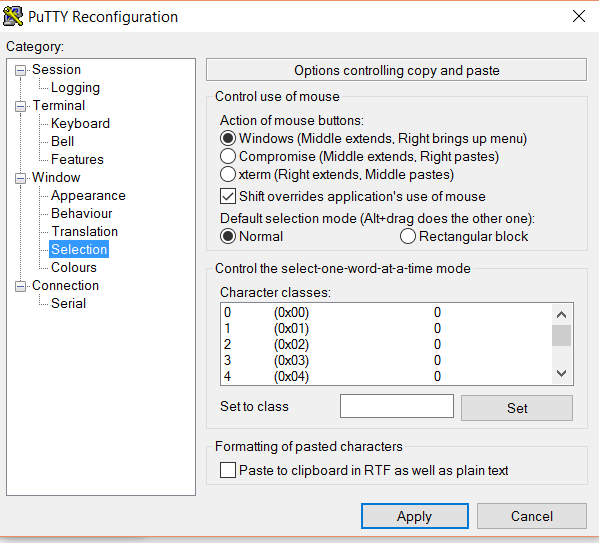

Note: If you are unable to paste on vi editor through right click, click on the top of shell to bring out change settings and make sure you have property being set as par following figure:

Figure 2.21 Desired PuTTY property for ability to copy paste

Well, that marks the completion of chapter 2. Here is a summery of what we learnt in chapter 2

Chapter Summery: What we learnt

- Unboxing Edison

- Flashing Yocto image into Edison

- Setup serial communication from PC to Edison

- logged in to Edison board via PuTTY and SSH

- File transfer to Edison using WinSCP or FileZilla

- And finally a work around to solve common WiFi problems in Edison.

3. Setting up Development Environment

In this chapter, we will learn about setting up our development environment, executing some basic programs in Node.js, understanding power and hardware components.

Edison supports many coding languages. Python, Arduino C and Node.js are some of these platforms. I love Node.js because of the simplicity of the language, plenty of libraries being available and support of Edison out of the box. So if you have setup the board using the steps covered in chapter 2, you do not need any other configuration. You can start coding :)

In this chapter, our focus will be understand writing simple codes in Node.js. It is better that you follow some great online tutorials on Node.js.

I particularly advise beginners to follow this Tutorial Point Node.js Tutorial which has inline execution option. So you can try the code as you learn.

However, through the course of this tutorial, we will cover important aspects of Node.js relevant to IoT context.

We will use Intel XDK IoT edition for writing, debugging, running and deploying the code. But, before we switch to Intel XDK, we will execute our first simple node.js program in vi editor to check every thing is setup perfectly.

Once we are done testing our first program ( which will be switching on-board LED of the board), we will discuss about powering up our Edison in different ways. Then we shall learn how to use different hardware components with Edison. Here is the agenda of this chapter:

- Simple On-Board LED glowing through Node.js code from vi editor

- Learning about powering option for Intel Edison

- Getting Started with Intel XDK

- Grove Sheild Connection

- preparing Intel XDK IoT edition for coding and debugging

Ready? Let's start "making"

3.1 On-Board LED Switching

Edison supports Node.js out of the box. So, you can straight away start codeing through vi editor. In your PuTTY Edison Shell type first.vi. Hit insert or 'i' key and type the following listing.

var mraa=require('mraa')

var ledPin=new mraa.Gpio(13);

ledPin.dir(mraa.DIR_OUT);

ledPin.write(1);

Press escape, then :wq and enter to save and exit.

To execute the script, type

node first.js

Look at your board. On board LED, which is hooked to pin 13 will now be on as shown in figure 3.1 below.

Figure 3.1: Onboard LED Switching

Awesome! You are on your way. Now, turn off this LED by changing the program!!!

Ok, getting back to what this program does!

mraa is a node.js wrapper of Libmraa which is a library written in C++ to access the hardware ports of Embedded and IoT devices running Unix.

Intel Edison Arduino expendable board has digital and analog pins just like Arduino. You are advised to read the Arduino article link I shared at the beginning of this tutorial if you are not aware of the actuators and sensors.

The on-board LED just like Arduino is hooked to pin 13. The pins are dual input out(DIP) type. So first we declare an object of mraa library called mraa. Now we access the pin 13 by declaring a variable called ledPin as a new instance of type mraa.Gpio() . As the pins can be used as input or output( but remember only of one type at any moment), we need tospecify the pin type through mraa.DIR_OUT or mraa.DIR_IN. As, we want to control the LED, LED needs to be an output device. Hence we select the direction as DIR_OUT. finally using write function to write 1 or 0 which results in turning on and off of the bulb respectively.

As, javascript is a scripting language, we do not need to define an entry point for the program. The program is executed from the first instruction.

However, you can observe one thing, the moment you execute the program, the LED will be on and your shell prompt will return. Which means that the program is finished executing. By definition, a microcontroller or embedded microprocessor is expected to run in an infinite loop, repeating the same sets of instructions over and over again differed by a time delay( recall the void loop() function of Arduino). So, in order to have our program do the same thing, we are going to create our own loop() function for node.js and extend our basic LED switching program into LED blinking which is considered as "Hello World" for embedded systems and IoT.

3.2 Blinking LED, the IoT "Hello World"

In node.js we can call a function asynchronously after predefined time period using setTimeout() function. Following is the general structure of a loop() that calls itself in every 1000 ms.

function loop()

{

//your code goes here

setTimeout(loop,1000); // recurssion in every 1000ms

}

You can write your main login in this function and from the script, you can simply call this function. Following in the blink.js code developed by tweaking first.js and above loop template.

//listing blink.js

var mraa=require('mraa')

var ledPin=new mraa.Gpio(13);

ledPin.dir(mraa.DIR_OUT);

var ledState=0;

loop();

function loop()

{

if(ledState==1)

{

ledState=0;

}

else

{

ledState=1;

}

ledPin.write(ledState);

setTimeout(loop,1000);

//ledPin.write(1);

}

We simply introduce a variable called ledState and invert it's value every time the loop is called. One obvious question that may come to mind is why not using a boolean variable instead? Because, write() expects it's parameter to be of integer type. So we use a number. Execute this code and you will see the onboard LED blinking in every 1 sec.

Can you alter this program to keep the LED on for 5 sec and off for 1 sec? Try out by yourself.

If your LED is blinking, you are now ready to start using hardware. We will start with Grove shield. But, before we use Grove sheild, I would introduce another sub-topic called powering up the board. As you read on, you would come to know the importance of understanding power and different power options that can be used with Edison and their use cases.

3.3 Powering Edison Board

One of the obvious question that may come to your mind would be "why the topic is introduced here? It should have been right at the beginning.Because, powering is after all one of the basic things".

Well, once we have a new toy, we want to get started with it first. We want to open, and have it's basic functionality. Then we start exploring the features. Blinking LED is one of the most important confidence boosters when you start working with an IoT component. So, I covered the essentials to help you reach upto this stage and understanding the hardware and program structure a little. Once you are at this stage, you have a ready working board to tinker with.

Table presented in figure 3.2 shows the different powering options, how to connect to Edison, their rating and use cases.

Figure 3.2 Intel edison powering up options

Generally I use the power bankfor my all non-camera based Intel Edison project because of mobility, good current rating and ease of use. I advise you not to power up Edison using USB as it has very poor current output. So, if you are using laptop to power up Edison, be prepared to frequent reboot and crash when many components are in used. Also, web cams do not work with USB powere board.

For robotic projects with 12v motor and relays and camera 12v battery is advise. Important things to note here is that many of these batteries have high current ratings like 5A or 7A. Please be sure to check the rating and go for batteries which offer 1.2A to 1.5A max.

The above table will be in your Edison reference list and would help you chose a power sourrce based on your requirement.

There is just one more setup before you can start working with serious IoT stuff with Edison

3.4 Using Grove Kit With Edison

"Time is money".

When you are prototyping, you want your model to be ready as quick as possible for a proof of concept. Image what if Copy-Paste was never invented? How many of us would still be an efficient coder? Code reusibility makes the software prototyping extremely fast and efficient. But the same isn't always true for hardware. You need to hook up connections, test voltage, power, have various connections and find out that something isn't working. You reopen the connection. Bread boards are one of the choices for temporary connection, but let's face it, you can't ever build a prototype on bread board and take it before the investors. They don't look neat, plus there is always a thick chance of connections coming out. Even though Arduino made the connections relatively simple by offering the most basic needs for a hardware prototyping out of the box, you still had to hook up the external components.

Grove kit by seed studio is one of the major leap towards making hardware prototyping simple. It allwows you to make good prototype at a fast pace, without worrying much about the connections.

So, let's begin with our Grove kit.

Let's open our Grove kit pack. You can see several components in slots. I prefer to mark them with marker so that I can place back the components after I am done with certain experiment. Here is how Grove kit looks.

Figure 3.3: Grove Kit Components: Inside the Grove kit box

I advise you to mark the solts so that you can always keep a track of your components. When you open remove the LCD, beneath it, you will find the base shield as shown in figure 3.4

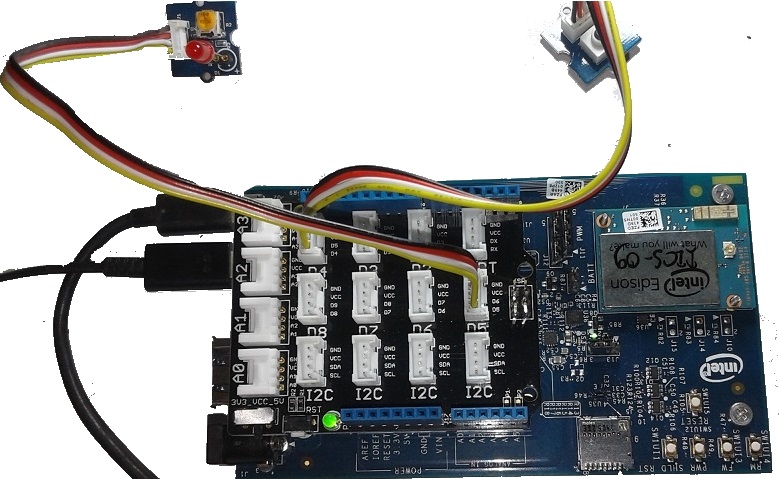

Now take out the Grove Base Shield as shown in 3.4 (a) and mount it on top of Edison Board as shown in figure 3.4(b). Ensure that the base shield has sit properly on the slot.

Figure 3.4 : Connecting Grove Base Shield with Edison

3.5 Connecting Hardware Components to Base Shield

Figure 3.5 Explains how to hook up Grove components with base Shield. The logic is pretty simple. Connect sensors with Analog ports, digital switches or output like Relay and LEDs with Digital port. If you want to use PWN for Speed control or Intensity control, use D5 or D6 which are PWM ports. LCD or Accelerometer must be connected to I2C port.

Figure 3.5 : How to connect components with Grove Shield

But, where are the connecting wires? pull up your white slotted box holding the components and you will see the connecting cables. Just take out the cable and connect one end to the component and the other end to the base shield port as per the table in figure 3.5 .

For those of you who are still unable to find the connecting cables and confused, refer to figure 3.6

Figure 3.6 Connecting Grove Component with Base Shield

The cables will get connected only in one way, so you need not to worry about a wrong connection. But if you really want to know the trick to "connect without confusion"?

Black wire goes to 'GND' marked side of the port. ( see above image)

In above figure, I have connected buzzer with D4. If you also connect the same, you can easily test it by changing pin number from 13 to 4 in blinking.js.

If you go through my Arduino tutorial that I had mentioned at the beginning of this tutorial and compare the effort I had to make in rigging up each of the circuits with the circuit you built, you would realize the revolution Seed's Grove kit brings with it!

Okey, so now you have flashed your Yocto, configured Wifi, tested LED blinking, connected Grove kit and tested it. It is now time to get started with serious coding and prototyping with the board.

3.6 Getting Started with Intel XDK

You have already developed a simple program in vi editor. So, an obvious question may be "why we need another software?". Well, vi editor is great to get started but is not ideal for large and real time projects as it doesn't have code searching, module maintanance, intellisense and other minimum requirement for clean coding.

Another question that might come to your mind is that why we did not set it up early? Because Intel Edison needs WiFi to get connected with your device. Once WiFi is setup, you definately want to test your board is working or not. Right?

Once you have followed all above steps, you are already a confident IoT starter. So let's complete the journey from beginner to intermediate.

Before you start Intel XDK, ensure that you have installed Bonjour as listed in software requirement section. This is used by XDK to discover your Edison.

The XDK would ask you to register an account with Intel when you run it for the first time. Once you have registered, sign in with your account.

In the bottom click on the combo box with text '-select a device-'. if your device is up and running in the network, XDK will automatically detect the device and show you in the list as shown in figure 3.7.

Figure 3.7 Device selection in XDK

If you are connecting te device for the first time, then it will prompt you for the password for default user root. Once you enter the password, you would be alerted that "your device is connected".

Click on the top project tab and then select "create new project" from the bottom as shown in figure 3.8

Figure 3.8 Creating a new project in XDK

Now select, Internet of things Node.js project. XDK comes preloaded with tons of ready projects for you to qucikly learn IoT coding. But, I am not too big fan of these templates. So, I would rather prefer a fresh and blank project for you start with bare minimum and then keep adding your devices and codes on top of it.

So, select blank template as shown in figure 3.9

Figure 3.9: Project type selection in XDK

Give a project name ( change the project location if you want your new project to be at a different directory than default).

Once the project is created, you can start writing your node.js code into the default main.js file. XDK shows you hints, compiles as you edit the code, highlights syntax, and supports many more features!

Figure 3.10 Intelli-Sense with XDK

Having run your first two basic programs in vi editor, you will immidiately fall in love with Intel XDK. I gurantee!

Write your code and use the upload and run button to upload the code to your board and run the code as shown in figure 3.11

Figure 3.11: Uploading and running Node.js code in XDK

XDK is a complete tool for Edison. You can login to your device and use commands the same way you did from PuTTy using both Serial port as well as through SSH from the tabs at the bottom of the editor.

try out your first two programs (first.js and blinking.js) in XDK. There is no switching back to vi :D

try out:

- try to login to Edison via SSH from XDK and execute ls command

- take one LED and one buzzer with two different ports and try to blink them alternatively, i.e. LED-ON<->Buzzer Off

With, that we come to an end of this chapter. let us recall what we have learnt in this chapter:

Chapter Summery:

- How to write simple node.js program

- On-Off and blinking on-board LED

- How to power up our Edison board

- How to use Grove kit with Edison

- How to write more efficient code with Intel XDK

The next chapter will be dedicated to make you a better IoT programmer. We will hook different components and implement some important logic. We will understand the hardware-node.js-internet-node.js-hardware workflow.

Cheers!!! for completing this chapter and congrats for setting up your development environment successfully.

4. Embedded and IoT Workflow with Node.js and Grove components

In this chapter, we will take some simple use case projects and implement them through node.js. We will learn how to install external libraries with npm, how to design an Embedded workflow, how to plan the circuit and coding, how to communicate through IoT specific protocols ( remote control). We will use the knowledge we gain here to create our final prototype. The simple mini projects here are the basic building blocks for our final project ( in fact for a lot of IoT projects)

This is going to be helluva fun. Let's just get started.

4.1 Mini Project 1: Switch ON and OFF a LED based on a button press

You need to connect a Butoon and a LED with Grove shield. When you press the Button, LED should be On and It should remain on untill you press the button again. When you press the button again, the LED should be turned off.

Let's rig up the circuit first.

The push button is essentially a switch ( kind of a sensor). So which port are we going to attach it to? Is it Analog or is it Digital? Well, technically you can attach the push button to either Analog or Digital port. But the Button have only two states: On and Off. We use Analog ports for sensors whose value varies. So, we will connect he button with digital port here. LED as we know goes to digital port.

Can you rig up the circuit as per the table given in figure 4.1? ( I am pretty confident that you can).

Figure 4.1 Connection for Mini Project 1

When was the last time you specified an Electronic circuit as a table ????

That's how simple Grove and Edison makes your development. let me also clarify why I have chosen particularly D5 for LED connection! In a later stage, I want to control the intensity of the LED depending upon the light intensity. Recall from chapter 2 that intensity or speed control is done through PWM and pin D5 and D6 are only PWM ports in Grove(refer figure 3.5)

Even though, I am pretty confident that you have connected the components correctly, I am also giving you a snap of the circuit, in case you want to validate!

Figure 4.2 : Circuit For Mini Project 1- Switching LED with Button

Let's now see the coding. can you please try writting the code for the above logic?

I am sure you have written something like this

//listing: wrongLedSwitch.js

var mraa=require('mraa')

var ledPin=new mraa.Gpio(5);

ledPin.dir(mraa.DIR_OUT);

var buttonPin=new mraa.Gpio(4);

buttonPin.dir(mraa.DIR_IN);

loop();

function loop()

{

ledPin.write(buttonPin.read());

setTimeout(loop,1000);

}

just like read() is a method that returns intpu port's value. As we are considering Gpio, which is a digital port, the value would be either one or zero. So, you are trying to control the state of the LED based on the state of the button.

what is the problem? You must have observed, as you press the button, LED is turned on but as you release, it also becomes off. But we don't want that right? Once release, it should remain ON untill we press the button again. Then the LED should be off.

So, logically speaking, when the button state is 1 and LED state is o, LED state becomes 1. When button state is 1 and LED state is 1, LED state should become 0. Observe that we need a state variable here to implement the logic appropriately.

Also, you might have observed a latency in state change once the button is pressed. This is due to high delay in the loop. 100ms is a standard delay for looping. So, you need to change the delay to 100ms. Along with that, you need to introduce a state variable.

So we modify the code as bellow:

As you can see, instead of dealing with the state of the button, we are dealing with the transition. Whenever, there is a button state transition from 0 to 1, we are triggering the action.

Anything that causes a state change in putput port is known as triggers. In many IoT ecosyste you will hear this word trigger. A trigger can be a hardware trigger as in this case or a logical trigger like ( high temperatyre value or low light intensity). A trigger in IoT can be implemented locally or in the cloud.

// --> copy this code in main.js in your project

var mraa=require('mraa')

var ledPin=new mraa.Gpio(5);

ledPin.dir(mraa.DIR_OUT);

var buttonPin=new mraa.Gpio(4);

buttonPin.dir(mraa.DIR_IN);

var ledState=0;

var btnState=0;

loop();

function loop()

{

var b=buttonPin.read();

if(b==1 && ledState==0 && btnState==0)

{

ledState=1;

ledPin.write(1);

btnState=1;

}

else if(b==1 && ledState==1 && btnState==0)

{

ledPin.write(0);

btnState=1;

ledState=0;

}

if(b==0)

{

btnState=0;

}

setTimeout(loop,100);

}

Now you can see, whenever we are pressing the button, the LED glows, remains ON till we again press the button as shown in animated figure 4.3.

Figure 4.3: LED Switching With Push Button

having been able to complete our first mini project, let us lern so IoT stuff. In the next mini project we will incorporate remote control of the same LED along with the switch that we have used here.

4.2 Mini Project 2: Remote Control with MqTT

4.2.1 MqTT

Figure 4.4 Basic Structure of MqTT Protocol

MqTT( figure 4.4) is a simple publish-subscribe protocol which in my opition is one of the best things to have happened for IoT.

A global MqTT broker allows the client programs to create a topic ( which is like a queue) in the server. A client program can be run on any device, including but not limited to Mobile, tablets, laptops, PC and of course an IoT device. Let us call an entity which runs a MqTT client as node9 nothing to do with node.js node). Plenty of Open source client libraries for MqTT is available in literally every programming language and stacks.

So, a node can publish binary data ( called message) into any topics. Topics can be heirarchially nested. For example We can create a topic as rupam, then rupam/home, then rupam/home/sensors and rupam/home/control, then rupam/home/control/locks and rupam/home/control/devices.

A node that subscribes to rupam will get every message published in either rupam or rupam/home or rupam/home/devices and so on.

Many nodes can subscribe to one or more topics. Whenever a node publishes a data in any of the topics, every node that have subscribed to the specific topic will receive the message from the broker asynchronously.

Further messages can be of two types: persistant and non-persistant. A persistant message will be retained by the broker even after it is pushed to subscribing nodes, where as non-persistant messages will be flushed.

So, a program that wants to use MqTT service needs to connect to broker, subscribe to topics and publish message into the topics.

In our current work context, all we need to do is publish message from our Android phone and write a node.js program in Intel Edison to subscribe to that topic. Whenever a message arrives through broker, analyze and take decision.

Important Resources:

Here are two free brokers for you that you can subscribe to enjoy IoT based messaging for FREE!!!!!

- iot.eclipse.org

- test.mosquitto.org

4.2.2 Working with MqTT in Intel Edison

MqTT is not included in core Node.js package. Therefore Edison doesn't support it out of the box. However the good news is that just like other Node.js environment, in Edison also, installing new Node.js package is painless and simple.

Installing external Node.js packages

Figure 4.5: Installing External Node.js package in Edison using npm

Just login to Edison using SSH tab of XDK. in the shell enter:

npm install mqtt

That's it. The package will be installed. You can now play around with MqTT. So, from our code we need to connect to a broker (we will use iot.eclipse.org here), subscribe to a topic( let's say rupam/control) , on message arrive event handler, analyze the message. If message is ON, switch on the LED, if OFF, turn off the LED. We also want to keep our switch based control intact. So we will modify ledSwitching.js listing of previous section and add MqTT feature.

Here is the MqTT part:

//////// MqTT Integration/////////

var mqtt = require('mqtt');

var client = mqtt.connect('mqtt://iot.eclipse.org');

client.subscribe('rupam/control/#')

client.handleMessage=function(packet,cb)

{

var payload = packet.payload.toString()

console.log(payload);

payload = payload.replace(/(\r\n|\n|\r)/gm,"");

if(payload=='ON')

{

ledState=1;

ledPin.write(1);

}

if(payload=='OFF')

{

ledState=0;

ledPin.write(0);

}

cb();

}

/////////////////////////////////

We create a mqtt client called mqtt, connect to the broker and subscribe to a channel called rupam/control. in the handleMessage() event handler we convert the message to string, remove extra blank characters and check for message text. We implement switching decision based on message.

Observe that we have created an function called cb() which stands for callback, and very importantly we call cb() again from handle message. This is a very important statement. If you forget to call cb() again from handleMessage(), then you will receive only one message :) rest of the messages will not trigger the handleMessage() function because client would have come out of it already. By calling the callback again we ensure that the client automatically go to listen mode again after it handles the first message.

Importantly, do not implement this chunk of code inside your loop. Because, if you do, it will keep creating a client instance for every looping instance, destroying the previous instance. So, you will never get a message because even before that message is arrived, listing client is reinitialized.

putting this back into our ledSwitching.js, here is the final code:

var mraa=require('mraa')

var ledPin=new mraa.Gpio(5);

ledPin.dir(mraa.DIR_OUT);

var buttonPin=new mraa.Gpio(4);

buttonPin.dir(mraa.DIR_IN);

var ledState=0;

var btnState=0;

//////// MqTT Integration/////////

var mqtt = require('mqtt');

var client = mqtt.connect('mqtt://iot.eclipse.org');

client.subscribe('rupam/control/#')

client.handleMessage=function(packet,cb)

{

var payload = packet.payload.toString()

console.log(payload);

payload = payload.replace(/(\r\n|\n|\r)/gm,"");

if(payload=='ON')

{

ledState=1;

ledPin.write(1);

}

if(payload=='OFF')

{

ledState=0;

ledPin.write(0);

}

cb();

}

/////////////////////////////////

loop();

function loop()

{

var b=buttonPin.read();

if(b==1 && ledState==0 && btnState==0)

{

ledState=1;

ledPin.write(1);

btnState=1;

}

else if(b==1 && ledState==1 && btnState==0)

{

ledPin.write(0);

btnState=1;

ledState=0;

}

if(b==0)

{

btnState=0;

}

setTimeout(loop,100);

}

We want to control the led by sending ON and OFF message from our mobile. Again you can implement a simple MqTT Android client using Eclipse Paho Library

But, we are not really into Android. So instead of writing an App purely for this testing, we will use an existing MqTT client App from Google Play!

I particularly like and use MyMqtt app for my testing purpose.

Open the App, go to settings, connect to iot.eclipse.org, leave other defaults. Now go to publish section and in topic write rupam.control [ important:: Don't give a # while publishing]. Now Send ON or OFF to see your LED getting ON and OFF.

See the result in figure 4.6

Figure 4.6: Controlling LED over Internet using MqTT

Woohoo! You have just done your first "real IoT" program in Edison and controlled a LED remotely. Why don't you now try to control LED as well as Buzzer with commands like 'LED ON', 'LED OFF', 'BUZZ ON', 'BUZZ OFF'?

You can very well use small case as commands, but I prefer a capital command case to distinguish them from notmal text.

With that we come to an end of our Mini-Project 2 where we have learnt how to remote control components as well as the communication protocol between clients

In our next mini project, which will be the last of our learning projects, we will control the LED intensity as per room light intensity and provide details in a LCD. So, will will learn about PWM, LCD as two major things in next mini project as part of our learning experience.

4.3 Mini Project 3 : Controlling Light Intensity Based on Room Light

In this mini project, we will connect a LCD to display room light intensity, and change the intensity of the LED as well as the back color of the LCD as per the room light. We will keep our switching logic that we had developed in the previous sections. So by the end of this mini project, you would be able to switch your LED, along with controlling it's intensity. You will also learn about LCDs.

You need to add two extra components here: LCD and a Light Sensor. Can you rig up the circuit by glancing figure 3.5?

4.3.1 Working with LCD in Intel Edison

We will connect a LCD with any of the I2C ports ( the program will auto detect the port). We will first do some basic LCD coding. We will then implement pwm in 4.3.2 and then combine them into one entity in 4.3.3.

Figure 4.7: Connecting LCD with Intel Edison

Coding LCD is also quite simple. The LCD provided with Grove is in-fact a RGB back light 2x16 LCD. Which means that you can print messages in two lines, as well as change the background color of the LCD.

Let us create a simple LCD 'Hi Codeproject' program

// lcd.js

//// LCD part///////////

var LCD = require('jsupm_i2clcd');

var myLCD = new LCD.Jhd1313m1(6, 0x3E, 0x62);

myLCD.setColor(255,0,0);

myLCD.setCursor(0,0)

myLCD.write('Hi Codeproject')

myLCD.setCursor(1,0)

myLCD.write('Intel Edison')

////////////////////////

jsupm_i2clcd is a UPM i2c library for LCD that comes out of the box. So no new install. I have no idea of what Jhd1313m1(6, 0x3E, 0x62); is, buit seems needed for LCD initialization :(

Rest of the code is straight forward. First set back color with setColor(r,g,b) function. Set the cursor position using setCursor(row,col) . First we print 'hi codeproject' in 0th row, i.e. first line and then we set the cursor to second line ( row=1) and print 'Intel Edison'.

Here is how the result looks like:

Figure 4.8: Hello LCD with Intel Edison

We will now hook up a light sensor( also called LDR or light dependent resistor). Check out the next section for knowing LDR functioning

4.3.2 Working with LDR

I must tell at this stage that we are not going to use LDR in our project. So, I thought of by-passing this topic. However, in order to make your learning curve complete, I have put up this section. The objective of this section is purely to let you know about the working of sensors and have no bearning on our project. ( So, if you are interested only in our Biometric locker, you can skip this section. For first time learners, this is imporant). However, we shall cover some LCD best practices here. So, you would lose out if you skip this section!

As LDR is a Sensor, we will hook it up in any of the four available analog ports: A0-A3 ( refer figure 3.5)

Let's connect our LDR with A0 here. All you have to do is declare a ldrPin as new mraa.Aio(0). In loop, read the value of the sensor in a variable and display in LCD.

We will use write(),setCursor(row,col) and setColor(r,g,b) inside the loop and do the initialization at the top.

Let us modify ledSwitchingMqtt.js by adding sensor handling and lcd.js

var mraa=require('mraa')

var ledPin=new mraa.Gpio(5);

ledPin.dir(mraa.DIR_OUT);

var buttonPin=new mraa.Gpio(4);

buttonPin.dir(mraa.DIR_IN);

var ledState=0;

var btnState=0;

// LDR Part//////////////

var ldrPin=new mraa.Aio(0);

// no need for initializing dir for Aio as Aio pins are only input type

//// LCD part///////////

var LCD = require('jsupm_i2clcd');

var myLCD = new LCD.Jhd1313m1(6, 0x3E, 0x62);

myLCD.setColor(255,0,0);

myLCD.setCursor(0,0)

myLCD.write('Hi Codeproject')

myLCD.setCursor(1,0)

//myLCD.write('Intel Edison')

// we will use second line to print light value

////////////////////////

//////// MqTT Integration/////////

var mqtt = require('mqtt');

var client = mqtt.connect('mqtt://iot.eclipse.org');

client.subscribe('rupam/control/#')

client.handleMessage=function(packet,cb)

{

var payload = packet.payload.toString()

console.log(payload);

payload = payload.replace(/(\r\n|\n|\r)/gm,"");

if(payload=='ON')

{

ledState=1;

ledPin.write(1);

}

if(payload=='OFF')

{

ledState=0;

ledPin.write(0);

}

cb();

}

/////////////////////////////////

loop();

function loop()

{

var b=buttonPin.read();

// LDR part/////////

myLCD.setCursor(1,0);

var ldrVal=ldrPin.read();

// convert the value into percentage

var pc=ldrVal*100.0/1024.0;

pc=Math.floor(pc * 100) / 100;

myLCD.write('Light='+pc+' %')

/////////////////////

if(b==1 && ledState==0 && btnState==0)

{

ledState=1;

ledPin.write(1);

btnState=1;

}

else if(b==1 && ledState==1 && btnState==0)

{

ledPin.write(0);

btnState=1;

ledState=0;

}

if(b==0)

{

btnState=0;

}

setTimeout(loop,100);

}

Let us analyze the LDR part:

// LDR part/////////

myLCD.setCursor(1,0);

var ldrVal=ldrPin.read();

// convert the value into percentage

var pc=ldrVal*100.0/1024.0;

pc=Math.floor(pc * 100) / 100;

myLCD.write('Light='+pc+' %')

/////////////////////

Analog ports in Arduino compatible devices are internally hooked to 10 bit A2D converters. So read() function on analog pin returns a 10 bit digital equivalent of the sensor value. Maximum value is 1024. After reading light intensity, we are converting into percentage. Math.floor() is used to convert the number to 2-place decimal only.

Try the example by commenting out this line.

observe we are calling myLCD.setCursor() every time in loop. Because, by default the cursor position will be placed at the end of the last string.

Try the example by commenting out the setCursor() call.

Here is how the circuit and the display looks like:

Figure 4.9 LDR Circuit and Result Displayed in LCD

So, in this section we learnt

- how to efficiently use LCD's 16 character in a line to efficiently display information

- how to work with sensors( LDR in particular) and display it's value in LCD

In next section we will learn about controlling our LED intensity depending upon light value.

4.3.3 PWM- Controlling Light Intensity based on LDR value

The first question that obviously comes to reader's mind is "what has a light intensity to do with a locker"? Well, actually nothing direct. But, we will be using servo motor in our project for controlling the lock( which will be covered later). Servo control is based on PWM. Even though we will use a library for motor control which will not expose PWM low level calls directly, it is always good to understand how to work with PWM so that you can in future write your own Servo control logic based on raw PWM calls.

In the context of the current tutorial, this section also helps in performing switching and PWM control through local switch as well as from remote using MqTT.

Some of the PWM basics can be found in my Arduino Basic Tutorial's PWM section. So, we will bypass the theory part and just take a simple summery from that tutorial.

Pulse Width Modulation or PWM is a way of controlling current through a load ( like motor/ light etc) by varying the duty cycle of the pulse. A pwm signal with duty cycle 70% means that the motor connected with the associated pin will be rotated with a speed of 70% of it's max.

Here is the code:

var mraa=require('mraa')

/*---------- Uncomment for Plain Switching *****/

//var ledPin=new mraa.Gpio(5);

//ledPin.dir(mraa.DIR_OUT);

/*---------- Uncomment for Plain Switching *****/

//---- PWM---------//

var ledPin=new mraa.Pwm(5);

ledPin.enable(true);

//---------------------//

var buttonPin=new mraa.Gpio(4);

buttonPin.dir(mraa.DIR_IN);

var ledState=0;

var btnState=0;

// LDR Part//////////////

var ldrPin=new mraa.Aio(0);

// no need for initializing dir for Aio as Aio pins are only input type

//// LCD part///////////

var LCD = require('jsupm_i2clcd');

var myLCD = new LCD.Jhd1313m1(6, 0x3E, 0x62);

myLCD.setColor(0,255,0);

myLCD.setCursor(0,0)

myLCD.write('Hi Codeproject')

myLCD.setCursor(1,0)

//myLCD.write('Intel Edison')

// we will use second line to print light value

////////////////////////

//////// MqTT Integration/////////

var mqtt = require('mqtt');

var client = mqtt.connect('mqtt://iot.eclipse.org');

client.subscribe('rupam/control/#')

client.handleMessage=function(packet,cb)

{

var payload = packet.payload.toString()

console.log(payload);

payload = payload.replace(/(\r\n|\n|\r)/gm,"");

if(payload=='ON')

{

on();

}

if(payload=='OFF')

{

off();

}

cb();

}

/////////////////////////////////

loop();

var desiredLight=1;

function on()

{

ledState=1;

//------ Uncomment for plain switching///////

//ledPin.write(1);

//-------------------------------//

// PWM---------//

console.log(desiredLight);

ledPin.write(desiredLight);

/////////////////

/////////////////

}

function off()

{

ledState=0;

// For pwm only////////

ledPin.write(0);

}

var t=0;

function loop()

{

t++;

var b=buttonPin.read();

// LDR part/////////

myLCD.setCursor(1,0);

var ldrVal=ldrPin.read();

// convert the value into percentage

var pc=ldrVal*100.0/1024.0;

pc=Math.floor(pc * 100) / 100;

myLCD.write('Light='+pc+' %')

desiredLight=(100-pc)/300;

if(ledState==1)

{

// if light is on adjust intensity every 5 sec

if(t>=50)

{

ledPin.write(desiredLight);

t=0;

}

}

/////////////////////

if(b==1 && ledState==0 && btnState==0)

{

on();

btnState=1;

}

else if(b==1 && ledState==1 && btnState==0)

{

off();

btnState=1;

}

if(b==0)

{

btnState=0;

}

setTimeout(loop,100);

}

Observe, for PWM, we are declaring ledPin as mraa.pwm(5). Calling enable(true) activates PWM mode.

//---- PWM---------// var ledPin=new mraa.Pwm(5); ledPin.enable(true); //---------------------//

Also note that, we have separated switching logic as on() and off() functions. write(0) is turning off logic for plain digital pins as well as in pwm mode.

Interestingly in the on() function we have used write(desiredLight) instead of write(1) which we used for plain switching.

desiredLight is a global variable, whose value changes in the loop() function right after calculating light percentage. pwmPin.write(duty_cycle_in_decimal) expects you to specify the desired duty cycle in fraction ( eg: .77 for 77% duty cycle, .63 for 63% duty cycle and so on). So we calculated desiredLight as a fraction by subtracting light percent pc from 100 and dividing that by 100 for percentage to fraction conversion.

So, if room light is 80%, we will be glowing our led with only 20% intensity, so desiredLight will be .2 .

Also, note that we are actually changing the intensity value in every 5 seconds when the light is on.

Execute this program. What you see?

You will notice that everything is working fine, just that after switching off, LED is not entirely turned off. It is glowing with very little intensity as shown in figure 4.10.

Figure 4.10: LED glowing even while it is off in PWM mode

This is because, in pwm a signal pulse is sent to keep the pwm pin active. The pulse width of no pulse can be zero, so the light never actually turns off.

But, don't worry. This problem can be overcome by calling enable(false) after write(0) in off() and calling enable(true) before write(desiredLight) in off() function. What if you change the order? If you call enable(false) before write(0), that pwm duty cycle of 0 will never be written and LED will not at all turn off.

So, our on and off functions are now modified as below.

function on()

{

ledState=1;

//------ Uncomment for plain switching///////

//ledPin.write(1);

//-------------------------------//

// PWM---------//

console.log(desiredLight);

ledPin.enable(true);

ledPin.write(desiredLight);

/////////////////

/////////////////

}

function off()

{

ledState=0;

// For pwm only////////

ledPin.write(0);

ledPin.enable(false);

/////////////

}

When you are enabling the pwm from on() function, you must not do it at the time of declaration. I do not know why, by enabling pwm multiple times fails to write the percentage value :(

//---- PWM---------//

var ledPin=new mraa.Pwm(5);

//ledPin.enable(true); don't call it here--> enable it from on function

//---------------------//

4.3.4 Servo Control

I had earlier planned to to include a separate sub section for Servo control and explain it directly in our IoT device app. But, my wife suggested that Servos may be needed in many other projects like robotics. So creating a basic working of Servo with Intel Edison will be good. As you know, we the men often are weak towards lady's suggestions. So here is the sub section demanded strongly by my wife.

Essentially you need to hook the servo with the PWM port like D5/D6 as you have done for LED intensity control.

We need two npm libraries for working with Servos. johney-5 and edison-io. johney-5 is particularly popular for robotics DIY in many platforms including R-Pi. Add them into your code with require function of Node.js

var five = require("johnny-five");

var Edison = require("edison-io");

What we will try to do here is connect the servo with D5 and a button in D4( yes, remove that LED and keep it in place in your Grove box). When we press the button once, Servo should move clockwise ( once) then again when we press the button, it should move 90' anticlockwise to come to it's actual position.

So, essentially we will be controlling the angle of the Servo's position. let's a 0' current position and 90' the other position. Let us define a global variable angle to keep track of current value.

Initially angle is 0.

var angle=0;

let us define a variable called sw ( short for switch) for defining button state.

var mraa=require('mraa');

var sw=new mraa.Gpio(4);

sw.dir(mraa.DIR_IN);

Let's initialize johney-5 and set the servo angle to 0.

var board = new five.Board({

io: new Edison()

});

var servo = new five.Servo({

pin:5

});

servo.to(0);

servo.to(ANGLE) can be used to set Servo's angle to ANGLE angle needs to be specified in degree. So 90', 45' are valid Servo angles.

This is extremely simple from this point on. You already know the switching logic from your LED Controlling with Button example. All you have to do now is replace ledPin.write(1) with servo.to(90) and ledPin.write(0) with servo.to(0). You also need to replace ledState=1 with angle=90 and ledState=0 with angle=0. In the comparision logic instead of comparing with ledState, compare angle.

function loop()

{

var b=sw.read();

if(b==1 && angle==0 && btnState==0)

{

angle=90;

servo.to(90);

btnState=1;

}

else if(b==1 && ledState==1 && btnState==0)

{

servo.to(0);

btnState=1;

angle=0;

}

if(b==0)

{

btnState=0;

}

setTimeout(loop,100);

}

Didn't I tell you that working with Node.js and Intel Edison is really simple? I am not adding any snaps of this experiment because I am now confident that you don't need an illustration for this experiment.

With that we come to an end of our learning process of Intel Edison basic hardware. There are plenty more to learn like accelerometer, vibration sensor and so on which can not be covered in this article.

I included this section for beginners in Edison to get comfortable with coding so that we we start with our project, you are comfortable and can perform the steps yourself.

Let us recall things we learnt in this chapter before starting the next chapter where we will be configuring Web Cam and Audio as integral part of our project.

Chapter Summery: What we learnt

- How to implement input-output embedded program logic in Node.js

- How to design hardware easily with Grove connectors and Grove Shield

- Design process

- We performed three live mini projects as part of our learning

- We learnt about MqTT, an easy and universal message exchange protocol for IoT

- We controlled LED remotely using our mobile

- We learnt how to use LCD efficiently

- Use of PWM and using PWM for both switching as well as intensity control

- How to use npm from XDK ssh in Edison to install externall Node.js libraries.

You can download all the codes in three mini projects as a single Intel XDK project from following Link. ( It includes package.json which automatically installs all the dependencies like MqTT. So once you import this project to your XDK, you wouldn't have to worry about installing npm separately.

Download all Mini project code as single XDK project

5. Setting up Audio and Web Cam in Intel Edison

Finally, after getting a foot hold on Intel Edison and doing three confidence booster projects, you are now ready to leap ahead and do some non-hardware media related operation with Intel Edison. As the name of the project suggests we are going to use two modalities for our project: Face and voice. Face recognition needs you to capture images from WebCam and Voice Verification needs voice capture from input Audio devices. Therefore the first step is to configure them.

Unfortunately there is no plug and play ways of doing it. Both camera and Voice needs to be configured first before you are able to work with them.

I am putting Voice and Camera configuration as a separate chapter and abstracted it from the core project development chapter to present it as a resource. If your own idea includes working with either or both of camera and voice, then you can use this chapter as a reference for setting up.

This chapter will have two sub topics:

- Configuring Web Camera with Intel Edison

- Configuring Audio with Intel Edison

Let us get started with Configuring camera. Before that, you may want to have a close look at our hardware requirement section to know what camera is needed. Non-UVC compatible camera will not work with Edison.

Also note that Web camera has to be connected to Edison via it's host USB port. So, Edison has to drive the current for the camera. Therefore a 12v supply is mandatory for the device. Please refer figure 3.2 for more details. I advise you to stick to Official Edison 12v power supply for working with Audio and Video in Edison.

5.1 Setting Up Web Cam with Intel Edison

I am using a Creative Sensz3d camera because I got it from Intel during Perceptual computing Challenge(free!) and it has got a nice Microphone array for capturing high quality Audio. You can go for any UVC compatible camera with Microphone.

If you have worked with Linux, most of the releases has nice "sudo apt-get install" kind of stuff which makes the life easy in Linux platform. However, Yocto doesn't have any such package manager out of the box. But fortunately Alex has created an awesome package manager for Edison called opkg which is unofficially the official package manager in Intel Edison. For complete 5.1 section , please login to Edison from PuTTY using SSH. ( because a) you need internet connection and b) you need to open multiple shells to install and validate)

Step 1: Driver Check

Web camera needs Web Cam drivers. First check if you have uvc camera driver installed or not by following command

find /lib/modules/* -name 'uvc'

If it shows an installed driver like following figure, then you are all set.

Figure 5.1 UVC driver detection in Edison

Step 2: Opkg Update

update the opkg first.

vi /etc/opkg/base-feeds.conf

and add following lines with the configuration file

src/gz all http://repo.opkg.net/edison/repo/all src/gz edison http://repo.opkg.net/edison/repo/edison src/gz core2-32 http://repo.opkg.net/edison/repo/core2-32

Press Esc : wq to save and come out of the Editor

now

opkg update

Step 4: Driver Installation ( if Step 1 fails, i.e. no driver is installed)

Now download and install the uvc driver using opkg

opkg install kernel-module-uvcvideo

You may need to reboot your Edison board after uvc driver installation.

Step 5: Web Camera detection

Plug in your camera and reboot the device ( camera driver is loaded only at the time of boot. So, either you connect the camera and boot or if the device is already booted, use reboot).

Now give following command.

lsmod | grep uvc

If the result shows uvc module then your camera is detected and it's driver is loaded by Edison

When web cam is installed and plugged in, Edison creates a node in /dev. So, check that video0 node is created by following command