Introduction

This article demonstrates how to build an offline browser using Visual C++. It uses the following APIs:

- WinInet - Downloads the HTML of the web page.

- URL Moniker - Downloads all the resources (e.g., images and style sheets) to the local folder.

- MSHTML - Traverses the HTML DOM (Document Object Model) tree to get the list of all the resources that need to be downloaded.

Below is a brief description of the algorithm:

- Download the HTML of the web page, e.g., www.google.com, and save it to the hard disk in a specified folder.

- Traverse the HTML document and look for the src attribute in every tag; its value is the URL of a resource. If the URL is absolute, e.g., www.google.com/images/logo.gif, it is used as is. If the URL is relative, e.g., images/logo.gif, make it absolute using the host name, i.e., <Host Name>/<path>, e.g., www.google.com/images/logo.gif.

- Update the src attribute to reflect any change in the URL of the resource. Relative URLs remain the same, but absolute URLs are changed to relative local paths.

- Save the original value of the src attribute to srcdump, so that the original src is still available for future reference.
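
The URL handling in the steps above can be sketched in portable C++ as follows. This is only an illustration, not the article's implementation: IsAbsoluteUrl() and MakeAbsoluteUrl() are hypothetical helper names, and the sketch follows the simplified <Host Name>/<path> rule described above rather than full URL resolution against the page's own path.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: treats a URL as absolute if it carries a scheme
// ("http://...") or starts with "www.", as in the article's examples.
bool IsAbsoluteUrl(const std::string& url)
{
    return url.find("://") != std::string::npos ||
           url.compare(0, 4, "www.") == 0;
}

// Hypothetical helper: builds <Host Name>/<path> for a relative URL and
// returns absolute URLs unchanged.
std::string MakeAbsoluteUrl(const std::string& host, const std::string& url)
{
    if (IsAbsoluteUrl(url))
        return url;

    std::string result = host;
    if (!result.empty() && result.back() == '/')
        result.pop_back();              // avoid a double slash
    if (url.empty() || url[0] != '/')
        result += '/';                  // make sure exactly one slash separates them
    return result + url;
}

// Small self-check with the URLs used in the article.
void DemoMakeAbsoluteUrl()
{
    assert(MakeAbsoluteUrl("www.google.com", "images/logo.gif")
           == "www.google.com/images/logo.gif");
    assert(MakeAbsoluteUrl("www.google.com", "/images/logo.gif")
           == "www.google.com/images/logo.gif");
    assert(MakeAbsoluteUrl("www.google.com", "www.google.com/images/logo.gif")
           == "www.google.com/images/logo.gif");
}
```

A relative URL such as ../images/logo.gif would need proper path resolution, which this sketch deliberately leaves out.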

Background

I'd like to explain the scenario behind the development of this code. I was working on a module that records user interactions with web pages, and I needed to save a web page to the local hard drive without using the web browser's Save As option.

I searched a lot for code that does this, but didn't find any helpful material, so I decided to develop it myself. I am uploading it here because it may help others working on related problems, and to get feedback on any mistakes I made. I didn't use MFC, so that the code can be used in plain Win32 applications as well as in MFC applications.

Not to mention, it is my first ever article.

Using the code

Download HTML of the Web Page:

LoadHtml() works in two modes based on the value of the bDownload argument:

- If bDownload is true, it first downloads the HTML from the specified URL using the code snippet below, and then populates the Hostname and Port fields. This is the mode used in the sample usage at the end of the article.

- If bDownload is false, it assumes that the HTML has already been loaded using the SetHtml() function. It skips the code snippet below and just populates the Hostname and Port fields from the URL.

    HINTERNET hNet = InternetOpen("Offline Browser",
        INTERNET_OPEN_TYPE_PROXY, NULL, NULL, 0);
    if(hNet == NULL)
        return;

    HINTERNET hFile = InternetOpenUrl(hNet, sUrl.c_str(), NULL, 0, 0, 0);
    if(hFile == NULL)
    {
        InternetCloseHandle(hNet); // don't leak the session handle
        return;
    }

    const int MAX_BUFFER_SIZE = 65536;
    char szBuffer[MAX_BUFFER_SIZE];
    while(true)
    {
        unsigned long nSize = 0;
        BOOL bRet = InternetReadFile(hFile, szBuffer, MAX_BUFFER_SIZE, &nSize);
        if(!bRet || nSize == 0)
            break; // error or end of data
        // Append by length so that embedded '\0' bytes don't truncate the HTML
        m_sHtml.append(szBuffer, nSize);
    }

    InternetCloseHandle(hFile);
    InternetCloseHandle(hNet);
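
In both modes, LoadHtml() populates the Hostname and Port fields from the URL. The article does not show that code, so the following is only a portable sketch of how such a split could look. UrlParts and SplitUrl() are hypothetical names, the default ports (80, or 443 for https) are an assumption, and the sketch ignores user info and IPv6 literals. WinInet also provides InternetCrackUrl() for this purpose.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>

// Hypothetical container for the fields the article calls Scheme, Hostname and Port.
struct UrlParts
{
    std::string scheme; // includes "://", empty if the URL has no scheme
    std::string host;
    int port;
};

// Hypothetical helper: splits "scheme://host:port/path" into its parts.
UrlParts SplitUrl(const std::string& url)
{
    UrlParts parts;
    parts.port = 80;                       // assumed default

    std::string rest = url;
    std::string::size_type pos = rest.find("://");
    if (pos != std::string::npos)
    {
        parts.scheme = rest.substr(0, pos + 3);
        rest = rest.substr(pos + 3);
        if (parts.scheme == "https://")
            parts.port = 443;              // assumed default for https
    }

    // "host[:port]" ends at the first '/', or at the end of the string
    std::string authority = rest.substr(0, rest.find('/'));
    pos = authority.find(':');
    if (pos != std::string::npos)
    {
        parts.host = authority.substr(0, pos);
        parts.port = std::atoi(authority.c_str() + pos + 1);
    }
    else
    {
        parts.host = authority;
    }
    return parts;
}

// Small self-check showing the expected split.
void DemoSplitUrl()
{
    UrlParts p = SplitUrl("http://www.google.com:8080/images/logo.gif");
    assert(p.scheme == "http://");
    assert(p.host == "www.google.com");
    assert(p.port == 8080);
}
```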

Load HTML into MSHTML Document Interface:

BrowseOffline() assumes that the HTML is already loaded. First, it constructs the HTML DOM tree by loading the HTML into an MSHTML DOMDocument interface using the following code:

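    SAFEARRAY* psa = SafeArrayCreateVector(VT_VARIANT, 0, 1);
    VARIANT *param;
    bstr_t bsData = m_sHtml.c_str();
    hr = SafeArrayAccessData(psa, (LPVOID*)&param);
    param->vt = VT_BSTR;
    // The array owns its elements, so give it its own copy of the string
    param->bstrVal = bsData.copy();
    hr = SafeArrayUnaccessData(psa);
    hr = pDoc->write(psa);
    hr = pDoc->close();
    SafeArrayDestroy(psa); // also frees the BSTR stored in the array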

Traverse DOM Tree and download all the resources:

Once the DOM tree is constructed, it's time to traverse it and look for the resources that need to be downloaded.

Currently, I only look for the src attribute in all the elements. Once a src attribute is found, the resource it points to is downloaded and saved to the local folder.

    MSHTML::IHTMLElementCollectionPtr pCollection = pDoc->all;
    for(long a=0;a<pCollection->length;a++)
    {
        std::string sValue;
        MSHTML::IHTMLElementPtr pElem = pCollection->item( a );
        if(GetAttribute(pElem, L"src", sValue))
        {
            if(!IsAbsolute(sValue))
            {
                ..........
            }
            else
            {
                ..........
            }
        }
    }

Download Resource with Relative Path

If the src attribute has a relative URL of the resource, the following actions are taken:

- Construct the absolute URL from the relative URL using the Hostname and Port fields.

- Download the resource and save it to the matching subfolder of the local folder.

- Update the src attribute only if required. In the code below, src is changed only when the download fails, in which case it is pointed at the remote resource.

- Save the value of the original src attribute as srcdump for future reference.

    if(!IsAbsolute(sValue))
    {
        // Drop the leading '/' so that the path is relative to the local folder
        if(sValue[0] == '/')
            sValue = sValue.substr(1, sValue.length()-1);
        CreateDirectories(sValue, m_sDir);
        if(!DownloadResource(sValue, sValue))
        {
            // Download failed: point src at the remote resource instead
            std::string sTemp = m_sScheme + m_sHost;
            sTemp += sValue;
            if(sTemp[0] == '/')
                sTemp = sTemp.substr(1, sTemp.length()-1);
            SetAttribute(pElem, L"src", sTemp);
            SetAttribute(pElem, L"srcdump", sValue);
        }
        else
        {
            SetAttribute(pElem, L"srcdump", sValue);
        }
    }

Download Resource with Absolute Path

If the src attribute has an absolute URL of the resource, the following actions are taken:

- Download the resource and save it to the matching subfolder of the local folder.

- Update the src attribute to the relative local path.

- Save the value of the original src attribute as srcdump for future reference.

    else
    {
        // Strip the host name to get the path used for the local copy
        std::string sTemp;
        sTemp = TrimHostName(sValue);
        CreateDirectories(sTemp, m_sDir);
        if(DownloadResource(sTemp, sTemp))
        {
            if(sTemp[0] == '/')
                sTemp = sTemp.substr(1, sTemp.length()-1);
            SetAttribute(pElem, L"src", sTemp);
            SetAttribute(pElem, L"srcdump", sValue);
        }
    }
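
The body of TrimHostName() is not shown in the article. The following portable sketch shows one way such a helper could turn an absolute URL into a path usable for the local copy. TrimHostNameSketch() and ToLocalRelativePath() are hypothetical names, and keeping the leading slash in the first step is an assumption based on the code above, which strips it afterwards.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for TrimHostName(): removes the scheme and host,
// keeping the path with its leading '/'. Returns an empty string if the
// URL has no path.
std::string TrimHostNameSketch(const std::string& url)
{
    std::string::size_type start = 0;
    std::string::size_type pos = url.find("://");
    if (pos != std::string::npos)
        start = pos + 3;                // skip past the scheme

    pos = url.find('/', start);         // first slash after the host
    if (pos == std::string::npos)
        return std::string();
    return url.substr(pos);
}

// Hypothetical helper: drops the leading '/' so the path is relative to
// the folder where the page is saved.
std::string ToLocalRelativePath(const std::string& path)
{
    if (!path.empty() && path[0] == '/')
        return path.substr(1);
    return path;
}

// Small self-check with the article's example URL.
void DemoTrimHostName()
{
    std::string path = TrimHostNameSketch("http://www.google.com/images/logo.gif");
    assert(path == "/images/logo.gif");
    assert(ToLocalRelativePath(path) == "images/logo.gif");
}
```

Query strings and fragments are left in the path here; a real implementation would probably need to strip or encode them before using the result as a file name.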

Save updated HTML

The original HTML changes because the src values are updated and the srcdump attribute is added. The updated HTML is saved with the name [GUID].html, where GUID is a Globally Unique Identifier generated using CoCreateGuid(). This ensures that it doesn't overwrite any previously saved web site in the same folder.

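    MSHTML::IHTMLDocument3Ptr pDoc3 = pDoc;
    MSHTML::IHTMLElementPtr pDocElem;
    pDoc3->get_documentElement(&pDocElem);
    BSTR bstrHtml = NULL;
    pDocElem->get_outerHTML(&bstrHtml);
    // Attach (don't copy) so that the BSTR is freed automatically,
    // then convert it to a narrow string
    bstr_t bsHtml(bstrHtml, false);
    std::string sNewHtml(bsHtml.length() ? (const char*)bsHtml : "");
    SaveHtml(sNewHtml);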

Download Resources

Once we have the absolute URL of the resource, it is straightforward to download it and save it to an appropriate local folder.

    // URLDownloadToFile() returns S_OK when the download succeeds
    return URLDownloadToFile(NULL, sTemp.c_str(), sTemp2.c_str(), 0, NULL) == S_OK;

Directory Structure of the Web Site

I've tried to maintain the same directory structure in the local folder as on the website. For example, downloading the resource images/logo.gif first creates a folder named images inside the directory specified by the user and then downloads logo.gif into that folder.

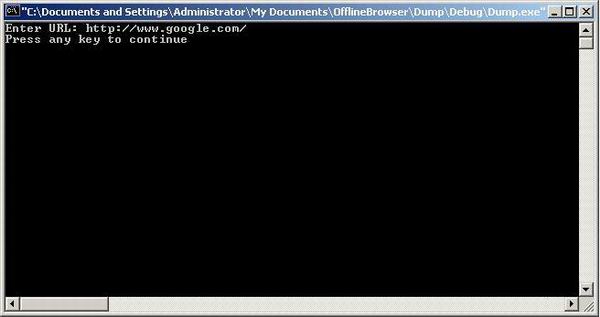
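
The directory mirroring described above can be sketched with the C++17 std::filesystem library. This is not the article's CreateDirectories() function, whose body is not shown. PrepareLocalPath() is a hypothetical name, and the sketch does no sanitizing of ".." segments, which a real downloader should reject.

```cpp
#include <cassert>
#include <filesystem>
#include <string>

namespace fs = std::filesystem;

// Hypothetical helper: creates the folders needed for 'resource' under
// 'baseDir' and returns the full local path where the file should go.
fs::path PrepareLocalPath(const fs::path& baseDir, const std::string& resource)
{
    // relative_path() drops a leading '/' so the result stays under baseDir
    fs::path target = baseDir / fs::path(resource).relative_path();
    if (!target.parent_path().empty())
        fs::create_directories(target.parent_path());
    return target;
}

// Usage example: mirrors images/logo.gif under a temporary folder,
// checks the result, and cleans up again.
void DemoPrepareLocalPath()
{
    fs::path base = fs::temp_directory_path() / "offline_browser_sketch";
    fs::path local = PrepareLocalPath(base, "images/logo.gif");
    assert(fs::is_directory(base / "images"));
    assert(local == base / "images" / "logo.gif");
    fs::remove_all(base);
}
```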

Sample Usage

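    COfflineBrowser obj;
    char szUrl[1024];
    printf("Enter URL: ");
    // fgets() bounds the read; gets() can overflow the buffer
    if(fgets(szUrl, sizeof(szUrl), stdin) != NULL)
    {
        szUrl[strcspn(szUrl, "\r\n")] = '\0'; // strip the trailing newline (needs <string.h>)
        obj.SetDir("c:\\MyTemp\\");
        obj.LoadHtml(szUrl, true);
        obj.BrowseOffline();
    }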
