Introduction

This article was inspired by:

Both of these articles have inspired me to do some benchmarking of the three forms of function invocation offered in C#:

- Direct call
- Via a delegate
- Via reflection
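To make the three forms concrete, here is a single method called each way. This is an illustrative sketch: Math.Max is only a stand-in and is not one of the benchmarked methods. The difference lies in when the target is bound. A direct call is bound at compile time. A delegate is bound once, when it is created. A reflective call is looked up by name at run time, and its arguments and return value travel as `object`, so value types are boxed.

```csharp
using System;
using System.Reflection;

// Direct call: the compiler binds Math.Max(int, int) at compile time.
int direct = Math.Max(3, 7);

// Delegate: the method is bound once when the delegate is created,
// then invoked through the delegate.
Func<int, int, int> maxDelegate = Math.Max;
int viaDelegate = maxDelegate(3, 7);

// Reflection: the method is found by name and parameter types at run time;
// arguments go in as an object[] (boxed ints) and the result comes back as object.
MethodInfo maxMethod = typeof(Math).GetMethod("Max", new[] { typeof(int), typeof(int) });
int viaReflection = (int)maxMethod.Invoke(null, new object[] { 3, 7 });

Console.WriteLine($"{direct} {viaDelegate} {viaReflection}"); // 7 7 7
```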

I am in the prototyping stages of an Application Automation Layer (AAL), described in "The Application Automation Layer: Introduction And Design" and "The Application Automation Layer - Design And Implementation of The Bootstrap Loader And The Component Manager". Because the AAL currently relies heavily on method invocation via reflection, I thought I should take the advice of both of these articles and look at the performance of my current implementation.

The results are rather astonishing.

Benchmarking C#

Didn't I read somewhere on CP that the Microsoft license agreement specifically says "thou shalt not benchmark .NET!"? Well, that's yet another commandment I've broken.

I decided to write a really simple benchmarking program, not to tease out minor differences between the three types of function invocation, but to find out whether there are any large ones. The benchmark program compares:

Benchmark Matrix

| Type | Direct | Delegate | Reflection |
|---|---|---|---|
| Static, no parameters | ✓ | ✓ | ✓ |
| Static, with parameters | ✓ | ✓ | ✓ |
| Instance, no parameters | ✓ | ✓ | ✓ |
| Instance, with parameters | ✓ | ✓ | ✓ |
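For reflection, the four rows of the matrix differ mainly in what must be passed to `MethodInfo.Invoke` on every call. Static methods take `null` as the target and instance methods take the receiver. Parameterless methods take `null` for the arguments, and methods with parameters take a freshly packed `object[]`. A delegate, by contrast, captures any receiver once, when it is created. The sketch below uses BCL methods (Guid.NewGuid, Math.Pow, string.ToUpperInvariant, string.Substring) as stand-ins for the benchmark's counter-increment methods, which aren't reproduced here.

```csharp
using System;
using System.Reflection;

// Static, no parameters: no target object, no argument array.
MethodInfo newGuid = typeof(Guid).GetMethod("NewGuid", Type.EmptyTypes);
Guid id = (Guid)newGuid.Invoke(null, null);

// Static, with parameters: no target object; arguments boxed into an object[].
MethodInfo pow = typeof(Math).GetMethod("Pow", new[] { typeof(double), typeof(double) });
double power = (double)pow.Invoke(null, new object[] { 2.0, 10.0 });

// Instance, no parameters: the receiver is passed as Invoke's first argument.
MethodInfo upper = typeof(string).GetMethod("ToUpperInvariant", Type.EmptyTypes);
string shout = (string)upper.Invoke("abc", null);

// Instance, with parameters: receiver plus an object[] of boxed arguments.
MethodInfo substring = typeof(string).GetMethod("Substring", new[] { typeof(int), typeof(int) });
string prefix = (string)substring.Invoke("benchmark", new object[] { 0, 5 });

// For comparison, an instance delegate captures its receiver once, at creation.
Func<int, int, string> sub = "benchmark".Substring;

Console.WriteLine($"{id != Guid.Empty} {power} {shout} {prefix} {sub(0, 5)}"); // True 1024 ABC bench bench
```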

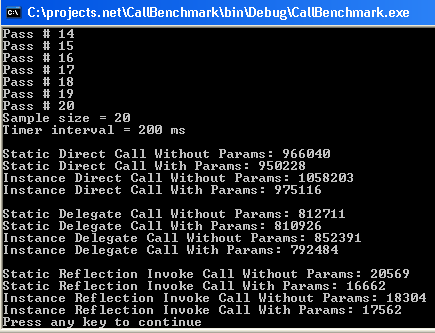

The results are as follows:

You will notice that reflection is approximately 50 times slower than direct calls. This means that I am going to have to seriously reconsider my implementation in the AAL!

Some Code

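The program benchmarks twelve different types of invocation: the four cases in the matrix, each called directly, through a delegate, and through reflection. The methods under test all look basically like this: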

```csharp
public static void StaticDirectCallWithoutParams()
{
    ++count;   // the only work done by each method under test
}
```

Each method simply increments a counter.

At the beginning of the program, the delegates and the reflection `MethodInfo` objects are initialized, along with a couple of hard-coded values that control how many times the tests are run and how many milliseconds each run spends calling the function under test:

```csharp
CallBenchmark cb = new CallBenchmark();

// Delegates bound to the static and instance methods under test
SNPCall snpCall = new SNPCall(StaticDelegateWithoutParams);
SPCall spCall = new SPCall(StaticDelegateWithParams);
INPCall inpCall = new INPCall(cb.InstanceDelegateWithoutParams);
IPCall ipCall = new IPCall(cb.InstanceDelegateWithParams);

// System.Reflection.MethodInfo objects, resolved once up front
// (GetMethodInfo is a helper defined elsewhere in the program)
MethodInfo snpMI = GetMethodInfo("CallBenchmark.exe/CallBenchmark.CallBenchmark/StaticInvokeWithoutParams");
MethodInfo spMI = GetMethodInfo("CallBenchmark.exe/CallBenchmark.CallBenchmark/StaticInvokeWithParams");
MethodInfo inpMI = GetMethodInfo("CallBenchmark.exe/CallBenchmark.CallBenchmark/InstanceInvokeWithoutParams");
MethodInfo ipMI = GetMethodInfo("CallBenchmark.exe/CallBenchmark.CallBenchmark/InstanceInvokeWithParams");

int sampleSize = 20;      // number of times the tests are run
int timerInterval = 200;  // milliseconds spent calling the function under test
```
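`GetMethodInfo` itself isn't listed. Judging only from the calls above, it takes an "assembly/type/method" path. The following is a hypothetical reimplementation of that idea, not the author's code. It searches the already-loaded assemblies by module file name and looks the method up once, before any timing starts. Guid.NewGuid stands in for the benchmark methods because it has no overloads, so a lookup by name alone is unambiguous.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Hypothetical helper: resolves "AssemblyFile/Namespace.Type/Method" to a MethodInfo.
static MethodInfo GetMethodInfo(string path)
{
    string[] parts = path.Split('/');
    if (parts.Length != 3)
        throw new ArgumentException("Expected 'assembly/type/method'.", nameof(path));

    // Find an already-loaded assembly whose main module file name matches.
    Assembly asm = AppDomain.CurrentDomain.GetAssemblies()
        .First(a => string.Equals(a.ManifestModule.Name, parts[0], StringComparison.OrdinalIgnoreCase));

    Type type = asm.GetType(parts[1], throwOnError: true);
    return type.GetMethod(parts[2]);   // throws AmbiguousMatchException if the name is overloaded
}

// Resolve once, outside any timed loop.
string coreLib = typeof(Guid).Assembly.ManifestModule.Name;
MethodInfo newGuidMI = GetMethodInfo($"{coreLib}/System.Guid/NewGuid");
Console.WriteLine(newGuidMI.Name); // NewGuid
```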

A timer is set up to stop the test:

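```csharp
// System.Timers.Timer: raises Elapsed once per Start() because AutoReset is false
Timer timer = new Timer(timerInterval);
timer.AutoReset = false;
timer.Stop();
timer.Elapsed += new ElapsedEventHandler(OnTimerEvent);

// ...

static void OnTimerEvent(object src, ElapsedEventArgs e)
{
    done = true;   // tells the running test loop to stop
}
```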

And each test looks something like this:

```csharp
++n;
count = 0;
done = false;
timer.Start();
while (!done)
{
    StaticDirectCallWithParams(1, 2, 3);
}
benchmarks[n] += count;   // accumulate this run's call count for test n
```

Rocket science, isn't it?
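For anyone who wants to try this, here is a self-contained sketch of the whole measurement. It assumes Math.Max as a stand-in for the article's counter-increment methods and compares only the static-with-parameters row. It follows the structure described above: a one-shot `System.Timers.Timer`, `sampleSize` runs of `timerInterval` ms each, and counts accumulated per test. It differs in two places. It counts iterations in the loop rather than inside the called method. It also reads the stop flag with `Volatile.Read`, because `Elapsed` is raised on a thread-pool thread. The declaration of `done` isn't shown above, so whether the original needed this is unknown.

```csharp
using System;
using System.Reflection;
using System.Threading;

const int sampleSize = 20;     // runs per invocation kind (the article's value)
const int timerInterval = 200; // milliseconds per run (the article's value)

// Stand-in target: Math.Max instead of the article's counter-increment methods.
Func<int, int, int> maxDelegate = Math.Max;
MethodInfo maxMethod = typeof(Math).GetMethod("Max", new[] { typeof(int), typeof(int) });
object[] invokeArgs = { 1, 2 };   // boxed once, outside the timed loops

string[] names = { "Direct", "Delegate", "Reflection" };
double[] averages = new double[names.Length];   // average calls per interval
int done = 0;    // set to 1 by the timer's Elapsed handler
long sink = 0;   // consumes results so the calls are not optimized away

for (int run = 0; run < sampleSize; run++)
{
    for (int kind = 0; kind < names.Length; kind++)
    {
        long count = 0;
        Volatile.Write(ref done, 0);
        using var timer = new System.Timers.Timer(timerInterval) { AutoReset = false };
        // Elapsed runs on a thread-pool thread, hence the Volatile write/read pair.
        timer.Elapsed += (sender, e) => Volatile.Write(ref done, 1);
        timer.Start();

        switch (kind)
        {
            case 0:
                while (Volatile.Read(ref done) == 0) { sink += Math.Max(1, 2); ++count; }
                break;
            case 1:
                while (Volatile.Read(ref done) == 0) { sink += maxDelegate(1, 2); ++count; }
                break;
            default:
                while (Volatile.Read(ref done) == 0) { sink += (int)maxMethod.Invoke(null, invokeArgs); ++count; }
                break;
        }
        averages[kind] += (double)count / sampleSize;
    }
}

for (int kind = 0; kind < names.Length; kind++)
    Console.WriteLine($"{names[kind],-10} {averages[kind],14:N0} calls per {timerInterval} ms");
Console.WriteLine($"Direct/reflection ratio: {averages[0] / averages[2]:F1} (checksum {sink})");
```

The JIT may inline a small direct call such as Math.Max, so the "Direct" figure partly measures loop overhead. The printed ratio will vary by machine and runtime, so this sketch implies no particular value.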

Conclusion

This little test clearly shows the performance hit taken by invoking methods through reflection. Even effects like CPU caching wouldn't account for a difference this large (I think!). Obviously, I need to rethink how my architecture is implemented.
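One common option when rethinking a reflection-heavy design is to keep the by-name lookup but perform it only once. The resulting `MethodInfo` is then bound to a strongly typed delegate with `Delegate.CreateDelegate`, so later calls are ordinary delegate invocations. This is not what the AAL currently does. The sketch below uses Math.Max and string.ToUpperInvariant as stand-ins for AAL methods.

```csharp
using System;
using System.Reflection;

// Resolve by name once, just as the reflection path does...
MethodInfo maxMethod = typeof(Math).GetMethod("Max", new[] { typeof(int), typeof(int) });
MethodInfo upperMethod = typeof(string).GetMethod("ToUpperInvariant", Type.EmptyTypes);

// ...then bind each MethodInfo to a strongly typed delegate a single time.
// Static method: no target object.
var max = (Func<int, int, int>)Delegate.CreateDelegate(typeof(Func<int, int, int>), maxMethod);
// Instance method: the receiver "abc" is bound as the delegate's target.
var upper = (Func<string>)Delegate.CreateDelegate(typeof(Func<string>), "abc", upperMethod);

// Subsequent calls go through the delegates: no object[] allocation,
// no boxing of arguments or return values, no per-call Invoke argument checks.
int result = max(3, 7);
string shout = upper();
Console.WriteLine($"{result} {shout}"); // 7 ABC
```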

