Introduction

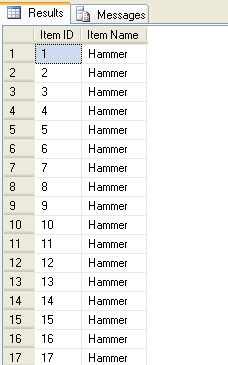

This article (my first) describes an algorithm for generating a large amount of test data very quickly with a SQL query. Each column can be static, such as “Item Name”, or incremental, such as “Item ID”, as shown in the image above.

Background

One of my tasks on a project involved generating a test table with 103,680,000 records. The conventional method of data generation would have taken a month, so a faster method of data insertion was required. The new method took only 5 hours.

Using the code

Conventional method – Sequential INSERT

The conventional way to generate a list of numbers from 1 to 100,000 is a loop that issues one INSERT statement per row:

CREATE TABLE #tempTable([Item ID] [bigint], [Item Name] nvarchar(30))

-- Insert one row per iteration, from 1 up to and including 100,000
DECLARE @counter int
SET @counter = 1
WHILE (@counter <= 100000)
BEGIN
    INSERT INTO #tempTable VALUES (@counter, 'Hammer')
    SET @counter = @counter + 1
END

SELECT * FROM #tempTable
DROP TABLE #tempTable

Let's call this method of data generation “Sequential INSERT”.

New method – Exponential INSERT

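The new method copies the existing rows and appends the copy as new rows, offsetting each copied [Item ID] by the current row count so that the IDs stay unique. Each pass therefore doubles the table, and the loop repeats until at least the desired number of rows exists.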

Here is the code for the exponential INSERT:

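CREATE TABLE #tempTable([Item ID] [bigint], [Item Name] nvarchar(30))

-- Seed the table with a single row
INSERT INTO #tempTable VALUES (1, 'Hammer')

-- Each pass appends a copy of every existing row, shifting [Item ID]
-- by the current row count, until the table holds at least 100,000 rows
WHILE((SELECT COUNT(*) FROM #tempTable) < 100000)
BEGIN
    INSERT INTO #tempTable ([Item ID], [Item Name])
    (SELECT [Item ID] + (SELECT COUNT(*) FROM #tempTable),
            'Hammer' FROM #tempTable)
END

SELECT * FROM #tempTable
DROP TABLE #tempTable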
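Because the table doubles on every pass, the loop above ends at the first power of two at or above the target, which is 131,072 rows rather than 100,000. If an exact row count matters, one possible variation is to cap the final pass with a WHERE clause. The sketch below is an illustration, not part of the original method: the variables @target and @rows are hypothetical names, and the row count is tracked in @rows using @@ROWCOUNT instead of being queried each time.

```sql
CREATE TABLE #tempTable([Item ID] [bigint], [Item Name] nvarchar(30))

INSERT INTO #tempTable VALUES (1, 'Hammer')

-- @target and @rows are illustrative names, not from the original article
DECLARE @target bigint
DECLARE @rows bigint
SET @target = 100000
SET @rows = 1   -- rows currently in the table

WHILE (@rows < @target)
BEGIN
    -- Copy every row, shifting the ID by the current row count,
    -- but keep only the copies whose new ID does not exceed the target
    INSERT INTO #tempTable ([Item ID], [Item Name])
    SELECT [Item ID] + @rows, 'Hammer'
    FROM #tempTable
    WHERE [Item ID] + @rows <= @target

    -- @@ROWCOUNT holds the number of rows the INSERT just added
    SET @rows = @rows + @@ROWCOUNT
END

SELECT COUNT(*) AS [Row Count] FROM #tempTable

DROP TABLE #tempTable
```

The first sixteen passes double the table to 65,536 rows as before; the final pass adds only the 34,464 rows needed to reach the target.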

Points of interest

The condition of the WHILE loop is a SELECT COUNT(*) query, which takes a long time to evaluate on a large table. A faster approach is to calculate in advance how many iterations are needed to generate the desired number of records. Starting from one row, n doublings yield 2^n rows; for 100,000 records the smallest sufficient n is 17, since 2^16 = 65,536 falls short and 2^17 = 131,072 does not. We can therefore rewrite the code to stop after the 17th iteration.
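For a different target, the iteration count can be worked out the same way before any data is inserted. The following is a minimal sketch that doubles an integer until it reaches the target; the variable names @target, @rows, and @iterations are illustrative assumptions.

```sql
-- Illustrative names; set @target to the desired number of records
DECLARE @target bigint
DECLARE @rows bigint
DECLARE @iterations int
SET @target = 100000
SET @rows = 1          -- the table starts with one seed row
SET @iterations = 0

-- Double the simulated row count until it reaches the target
WHILE (@rows < @target)
BEGIN
    SET @rows = @rows * 2
    SET @iterations = @iterations + 1
END

-- For a target of 100,000 this returns 17 iterations and 131,072 rows
SELECT @iterations AS [Iterations], @rows AS [Rows Generated]
```

Integer doubling is used here instead of a logarithm to avoid floating-point rounding when the target is an exact power of two.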

The sequential method took 4 seconds to count from 1 to 100,000; the exponential method took 2 seconds with the code below:

CREATE TABLE #tempTable([Item ID] [bigint], [Item Name] nvarchar(30))

-- Seed the table with a single row
INSERT INTO #tempTable VALUES (1, 'Hammer')

-- Double the table a fixed 17 times: 2^17 = 131,072 rows
DECLARE @counter int
SET @counter = 1
WHILE(@counter <= 17)
BEGIN
    INSERT INTO #tempTable ([Item ID], [Item Name])
    (SELECT [Item ID] + (SELECT COUNT(*) FROM #tempTable),
            'Hammer' FROM #tempTable)
    SET @counter = @counter + 1
END

SELECT * FROM #tempTable
DROP TABLE #tempTable

The technique is not limited to incrementing a number field; it can be applied to datetime fields as well.
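As an illustration of the datetime case, the sketch below offsets each copied row by the current row count in days using DATEADD. The table name #tempDates, the column [Item Date], the start date, and the ten iterations are all assumptions chosen for the example.

```sql
-- Hypothetical table and column names for the datetime variant
CREATE TABLE #tempDates([Item Date] datetime, [Item Name] nvarchar(30))

-- Seed row; the start date is an arbitrary example value
INSERT INTO #tempDates VALUES ('20240101', 'Hammer')

DECLARE @counter int
DECLARE @rows int
SET @counter = 1
SET @rows = 1   -- rows currently in the table

WHILE (@counter <= 10)
BEGIN
    -- Copy every row, shifting the date forward by the current row count in days
    INSERT INTO #tempDates ([Item Date], [Item Name])
    SELECT DATEADD(day, @rows, [Item Date]), 'Hammer'
    FROM #tempDates

    SET @rows = @rows * 2          -- the table has just doubled
    SET @counter = @counter + 1
END

SELECT * FROM #tempDates ORDER BY [Item Date]

DROP TABLE #tempDates
```

Ten iterations give 2^10 = 1,024 rows, one per consecutive day starting from the seed date. Swapping day for another datepart such as hour or minute changes the step size.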

History
