Here we change the dimensions of the video tag, load another model in our index.js file, draw on our canvas, and display our predictions.

In the previous article, we learned how to classify a person’s emotions in the browser using face-api.js and TensorFlow.js.

If you haven’t read that article yet, I recommend doing so first. We’ll assume you have some familiarity with face-api.js, and we’ll build on the code we wrote for emotion detection.

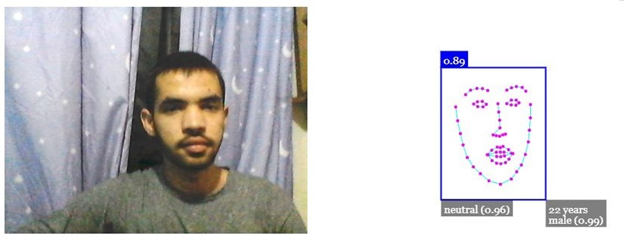

Gender and Age Detection

We’ve seen how easy it is to predict human facial expressions using face-api.js. But what else can we do with it? Let’s learn to predict someone’s gender and age.

We’re going to make a few changes to our previous code. In the HTML file, we change the dimensions of the video tag, since we need some extra space for the drawings to be visible:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8" />
  <script src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
  <script src="face-api.js"></script>
</head>
<body>
  <h1>Emotions, Age &amp; Gender Detection using face-api.js</h1>
  <video autoplay muted id="video" width="400" height="400" style="margin: auto;"></video>
  <div id="prediction">Loading</div>
  <script type="text/javascript" defer src="index.js"></script>
</body>
</html>
```

We also need to load one more model, the age and gender model, in our index.js file:

```javascript
faceapi.nets.ageGenderNet.loadFromUri('/models')
```
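The models are loaded with `Promise.all`, so one missing weights file (for example, if the age and gender model isn't in your `/models` folder) rejects the whole batch, and `startVideo` never runs. If you want to know *which* model failed, here is a minimal sketch. The `loadModels` helper is hypothetical and not part of face-api.js. It waits for every loader with `Promise.allSettled` and then reports the failures by name.

```javascript
// Hypothetical helper (not part of face-api.js): runs every loader, waits for
// all of them to settle, and rejects with the names of the ones that failed.
async function loadModels(loaders) {
  const names = Object.keys(loaders);
  const results = await Promise.allSettled(names.map((name) => loaders[name]()));
  const failed = names.filter((_, i) => results[i].status === 'rejected');
  if (failed.length > 0) {
    throw new Error(`Failed to load: ${failed.join(', ')}`);
  }
}

// Example wiring in index.js (browser only, where faceapi is a global):
// loadModels({
//   tinyFaceDetector: () => faceapi.nets.tinyFaceDetector.loadFromUri('/models'),
//   ageGenderNet: () => faceapi.nets.ageGenderNet.loadFromUri('/models'),
// }).then(startVideo).catch((err) => console.log(err.message));
```

Each loader is wrapped in a function so that the helper decides when loading starts and can pair each result with its name.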

Then chain `withAgeAndGender()` onto the detection call so that each result also includes age and gender predictions:

```javascript
const detections = await faceapi
  .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
  .withFaceLandmarks()
  .withFaceExpressions()
  .withAgeAndGender();
```
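After this chain runs, each result carries three new fields next to the expression data: `age` (a number), `gender` (`'male'` or `'female'`), and `genderProbability`. The page also still has the `#prediction` div from the HTML. As a sketch, the hypothetical `summarizePredictions` helper below turns the results into text for that div. It reads only those three fields, so you can try it with plain objects. The percentage format is an arbitrary choice.

```javascript
// Hypothetical helper: builds one line of text per detected face from the
// age, gender and genderProbability fields that withAgeAndGender() adds.
function summarizePredictions(results) {
  if (results.length === 0) {
    return 'No faces detected';
  }
  return results
    .map(({ age, gender, genderProbability }, i) =>
      `Face ${i + 1}: ${Math.round(age)} years, ${gender} (${Math.round(genderProbability * 100)}%)`)
    .join('\n');
}

// Possible use inside the detection loop (browser only):
// document.getElementById('prediction').innerText = summarizePredictions(detections);
```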

face-api.js also has some drawing capabilities. Let’s use them to draw on our canvas:

```javascript
const resizedDetections = faceapi.resizeResults(detections, displaySize);
faceapi.draw.drawDetections(canvas, resizedDetections);
faceapi.draw.drawFaceLandmarks(canvas, resizedDetections);
faceapi.draw.drawFaceExpressions(canvas, resizedDetections);
```
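We need `resizeResults` because detections come back in the coordinates of the input frame, while the canvas is sized to `displaySize`. Conceptually, each box is rescaled axis by axis. The standalone `scaleBox` function below illustrates that idea in simplified form. It is not face-api.js's actual implementation, and the 640×480 frame size in the example is an assumption about the camera.

```javascript
// Simplified illustration (not face-api.js code): rescales a detection box from
// the input frame's size to the display size. x and width use the horizontal
// ratio; y and height use the vertical ratio.
function scaleBox(box, inputSize, displaySize) {
  const scaleX = (v) => (v * displaySize.width) / inputSize.width;
  const scaleY = (v) => (v * displaySize.height) / inputSize.height;
  return {
    x: scaleX(box.x),
    y: scaleY(box.y),
    width: scaleX(box.width),
    height: scaleY(box.height),
  };
}

// A face found at (100, 60) with size 200x240 in an assumed 640x480 frame,
// mapped onto the 400x400 canvas:
// scaleBox({ x: 100, y: 60, width: 200, height: 240 },
//          { width: 640, height: 480 }, { width: 400, height: 400 })
// returns { x: 62.5, y: 50, width: 125, height: 200 }
```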

Now we can display our predictions by drawing each face’s estimated age and gender next to it:

```javascript
resizedDetections.forEach(result => {
  const { age, gender, genderProbability } = result;
  new faceapi.draw.DrawTextField(
    [
      `${faceapi.round(age, 0)} years`,
      `${gender} (${faceapi.round(genderProbability)})`
    ],
    result.detection.box.bottomRight
  ).draw(canvas);
});
```
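Each label is a `DrawTextField` with two lines of text. It is anchored at the bottom-right corner of the resized face box, that is, at `(x + width, y + height)`. The hypothetical `ageGenderLabel` function below computes the same two pieces of data without drawing anything. For simplicity it takes the box directly rather than `result.detection.box`. It uses `Math.round` and `toFixed` as stand-ins for `faceapi.round`, so the last digit may differ slightly from what face-api.js displays.

```javascript
// Hypothetical helper: computes the text lines and the anchor point that the
// DrawTextField call receives, using plain JavaScript instead of faceapi.
function ageGenderLabel({ age, gender, genderProbability, box }) {
  return {
    lines: [
      `${Math.round(age)} years`,
      `${gender} (${genderProbability.toFixed(2)})`,
    ],
    // Bottom-right corner of the face box, where the label is anchored.
    anchor: { x: box.x + box.width, y: box.y + box.height },
  };
}
```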

Here’s the final version of the index.js file:

```javascript
const video = document.getElementById('video');

// Load every model the detection chain depends on, then start the camera.
Promise.all([
  faceapi.nets.tinyFaceDetector.loadFromUri('/models'),
  faceapi.nets.faceLandmark68Net.loadFromUri('/models'),
  faceapi.nets.faceRecognitionNet.loadFromUri('/models'),
  faceapi.nets.faceExpressionNet.loadFromUri('/models'),
  faceapi.nets.ageGenderNet.loadFromUri('/models')
]).then(startVideo);

function startVideo() {
  // navigator.mediaDevices.getUserMedia is the current, promise-based camera API;
  // the prefixed navigator.getUserMedia variants are deprecated.
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    document.body.innerText = 'getUserMedia not supported';
    console.log('getUserMedia not supported');
    return;
  }
  navigator.mediaDevices.getUserMedia({ video: true })
    .then(stream => {
      video.srcObject = stream;
      video.onloadedmetadata = () => video.play();
    })
    .catch(err => console.log(err.name));
}

video.addEventListener('play', () => {
  // Create a canvas the size of the video element and add it to the page.
  const canvas = faceapi.createCanvasFromMedia(video);
  document.body.append(canvas);
  const displaySize = { width: video.width, height: video.height };
  faceapi.matchDimensions(canvas, displaySize);

  setInterval(async () => {
    const detections = await faceapi
      .detectAllFaces(video, new faceapi.TinyFaceDetectorOptions())
      .withFaceLandmarks()
      .withFaceExpressions()
      .withAgeAndGender();
    // Rescale results from the input frame's dimensions to the displayed size.
    const resizedDetections = faceapi.resizeResults(detections, displaySize);

    // Clear the previous frame's drawings, then draw the new ones.
    canvas.getContext('2d').clearRect(0, 0, canvas.width, canvas.height);
    faceapi.draw.drawDetections(canvas, resizedDetections);
    faceapi.draw.drawFaceLandmarks(canvas, resizedDetections);
    faceapi.draw.drawFaceExpressions(canvas, resizedDetections);

    // Label each face with its estimated age and gender.
    resizedDetections.forEach(result => {
      const { age, gender, genderProbability } = result;
      new faceapi.draw.DrawTextField(
        [
          `${faceapi.round(age, 0)} years`,
          `${gender} (${faceapi.round(genderProbability)})`
        ],
        result.detection.box.bottomRight
      ).draw(canvas);
    });
  }, 100);
});
```
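One thing to watch in this loop: `setInterval` fires every 100 ms whether or not the previous detection has finished. On a slow machine, several detections can therefore run at once. Here is a minimal sketch of one way to avoid that, assuming you are happy to skip a tick while work is still in flight. The wrapper `skipWhileBusy` is hypothetical.

```javascript
// Hypothetical wrapper: returns a function that starts `task` only if the
// previous call has finished; calls made while it is still running are skipped.
function skipWhileBusy(task) {
  let busy = false;
  return async function (...args) {
    if (busy) return undefined; // previous run still in flight: skip this tick
    busy = true;
    try {
      return await task(...args);
    } finally {
      busy = false; // allow the next tick to run, even if the task threw
    }
  };
}

// In index.js this would wrap the existing callback (browser only):
// setInterval(skipWhileBusy(async () => { /* detect and draw as above */ }), 100);
```

A `setTimeout` chain that schedules the next detection only after the current one completes would work as well. Either way, at most one detection runs at a time.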

What’s Next?

This series of articles introduced you to TensorFlow.js and helped you get started with machine learning in the browser. We built a project that showed you how to start training your own computer vision AI right in the browser and make it recognize breeds of dogs, human facial expressions, age, and gender. While these are already impressive on their own, this series is only a starting point. There are endless possibilities for AI and ML in the browser. For example, one thing we didn’t do in the series is train an ML model offline and import it into the browser. Feel free to build on top of any of the examples or create something interesting of your own. Don’t forget to share your ideas!