Introduction

I am afraid to say that I am one of those people who get bored unless they are doing something. So now that I finally feel I have learnt the basics of WPF, it is time to turn my attention to other matters.

I have a long list of things that demand my attention, such as WCF, WF, and the CLR via C# (version 2) book, but I recently went for something (which I got, but turned down in the end) that required me to know a lot about threading. Whilst I consider myself pretty good with threading, I thought: yeah, I'm OK at threading, but I could always be better. So I have decided to dedicate myself to writing a series of articles on threading in .NET. This series will undoubtedly owe much to an excellent Visual Basic .NET Threading Handbook that I bought, which is nicely filling the MSDN gaps for me, and now for you.

I suspect this series will range from simple to medium to advanced, and it will cover a lot of material that is already in MSDN, but I hope to give it my own spin too.

I don't know the exact schedule, but it may end up being something like:

I guess the best way is to just crack on. One note though before we start, I will be using C# and Visual Studio 2008.

What I'm going to attempt to cover in this article will be:

I think that's quite enough for an article.

When a user starts an application, memory and a whole host of resources are allocated for the application. The physical separation of this memory and resources is called a process. An application may launch more than one process. It's important to note that applications and processes are not the same thing at all.

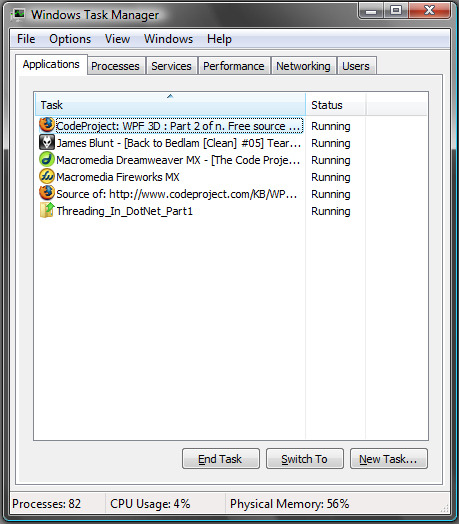

As you probably know, you can view the running processes/applications in Windows using Task Manager.

Here is how many applications I had running:

And here is the list of processes, where it can be seen that there are many more processes than applications running. An application may involve one or more processes, and each process has its own separate data, execution code, and system resources.

You might notice above that there is a reference to CPU usage. This is down to the fact that each process has an execution sequence used by the computer's CPU. This execution sequence is known as a thread. A thread is defined by the registers in use on the CPU, the stack used by the thread, and its context: the saved copy of those register values that lets the OS pause the thread and later resume it exactly where it left off. Each thread can also keep its own private data in Thread Local Storage (TLS).

Creating a process also creates its first thread, which starts running at the process's entry point (its first instruction). This thread is normally known as the primary or main thread, and its execution sequence is largely determined by how the user code is written.

Time Slices

With all these processes wanting a slice of CPU time, how does it get managed? Well, each thread is granted a slice of time (a quantum) during which it may use the CPU. This slice of time should never be considered a constant; it is affected by the OS and the CPU type.

Multithreaded Processes

What happens if we need our process to do more than one thing at the same time, like querying a Web Service and writing to a database? Luckily, a process is not limited to a single thread: we can spawn new threads in the current process, and the OS schedules each of them for CPU time just like the main thread. These extra threads are sometimes called worker threads. Worker threads share the process's memory space, which is isolated from all other processes on the system. The concept of spawning new threads within the same process is called free threading.

Some of you may, like me, have come from VB 6.0. There we had apartment threading, where each new thread was started in its own process and was granted its own data, so threads couldn't share data. Let's see some figures, shall we, as this is fairly important.

With this model, each time you want to do some background work, it happens in its own process, so it is known as Out Of Process.

With free threading, we can get the CPU to execute an additional thread using the same process data. This is much better than single-threaded apartments, as we get all the benefits of extra threads along with the ability to share the same process data.

Note: Only one thread actually runs on a given CPU (core) at any one time.

If we go back to the Task Manager and change the view to include the thread count, we can see something like:

This shows that each process can clearly have more than one thread. So how's all this scheduling and state information managed? We will consider that next.
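If you would rather see those numbers from code than from Task Manager, here is a minimal sketch using `System.Diagnostics.Process`. The namespace and class names (`ProcessThreadCounts`, `ProcessInfo`) are made up for illustration, and it assumes that a process which exits or refuses inspection mid-enumeration can simply be skipped.

```csharp
using System;
using System.ComponentModel;
using System.Diagnostics;

namespace ProcessThreadCounts
{
    public static class ProcessInfo
    {
        // Returns the number of OS threads currently in this process.
        public static int GetCurrentThreadCount()
        {
            using (Process current = Process.GetCurrentProcess())
            {
                return current.Threads.Count;
            }
        }

        static void Main()
        {
            // Roughly what Task Manager shows with the thread count column enabled.
            foreach (Process p in Process.GetProcesses())
            {
                try
                {
                    Console.WriteLine("{0,-30} Id {1,6}  Threads {2,4}",
                        p.ProcessName, p.Id, p.Threads.Count);
                }
                catch (InvalidOperationException)
                {
                    // The process exited between enumeration and inspection.
                }
                catch (Win32Exception)
                {
                    // The OS refused to give us details about this process.
                }
                finally
                {
                    p.Dispose();
                }
            }

            Console.WriteLine("This process has {0} threads", GetCurrentThreadCount());
        }
    }
}
```

Even a "single-threaded" console application will usually report more than one thread here, because the runtime starts helper threads of its own.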

When a thread's time slice has expired, it doesn't just stop and wait its turn. Recall that a CPU (core) can only run one thread at a time, so the current thread needs to be replaced with the next thread waiting for CPU time; this is called a context switch. Before that happens, the OS saves the current thread's context (the contents of the CPU registers it was using) so that the thread can carry on correctly when it is next scheduled. One of those saved registers is the program counter (instruction pointer), which tells the thread which instruction to execute next.

Interrupts

Processes don't need to know about each other to be scheduled correctly; that's really the job of the Operating System. The OS scheduler decides which thread runs next, and it relies on interrupts to regain control of the CPU. An interrupt is a mechanism that causes the normal execution flow to branch somewhere else in the computer's memory without the knowledge of the executing program.

Interrupts are a feature used in all but the simplest microprocessors to allow hardware devices to request attention. When an interrupt is received, a microprocessor will temporarily suspend execution of the code it was running and jump to a special program called an interrupt handler. The interrupt handler will typically service the device needing attention and then return to the previously executing code.

One of the interrupts in all modern computers is controlled by a timer, whose function is to demand attention at periodic intervals. The handler will typically bump some counters, see if anything interesting is supposed to happen, and if there's nothing interesting (yet), return. Under Windows, one of the 'interesting' things that can happen is the expiry of a thread's time slice. When that occurs, Windows saves the interrupted thread's context and forces execution to resume in a different thread.

The thread whose time slice has run out is moved to the end of the ready queue for its given priority (more on this later) to wait its turn again. The thread itself doesn't have to cooperate: nothing is inserted into its code, and the interrupt arrives from outside, so the thread never knows it was paused.

This is fine if the thread isn't done and needs to continue executing. But what happens if the thread decides it does not need any more CPU time just yet (maybe it is waiting for a resource), and so yields its time slice to another thread?

This is down to the programmer and the OS. The programmer does the yield (normally using the Sleep() method), which is a call into the OS. The OS saves the thread's context, just as it does when a time slice expires, and schedules another ready thread; the yielding thread gives up the rest of its quantum. With Sleep(0), the thread goes straight back to the end of the ready queue; with a longer sleep, it waits elsewhere until it is woken.
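Here is a minimal sketch of a voluntary yield. It assumes the common use of `Thread.Sleep(0)`, which gives the rest of the current time slice to another thread that is ready to run and returns immediately if there is none. The names (`YieldingDemo`, `Yielder`, `WaitUsingSleepZero`) are hypothetical.

```csharp
using System;
using System.Threading;

namespace YieldingDemo
{
    public static class Yielder
    {
        // volatile so the waiting loop re-reads the flag on every check.
        private static volatile bool ready;

        // Waits for another thread to set 'ready', yielding the rest of the
        // time slice on each check instead of spinning through it.
        // Returns how many times the waiting thread yielded.
        public static int WaitUsingSleepZero()
        {
            ready = false;
            Thread setter = new Thread(() =>
            {
                Thread.Sleep(50);   // pretend to do some work
                ready = true;
            });
            setter.Start();

            int yields = 0;
            while (!ready)
            {
                Thread.Sleep(0);    // give up the remainder of this time slice
                yields++;
            }

            setter.Join();
            return yields;
        }

        static void Main()
        {
            Console.WriteLine("Yielded {0} times while waiting", WaitUsingSleepZero());
        }
    }
}
```

From .NET Framework 4 onwards there is also `Thread.Yield()`, but `Thread.Sleep(0)` is available in the Visual Studio 2008 toolset used here.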

Thread Sleep and Clock Interrupts

As we just said, a thread may decide to yield its CPU time to wait for a resource, but this could be 10 or 20 minutes, so the programmer may choose to make the thread sleep. The thread's context is saved as before, but the thread doesn't go back to the runnable (ready) queue; it goes into a waiting state, which you can think of as a sleep queue. Threads in the sleep queue are woken with the help of the clock interrupt: on each tick, the handler checks whether any sleeping thread's wake-up time has passed, and when one has, that thread is moved back to the runnable queue.
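A quick way to see that sleeping is tied to the clock is to time it. This is a minimal sketch (the `SleepDemo`/`Sleeper` names are hypothetical) that measures how long `Thread.Sleep` actually keeps the thread off the CPU with a `Stopwatch`. The result is typically at least the requested time, rounded up towards the clock tick granularity (often around 15.6 ms on Windows by default), but treat exact figures as machine-dependent.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

namespace SleepDemo
{
    public static class Sleeper
    {
        // Puts the calling thread to sleep and reports how long it was
        // actually away, in milliseconds.
        public static long MeasureSleep(int milliseconds)
        {
            Stopwatch watch = Stopwatch.StartNew();
            Thread.Sleep(milliseconds); // thread waits until its wake-up time has passed
            watch.Stop();
            return watch.ElapsedMilliseconds;
        }

        static void Main()
        {
            Console.WriteLine("Asked for 200 ms, slept for {0} ms", MeasureSleep(200));
        }
    }
}
```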

Thread Abort / Thread Done

All things have an end. When a thread finishes or is programmatically aborted, the resources that belong to that thread alone, such as its stack and its TLS, are de-allocated. The data in the process remains (remember, it's shared between all the process's threads, and there could be more than one), and will only be de-allocated when the process itself is stopped.

So, we've talked a bit about scheduling, but what about data that belongs to a single thread? That is what Thread Local Storage (TLS) is for. Consider the following from MSDN:
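Rather than aborting a thread, the usual way to know it has ended is to wait for it. This minimal sketch (hypothetical names `ThreadDoneDemo`/`Lifetime`) uses `Thread.Join` to block until a worker's method returns, then checks `IsAlive` and `ThreadState`.

```csharp
using System;
using System.Threading;

namespace ThreadDoneDemo
{
    public static class Lifetime
    {
        // Starts a short-lived thread, waits for it to finish with Join,
        // then returns the state the runtime reports for it.
        public static ThreadState RunToCompletion()
        {
            Thread worker = new Thread(() => Thread.Sleep(100));
            worker.Start();

            worker.Join();   // block until the worker's method has returned

            // After Join returns, the worker is no longer alive.
            Console.WriteLine("IsAlive after Join: {0}", worker.IsAlive);
            return worker.ThreadState;
        }

        static void Main()
        {
            Console.WriteLine("Final state: {0}", RunToCompletion());
        }
    }
}
```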

"Threads use a local store memory mechanism to store thread-specific data. The Common Language Runtime allocates a multi-slot data store array to each process when it is created. The thread can allocate a data slot in the data store, store and retrieve a data value in the slot, and free the slot for reuse after the thread expires. Data slots are unique per thread. No other thread (not even a child thread) can get that data.

If the named slot does not exist, a new slot is allocated. Named data slots are public, and can be manipulated by anyone."

That's how in a nutshell. Let's see the MSDN example (blatant steal here):

```csharp
using System;
using System.Threading;

namespace TLSDataSlot
{
    class Program
    {
        static void Main()
        {
            Thread[] newThreads = new Thread[4];
            for (int i = 0; i < newThreads.Length; i++)
            {
                newThreads[i] = new Thread(new ThreadStart(Slot.SlotTest));
                newThreads[i].Start();
            }

            // Wait for all the workers, rather than having each worker
            // block on Console.ReadLine().
            foreach (Thread t in newThreads)
            {
                t.Join();
            }
            Console.ReadLine();
        }
    }

    class Slot
    {
        static Random randomGenerator = new Random();
        // Random is not thread-safe, so the threads share it under a lock.
        static readonly object randomLock = new object();

        public static void SlotTest()
        {
            int value;
            lock (randomLock)
            {
                value = randomGenerator.Next(1, 200);
            }

            // Store the value in this thread's copy of the "Random" slot.
            Thread.SetData(Thread.GetNamedDataSlot("Random"), value);

            Console.WriteLine("Data in thread_{0}'s data slot: {1,3}",
                Thread.CurrentThread.ManagedThreadId,
                Thread.GetData(Thread.GetNamedDataSlot("Random")));

            Thread.Sleep(1000);

            // The other threads have written their own values by now,
            // but this thread still sees its own.
            Console.WriteLine("Data in thread_{0}'s data slot is still: {1,3}",
                Thread.CurrentThread.ManagedThreadId,
                Thread.GetData(Thread.GetNamedDataSlot("Random")));

            Thread.Sleep(1000);

            Other o = new Other();
            o.ShowSlotData();
        }
    }

    public class Other
    {
        public void ShowSlotData()
        {
            // Code in another class, running on the same thread,
            // sees the same slot data.
            Console.WriteLine(
                "Other code displays data in thread_{0}'s data slot: {1,3}",
                Thread.CurrentThread.ManagedThreadId,
                Thread.GetData(Thread.GetNamedDataSlot("Random")));
        }
    }
}
```

This may produce the following:

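It can be seen that this uses three methods of the Thread class:

- GetNamedDataSlot: looks up a named slot (allocating it if it does not exist yet)
- SetData: sets the data in the specified slot within the current thread
- GetData: retrieves the data from the specified slot within the current thread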
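Named slots are public, so any code that knows the name can reach them. If you want a slot that only your own code can touch, `Thread.AllocateDataSlot` gives you an unnamed `LocalDataStoreSlot` instead, and `Thread.FreeNamedDataSlot` releases a named one. A minimal sketch, with hypothetical names (`UnnamedSlotDemo`, `SlotDemo`, `EachThreadSeesOwnValue`):

```csharp
using System;
using System.Threading;

namespace UnnamedSlotDemo
{
    public static class SlotDemo
    {
        // An unnamed slot: only code holding this reference can use it.
        private static readonly LocalDataStoreSlot slot = Thread.AllocateDataSlot();

        // Each worker stores its own managed thread id in the same slot,
        // sleeps so the others get a chance to write theirs, then reads it back.
        public static bool EachThreadSeesOwnValue(int threadCount)
        {
            bool allMatched = true;
            object gate = new object();
            Thread[] workers = new Thread[threadCount];

            for (int i = 0; i < workers.Length; i++)
            {
                workers[i] = new Thread(() =>
                {
                    int myId = Thread.CurrentThread.ManagedThreadId;
                    Thread.SetData(slot, myId);
                    Thread.Sleep(100);
                    int readBack = (int)Thread.GetData(slot);
                    Console.WriteLine("Thread {0} read back {1}", myId, readBack);
                    if (readBack != myId)
                    {
                        lock (gate) { allMatched = false; }
                    }
                });
                workers[i].Start();
            }

            foreach (Thread t in workers)
            {
                t.Join();
            }
            return allMatched;
        }

        static void Main()
        {
            Console.WriteLine("All threads saw their own data: {0}",
                EachThreadSeesOwnValue(4));

            // Named slots stay allocated until they are explicitly freed.
            Thread.SetData(Thread.GetNamedDataSlot("Random"), 42);
            Thread.FreeNamedDataSlot("Random");
        }
    }
}
```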

There is another way: we can mark a static field with the ThreadStaticAttribute, which means each thread gets its own copy of the field's value. Let's see the MSDN example (blatant steal here):

```csharp
using System;
using System.Threading;

namespace ThreadStatic
{
    class Program
    {
        static void Main(string[] args)
        {
            for (int i = 0; i < 3; i++)
            {
                Thread newThread = new Thread(ThreadData.ThreadStaticDemo);
                newThread.Start();
            }
        }
    }

    class ThreadData
    {
        [ThreadStaticAttribute]
        static int threadSpecificData;

        public static void ThreadStaticDemo()
        {
            threadSpecificData = Thread.CurrentThread.ManagedThreadId;
            Thread.Sleep(1000);
            Console.WriteLine("Data for managed thread {0}: {1}",
                Thread.CurrentThread.ManagedThreadId, threadSpecificData);
        }
    }
}
```

And this may produce the following output:
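One trap worth knowing about with ThreadStaticAttribute: an initializer on a thread-static field runs only once, as part of the type's static initialization, on whichever thread touches the type first. Every other thread starts with the default value. This minimal sketch (hypothetical names `ThreadStaticPitfall`, `Holder`, `Run`) shows the main thread seeing 42 while a worker sees 0.

```csharp
using System;
using System.Threading;

namespace ThreadStaticPitfall
{
    public static class Holder
    {
        // The initializer runs once, on the thread that triggers the type's
        // static initialization. Other threads get default(int), i.e. 0.
        [ThreadStatic]
        public static int Value = 42;
    }

    public static class Program
    {
        public static int MainThreadValue;
        public static int WorkerThreadValue;

        public static void Run()
        {
            // The main thread touches Holder first, so its copy is 42.
            MainThreadValue = Holder.Value;

            Thread worker = new Thread(() => { WorkerThreadValue = Holder.Value; });
            worker.Start();
            worker.Join();
        }

        static void Main()
        {
            Run();
            Console.WriteLine("Main thread sees {0}, worker sees {1}",
                MainThreadValue, WorkerThreadValue);
        }
    }
}
```

If every thread needs a starting value, a common approach is to set the field at the start of each thread's work, as the MSDN example above does.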

When I talked about processes earlier, I mentioned that processes have physically isolated memory and resources, and that a process has at least one thread. Microsoft also introduced an extra layer of abstraction/isolation called an AppDomain. The AppDomain is not a physical isolation, but rather a logical isolation within the process. Since more than one AppDomain can exist in a process, we get some benefits. For example, before AppDomains, applications that needed to access each other's data had to live in separate processes and use a proxy, which introduced extra code and overhead. By using AppDomains, it is possible to launch several applications within the same process, with the same sort of isolation that exists between processes. Threads can execute across application domains without the overhead of inter-process communication. This is all encapsulated within the AppDomain class. Any time an assembly is loaded in an application, it is loaded into an AppDomain; the AppDomain used will be the same as the calling code's unless otherwise specified. Unlike a process, an AppDomain does not own threads: a thread runs in one AppDomain at a time, so an AppDomain may or may not have any threads running in it.
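To see which AppDomain your code is running in, and which assemblies have been loaded into it so far, `AppDomain.CurrentDomain` is enough. A minimal sketch with hypothetical names (`DomainAssemblies`, `DomainInfo`):

```csharp
using System;
using System.Reflection;

namespace DomainAssemblies
{
    public static class DomainInfo
    {
        // Returns the assemblies loaded into the calling code's AppDomain so far.
        public static Assembly[] LoadedAssemblies()
        {
            return AppDomain.CurrentDomain.GetAssemblies();
        }

        static void Main()
        {
            AppDomain domain = AppDomain.CurrentDomain;
            Console.WriteLine("Running in AppDomain '{0}' (Id {1})",
                domain.FriendlyName, domain.Id);

            foreach (Assembly assembly in LoadedAssemblies())
            {
                Console.WriteLine("  {0}", assembly.GetName().Name);
            }
        }
    }
}
```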

Why You Should Use AppDomains

As I stated above, AppDomains are a further level of abstraction/isolation, and they sit within a process. So why use AppDomains? One of this article's readers actually gave a very good example of this:

"I have previously needed to execute code in a separate AppDomain for a Visual Studio add-in that used Reflection to look at the current project's DLL file. Without examining the DLL in a separate AppDomain, any changes to the project made by the developer would not show up in Reflection unless they restarted Visual Studio. This is exactly because of the reason pointed out by Marc: once an AppDomain loads an assembly, it can't be unloaded."

-- AppDomain forum post, by Daniel Flowers

So we can see that an AppDomain can be used to load an assembly dynamically, and the entire AppDomain can be unloaded without affecting the process. I think this illustrates the abstraction/isolation that an AppDomain gives us.

NUnit also takes this approach, but more on this below.

Setting AppDomain Data

Let's see an example of how to work with AppDomain data:

```csharp
using System;
using System.Threading;

namespace AppDomainData
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Fetching current Domain");
            AppDomain domain = System.AppDomain.CurrentDomain;

            Console.WriteLine("Setting AppDomain Data");
            string name = "MyData";
            string value = "Some data to store";
            domain.SetData(name, value);

            Console.WriteLine("Fetching Domain Data");
            Console.WriteLine("The data found for key {0} is {1}",
                name, domain.GetData(name));

            Console.ReadLine();
        }
    }
}
```

This produces a rather unexciting output:

And how about executing code in a specific AppDomain? Let's see that now:

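```csharp
using System;
using System.Threading;

namespace LoadNewAppDomain
{
    class Program
    {
        static void Main(string[] args)
        {
            // AppDomain.CreateDomain is supported on the .NET Framework only;
            // on .NET Core / .NET 5+ it throws PlatformNotSupportedException.
            AppDomain domainA = AppDomain.CreateDomain("MyDomainA");
            AppDomain domainB = AppDomain.CreateDomain("MyDomainB");

            // Each domain gets its own value for the same key.
            domainA.SetData("DomainKey", "Domain A value");
            domainB.SetData("DomainKey", "Domain B value");

            // Run OutputCall in the default domain, then inside each new domain.
            // DoCallBack runs synchronously on the calling thread, so the same
            // thread Id is printed each time.
            OutputCall();
            domainA.DoCallBack(OutputCall);
            domainB.DoCallBack(OutputCall);

            Console.ReadLine();
        }

        public static void OutputCall()
        {
            AppDomain domain = AppDomain.CurrentDomain;
            Console.WriteLine("the value {0} was found in {1}, running on thread Id {2}",
                domain.GetData("DomainKey"), domain.FriendlyName,
                Thread.CurrentThread.ManagedThreadId.ToString());
        }
    }
}
```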

NUnit and AppDomains

Since I first published this article, there have been a few suggestions; the one that seemed to get the most attention (at least for this article's content) was NUnit and AppDomains, so I thought I had better address that.

Shown below are two interesting quotes that I found on the NUnit site and also a personal blog.

"Dynamic reloading of an assembly using AppDomains and shadow copying. This also applies if you add or change tests. The assembly will be reloaded and the display will be updated automatically. The shadow copies use a configurable directory specified in the executable's (nunit-gui and nunit-console) config files."

-- NUnit Release Notes Page

"NUnit was written by .NET Framework experts. If you look at the NUnit source, you see that they knew how to dynamically create AppDomains and load assemblies into these domains. Why is a dynamic AppDomain important? What the dynamic AppDomain lets NUnit do is to leave NUnit open, while permitting you to compile, test, modify, recompile, and retest code without ever shutting down. You can do this because NUnit shadow copies your assemblies, loads them into a dynamic domain, and uses a file watcher to see if you change them. If you do change your assemblies, then NUnit dumps the dynamic AppDomain, recopies the files, creates a new AppDomain, and is ready to go again."

-- Dr Dobbs Portal

Essentially, what NUnit does is host the test assembly in a separate AppDomain. And as AppDomains are isolated, they can be unloaded without affecting the process to which they belong.
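The create, use, unload cycle that NUnit relies on can be sketched in a few lines. This is not NUnit's code, just a minimal illustration under the assumption that you are on the .NET Framework; the names (`UnloadDomainDemo`, `Unloader`, `SayWhere`) are hypothetical. On .NET Core / .NET 5+, which support only one AppDomain, `CreateDomain` throws `PlatformNotSupportedException`, so the sketch reports that and returns false.

```csharp
using System;

namespace UnloadDomainDemo
{
    public static class Unloader
    {
        // Creates a throwaway AppDomain, runs code in it, then unloads it.
        // Returns false on runtimes that cannot create extra AppDomains.
        public static bool CreateRunAndUnload()
        {
            AppDomain scratch;
            try
            {
                scratch = AppDomain.CreateDomain("ScratchDomain");
            }
            catch (PlatformNotSupportedException)
            {
                Console.WriteLine("This runtime does not support creating AppDomains");
                return false;
            }

            scratch.DoCallBack(SayWhere);

            // Everything loaded into the scratch domain goes away with it;
            // the process and the default domain carry on.
            AppDomain.Unload(scratch);

            try
            {
                scratch.DoCallBack(SayWhere);
            }
            catch (AppDomainUnloadedException)
            {
                Console.WriteLine("ScratchDomain is gone, but the process is still running");
            }
            return true;
        }

        public static void SayWhere()
        {
            Console.WriteLine("Hello from {0}", AppDomain.CurrentDomain.FriendlyName);
        }

        static void Main()
        {
            CreateRunAndUnload();
        }
    }
}
```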

Just as we humans have priorities in real life, so do threads. A programmer can decide a priority for their thread, but ultimately, it's up to the operating system to decide what should be acted upon now and what can wait.

Windows uses a priority system from 0 to 31, where 31 is the highest. Setting anything higher than 15 requires administrator privileges. Threads with priorities between 16 and 31 are considered real-time, and will pre-empt lower-priority threads. Think of drivers, input devices, and the like; these will be running with priorities between 16 and 31.

In Windows, each priority level has its own queue of ready threads, and threads at the same level are typically scheduled round robin. The scheduler always runs threads from the highest priority level that has a ready thread; lower-priority threads are only scheduled when there are no ready threads at a higher level. If a thread with a higher priority becomes ready, the currently running lower-priority thread is pre-empted and the higher-priority thread is run.

If we again use Task Manager, we can see that it is possible to give a process a higher priority, which gives its threads (including any newly spawned ones) a better chance of being scheduled (given some CPU time).

But we also have options in code, as the System.Threading.Thread class exposes a Priority property. According to MSDN, a thread can be assigned any one of the following priority values:

- Highest
- AboveNormal
- Normal
- BelowNormal
- Lowest

Note: Operating Systems are not required to honor the priority of a thread.

For instance, an OS may decay the priority assigned to a high-priority thread, or otherwise dynamically adjust the priority in the interest of fairness to other threads in the system. A high-priority thread can, as a consequence, be preempted by threads of lower priority. In addition, most OSs have unbounded dispatch latencies: the more threads in the system, the longer it takes for the OS to schedule a thread for execution. Any one of these factors can cause a high-priority thread to miss its deadlines, even on a fast CPU.

The same caveats apply when you programmatically set a thread you created to the Highest priority level. So be warned: be careful what you do when setting priorities for threads.

Starting new threads is pretty easy; we just need to use one of the Thread constructors, such as:

- Thread(ThreadStart)
- Thread(ParameterizedThreadStart)
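Here is a minimal sketch of setting the Priority property before starting a thread, plus reading the process priority class that Task Manager's priority menu changes. The names (`PriorityDemo`, `Priorities`, `RunBelowNormalWorker`) are hypothetical, and as the note above says, the priority is a request to the scheduler, not a guarantee of CPU time.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

namespace PriorityDemo
{
    public static class Priorities
    {
        // Starts a worker at BelowNormal priority and returns the priority
        // the worker reported for itself while running.
        public static ThreadPriority RunBelowNormalWorker()
        {
            ThreadPriority seen = ThreadPriority.Normal;
            Thread worker = new Thread(() =>
            {
                seen = Thread.CurrentThread.Priority;
            });
            worker.Priority = ThreadPriority.BelowNormal; // set before Start
            worker.Start();
            worker.Join();   // Join also makes the worker's write to 'seen' visible
            return seen;
        }

        static void Main()
        {
            using (Process current = Process.GetCurrentProcess())
            {
                Console.WriteLine("Process priority class: {0}", current.PriorityClass);
            }
            Console.WriteLine("Worker ran at: {0}", RunBelowNormalWorker());
        }
    }
}
```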

There are others, but these are the most common ways to start threads. Let's look at an example of each of these.

No Parameters

```csharp
Thread workerThread = new Thread(StartThread);
Console.WriteLine("Main Thread Id {0}",
    Thread.CurrentThread.ManagedThreadId.ToString());
workerThread.Start();

// ....

public static void StartThread()
{
    for (int i = 0; i < 10; i++)
    {
        Console.WriteLine("Thread value {0} running on Thread Id {1}",
            i.ToString(),
            Thread.CurrentThread.ManagedThreadId.ToString());
    }
}
```

Single Parameter

```csharp
Thread workerThread2 = new Thread(ParameterizedStartThread);
workerThread2.Start(42);
Console.ReadLine();

// ....

public static void ParameterizedStartThread(object value)
{
    Console.WriteLine("Thread passed value {0} running on Thread Id {1}",
        value.ToString(),
        Thread.CurrentThread.ManagedThreadId.ToString());
}
```

Putting it all together, we can see a small program with a main thread and two worker threads.

```csharp
using System;
using System.Threading;

namespace StartingThreads
{
    class Program
    {
        static void Main(string[] args)
        {
            Thread workerThread = new Thread(StartThread);
            Console.WriteLine("Main Thread Id {0}",
                Thread.CurrentThread.ManagedThreadId.ToString());
            workerThread.Start();

            Thread workerThread2 = new Thread(ParameterizedStartThread);
            workerThread2.Start(42);

            Console.ReadLine();
        }

        public static void StartThread()
        {
            for (int i = 0; i < 10; i++)
            {
                Console.WriteLine("Thread value {0} running on Thread Id {1}",
                    i.ToString(),
                    Thread.CurrentThread.ManagedThreadId.ToString());
            }
        }

        public static void ParameterizedStartThread(object value)
        {
            Console.WriteLine("Thread passed value {0} running on Thread Id {1}",
                value.ToString(),
                Thread.CurrentThread.ManagedThreadId.ToString());
        }
    }
}
```

Which may produce something like:

Pretty simple, huh?
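ParameterizedThreadStart only passes a single `object`, so the worker has to cast it. Since C# 3.0 (the version that ships with Visual Studio 2008), a common alternative is to start the thread with a lambda that captures typed arguments. A minimal sketch, with hypothetical names (`LambdaStart`, `Starter`, `AddOnWorker`):

```csharp
using System;
using System.Threading;

namespace LambdaStart
{
    public static class Starter
    {
        // Runs Add on a worker thread. The lambda captures 'a' and 'b' as
        // typed values, so there is no cast from object, and passing more
        // than one argument is easy.
        public static int AddOnWorker(int a, int b)
        {
            int result = 0;
            Thread worker = new Thread(() => result = Add(a, b));
            worker.Start();
            worker.Join();   // wait so that 'result' has been written
            return result;
        }

        static int Add(int a, int b)
        {
            Console.WriteLine("Adding on Thread Id {0}",
                Thread.CurrentThread.ManagedThreadId);
            return a + b;
        }

        static void Main()
        {
            Console.WriteLine("Main Thread Id {0}", Thread.CurrentThread.ManagedThreadId);
            Console.WriteLine("Result: {0}", AddOnWorker(40, 2));
        }
    }
}
```

One thing to watch with this style: a lambda created inside a loop captures the variable, not its value at that moment, so copy a loop counter into a local before capturing it.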

So you have now seen some simple examples of creating threads.

What we have not seen yet is the principle of synchronization between threads.

Threads run independently of the rest of the application code, so you can never be sure of the exact order of events. That is to say, we can't guarantee that actions one thread performs on a shared resource will be completed before code that uses that resource runs in another thread.

We will be looking at this in great detail in subsequent articles, but for now, let's consider a small example using a Timer. Using a Timer, we can specify that a method is called at some interval, and this method could check the state of some data before continuing. It's a very simple model; the next articles will cover more advanced synchronization techniques.

Let's see a very small example. This example starts a worker thread and a Timer. The main thread is put in a loop, waiting for a completed flag to be set to true. The Timer callback checks the message written by the worker thread, and once it reads "Completed", it sets the completed flag to true, allowing the spinning main thread to continue.

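```csharp
using System;
using System.Threading;

namespace CallBacks
{
    class Program
    {
        // Written by the worker thread, read by the timer callback.
        // volatile makes each read see the latest write.
        private volatile string message;
        private static Timer timer;
        // volatile so the spinning loop in Main re-reads the flag instead of
        // letting the JIT hoist the read out of the loop.
        private static volatile bool complete;

        static void Main(string[] args)
        {
            Program p = new Program();
            Thread workerThread = new Thread(p.DoSomeWork);
            workerThread.Start();

            TimerCallback timerCallBack = new TimerCallback(p.GetState);
            timer = new Timer(timerCallBack, null,
                TimeSpan.Zero, TimeSpan.FromSeconds(2));

            // Spin (burning CPU) until the timer callback sets the flag.
            do
            {
            } while (!complete);

            Console.WriteLine("exiting main thread");
            Console.ReadLine();
        }

        public void GetState(Object state)
        {
            // Read once; message is null until the worker has started.
            string current = message;
            if (string.IsNullOrEmpty(current)) return;

            Console.WriteLine("Worker is {0}", current);
            if (current == "Completed")
            {
                timer.Dispose();
                complete = true;
            }
        }

        public void DoSomeWork()
        {
            message = "processing";
            Thread.Sleep(3000);
            message = "Completed";
        }
    }
}
```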

This may produce something like:
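The do/while loop in that example keeps a CPU core busy the whole time the main thread waits. As a preview of the kind of technique later articles deal with, here is one alternative sketch (not the article's method) in which the timer callback signals a `ManualResetEvent` and the main thread blocks on `WaitOne()` instead of spinning. The names (`CallBacksWithEvent`, `Worker`, `Run`) are hypothetical, and the timings are shortened.

```csharp
using System;
using System.Threading;

namespace CallBacksWithEvent
{
    public class Worker
    {
        private volatile string message = string.Empty;
        private readonly ManualResetEvent completed = new ManualResetEvent(false);
        private Timer timer;

        // Same shape as the CallBacks example, but the caller blocks on an
        // event instead of spinning in a loop.
        public string Run()
        {
            Thread workerThread = new Thread(DoSomeWork);
            workerThread.Start();

            timer = new Timer(GetState, null,
                TimeSpan.Zero, TimeSpan.FromMilliseconds(500));

            completed.WaitOne();   // blocks without using CPU until Set is called
            timer.Dispose();
            return message;
        }

        private void GetState(object state)
        {
            string current = message;
            if (current.Length == 0) return;

            Console.WriteLine("Worker is {0}", current);
            if (current == "Completed")
            {
                completed.Set();   // release the waiting thread
            }
        }

        private void DoSomeWork()
        {
            message = "processing";
            Thread.Sleep(1500);
            message = "Completed";
        }

        static void Main()
        {
            Console.WriteLine("Final message: {0}", new Worker().Run());
        }
    }
}
```

The event is deliberately not disposed, because `Timer.Dispose()` does not wait for a callback that is already running, and such a callback may still call `Set()`.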

We're Done

Well, that's all I wanted to say this time. Threading is a complex subject, and as such, this series will be quite hard, but I think it is worth a read.

Next Time

Next time we will be looking at Lifecycle of Threads/Threading Opportunities/Traps.

Could I just ask: if you liked this article, please vote for it, as that will tell me whether this threading crusade that I am about to embark on is worth creating articles for.

I thank you very much.

History

- v1.1: 19/05/08: Added why to use AppDomain/NUnit with AppDomain, and extra parts to threading priorities.

- v1.0: 18/05/08: Initial issue.