Introduction

Our applications are populated with objects of arbitrary shape and size. The view of any application (at least its initial view) is fixed at the design stage. Developers do their best to design programs that look best for every user, but each user has a personal idea of what "best" means, so for designer-driven applications this task has no solution, and there is an everlasting conflict between designers and users. The source of this conflict lies in the basic idea of designer-driven applications; no advances in adaptive interfaces or dynamic layout can eliminate it. The only way past this huge problem, and the chance to move programming and applications to another level, is the shift to user-driven applications: applications in which the designer determines only the purpose of the application and its calculation algorithms, while the view of the application is decided entirely by each user.

The key to solving this problem is the design of an algorithm that can turn ANY object into a moveable and resizable one. Such an algorithm has two main requirements:

- It must be EASY to IMPLEMENT for any object.

- Moving and resizing must work in an absolutely natural way, so that users don't need to learn anything at all; the only thing they need to know is that EVERYTHING is moveable and resizable.

The step from designer-driven to user-driven applications is a revolutionary one. Two different communities, developers and users, deal with applications: fulfilling the first requirement allows developers to make this step, and fulfilling the second allows users to make it without any problems.

The requirement that everything in an application be moveable / resizable follows from one of the main features of user-driven applications: if you put even a single moveable object into your application, all the surrounding or linked elements will demand the same feature. (You can't place even a single immovable element on a road; it would be a disaster.)

The simplicity of the algorithm is crucial in the design of user-driven applications. Let's look at the algorithm and some of its implementations. The GraphicsMRR application demonstrates only four forms with samples. A much more detailed description of the algorithm can be found at www.sourceforge.net in the MoveableGraphics project (the name is case-sensitive!), which contains a set of useful documents and programs.

Let's begin with some terms. The main idea of the algorithm is to cover an object with a set of sensitive areas in which moving or resizing can be started. The whole set of such areas for an object constitutes a cover (class Cover); each individual area is an object of the CoverNode class. Because an object must be movable by any of its points, the object's whole area must be completely covered by nodes; nodes may overlap, and that is not a problem at all.

An object of the Mover class supervises the whole moving / resizing process for the registered objects (the objects included in its List). The cover of an object can be organized in many different ways; even objects of the same class can have different covers. What matters is the order in which objects are registered with the Mover, because they are selected for moving / resizing according to their order in the List. This is important because, once all objects become moveable, they can easily overlap.
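A minimal sketch of how List order decides which object is caught when the mouse is pressed. It is an illustration, not the MoveGraphLibrary code: `IMoveableSketch`, `RectSketch`, and `FindCaught` are hypothetical names, each cover is reduced to a single rectangle, and coordinates are plain doubles.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for an object registered with the mover.
interface IMoveableSketch
{
    string Name { get; }
    bool Contains(double x, double y);   // true if the point is inside the object's cover
}

// Simplest possible cover: one axis-aligned rectangle.
class RectSketch : IMoveableSketch
{
    private readonly double x, y, w, h;
    public string Name { get; }
    public RectSketch(string name, double x, double y, double w, double h)
    {
        Name = name; this.x = x; this.y = y; this.w = w; this.h = h;
    }
    public bool Contains(double px, double py) =>
        px >= x && px <= x + w && py >= y && py <= y + h;
}

static class MoverSelectionSketch
{
    // Index of the first object, in List order, whose cover contains the point;
    // -1 means the mouse was pressed on an empty place.
    public static int FindCaught(List<IMoveableSketch> list, double x, double y) =>
        list.FindIndex(obj => obj.Contains(x, y));
}
```

If a small comment is registered ahead of a large polygon that lies underneath it, a press on the comment catches the comment; swapping the two entries would make the polygon win everywhere, which is why registration order matters.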

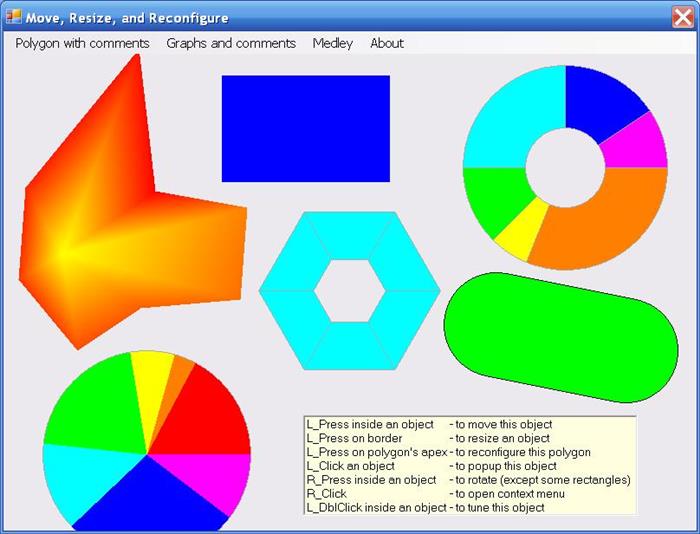

Form_Main.cs demonstrates the algorithm at work with different geometrical figures. Figures can be added or deleted at any moment through the context menu. Figure 1 shows how the application looks to users: there are no additional lines or marks, so the view of the working application is not changed at all. It's enough for users to know that everything here is moveable and resizable. These operations are done in the most natural way, with the mouse:

- Any object is moved by pressing it at any inner point.

- Any object is resized by pressing it at any border point.

Fig.1: Any number of objects in Form_Main.cs can be moved, resized, and reconfigured

Objects in an application can be of arbitrary shape, but any of them can be covered with nodes from a very limited variety of shapes: any CoverNode object is either a circle, a convex polygon, or a strip with rounded ends. Covers can be designed in different ways (even objects of the same class can have different covers); it doesn't really matter how a cover is organized, as long as the object can be moved and resized. Figure 2 shows exactly the same figures as figure 1, but with their covers displayed.

Fig.2: The same objects with their covers shown

Figure 2 shows all three types of cover nodes in use.

Circles are mostly used to cover the special places by which objects are reconfigured (for example, apexes). A significant number of small circles can also cover a curved border, so that such an object can be resized by any border point; this type of cover design is called an N-node cover.

Convex polygons of different shapes are used to cover the whole inner area, so that an object can be moved by any point.

Strips are mostly used to cover the straight parts of the border to allow resizing.
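Each of the three node shapes can be hit-tested with elementary geometry. The following is a minimal sketch, not the library's CoverNode implementation: `NodeSketch`, `CircleNode`, `ConvexPolygonNode`, `StripNode`, and `CoverSketch` are hypothetical, coordinates are plain doubles, and the nodes of a cover are assumed to be checked in order, the same way objects are checked in the mover's List.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical node classes; the real CoverNode API may differ.
abstract class NodeSketch
{
    public abstract bool Contains(double x, double y);
}

// Circle: points within radius r of the center.
class CircleNode : NodeSketch
{
    private readonly double cx, cy, r;
    public CircleNode(double cx, double cy, double r) { this.cx = cx; this.cy = cy; this.r = r; }
    public override bool Contains(double x, double y) =>
        (x - cx) * (x - cx) + (y - cy) * (y - cy) <= r * r;
}

// Convex polygon: the point is inside (or on the border) if no two edges see it on opposite sides.
class ConvexPolygonNode : NodeSketch
{
    private readonly double[] xs, ys;
    public ConvexPolygonNode(double[] xs, double[] ys) { this.xs = xs; this.ys = ys; }
    public override bool Contains(double x, double y)
    {
        bool hasPos = false, hasNeg = false;
        for (int i = 0; i < xs.Length; i++)
        {
            int j = (i + 1) % xs.Length;
            // z-component of the cross product of edge (i -> j) and vector (i -> point)
            double cross = (xs[j] - xs[i]) * (y - ys[i]) - (ys[j] - ys[i]) * (x - xs[i]);
            if (cross > 0) hasPos = true;
            if (cross < 0) hasNeg = true;
        }
        return !(hasPos && hasNeg);
    }
}

// Strip with rounded ends: points within halfWidth of the segment (x0, y0)-(x1, y1).
class StripNode : NodeSketch
{
    private readonly double x0, y0, x1, y1, halfWidth;
    public StripNode(double x0, double y0, double x1, double y1, double halfWidth)
    {
        this.x0 = x0; this.y0 = y0; this.x1 = x1; this.y1 = y1; this.halfWidth = halfWidth;
    }
    public override bool Contains(double x, double y)
    {
        double dx = x1 - x0, dy = y1 - y0;
        double len2 = dx * dx + dy * dy;
        // Parameter of the closest point on the segment, clamped to [0, 1]
        double t = len2 == 0 ? 0 : ((x - x0) * dx + (y - y0) * dy) / len2;
        t = Math.Max(0, Math.Min(1, t));
        double ex = x0 + t * dx - x, ey = y0 + t * dy - y;
        return ex * ex + ey * ey <= halfWidth * halfWidth;
    }
}

// A cover is an ordered set of nodes; the first node containing the point is reported.
class CoverSketch
{
    private readonly List<NodeSketch> nodes;
    public CoverSketch(params NodeSketch[] nodes) { this.nodes = new List<NodeSketch>(nodes); }
    public int FindNode(double x, double y) => nodes.FindIndex(n => n.Contains(x, y));
}
```

In such a sketch, placing the border strips ahead of the inner polygon lets a press near the border report a strip (resizing) rather than the polygon (moving), even where the two overlap.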

The algorithm supports not only the forward movement of objects but also their rotation, if needed.

To organize the moving / resizing of an arbitrary set of elements, a Mover object must be declared and initialized.

```csharp
Mover mover;
mover = new Mover(this);
```

Mover takes care only of the objects included in its List.

```csharp
mover.Insert(0, circle);
mover.Add(strip);
```

Moving and resizing are done solely with the mouse, so only three mouse events are used.

```csharp
private void OnMouseDown(object sender, MouseEventArgs mea)
{
    mover.Catch(mea.Location);
}

private void OnMouseUp(object sender, MouseEventArgs mea)
{
    mover.Release();
}

private void OnMouseMove(object sender, MouseEventArgs mea)
{
    if (mover.Move(mea.Location))
    {
        Invalidate();
    }
}
```

This is not a simplification: this is the code you will find in nearly every form of these applications, regardless of the number or complexity of the objects involved in moving / resizing. Each of the three methods contains a single call to one of the Mover's methods, and that is all that is needed. Some special cases may require a few additional lines in OnMouseDown() and OnMouseUp(). For example, if an object can be rotated, a call to its StartRotation() method must be added to OnMouseDown(). StartRotation() is usually very short, as demonstrated in Form_Main.cs. If an object can be rotated, its angle is one of its parameters; StartRotation() calculates and stores the difference between this angle and the angle to the mouse position at the moment rotation starts.

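```csharp
public void StartRotation(Point ptMouse)
{
    // Angle from the center of rotation (ptC) to the mouse;
    // screen Y grows downward, hence the minus sign
    double angleMouse = -Math.Atan2(ptMouse.Y - ptC.Y, ptMouse.X - ptC.X);
    // Store the difference between the mouse angle and the object's angle (angle_0)
    compensation_0 = Auxi_Common.LimitedRadian(angleMouse - angle_0);
}
```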
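StartRotation() only stores the compensation; the object's angle is updated later, while the mouse moves. A minimal sketch of both steps, assuming that Auxi_Common.LimitedRadian brings an angle into the range [0, 2π) and that the rotation is applied as "mouse angle minus compensation". `RotatableSketch` and `Rotate` are hypothetical names, not the library's API.

```csharp
using System;

// Hypothetical rotatable object mirroring the fields used in StartRotation().
class RotatableSketch
{
    public double CenterX, CenterY;   // center of rotation (ptC in the article)
    public double Angle;              // object's angle in radians (angle_0)
    private double compensation;      // compensation_0

    // Assumed behavior of Auxi_Common.LimitedRadian: bring an angle into [0, 2*PI).
    public static double LimitedRadian(double a)
    {
        double twoPi = 2 * Math.PI;
        a %= twoPi;
        return a < 0 ? a + twoPi : a;
    }

    // Screen Y grows downward, hence the minus sign for a counterclockwise angle.
    private double MouseAngle(double mx, double my) =>
        -Math.Atan2(my - CenterY, mx - CenterX);

    // Called from OnMouseDown: remember the angular offset between mouse and object.
    public void StartRotation(double mx, double my) =>
        compensation = LimitedRadian(MouseAngle(mx, my) - Angle);

    // Called while the mouse moves: the object keeps the same offset to the cursor.
    public void Rotate(double mx, double my) =>
        Angle = LimitedRadian(MouseAngle(mx, my) - compensation);
}
```

Storing the offset instead of snapping the object's angle to the mouse angle means that the object does not jump when rotation starts at an arbitrary inner point.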

When different context menus are used, the call to the menu selection is placed in OnMouseUp(); this is demonstrated in Form_Medley.cs, where 10 (!) different context menus are used. But these are only small additions to the standard and extremely simple code of the three mouse events.
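To see why Move() returns a bool and Release() reports whether anything was caught, here is a minimal sketch of the Catch / Move / Release cycle for a single rectangle. It is an illustration under stated assumptions, not the Mover implementation: `DragSketch` and its members are hypothetical, and the object is assumed to keep the offset between its corner and the grab point for the whole drag.

```csharp
using System;

// Hypothetical single-rectangle version of the Catch / Move / Release cycle.
class DragSketch
{
    public double X, Y;              // top-left corner of the object
    public readonly double W, H;
    private bool caught;
    private double dx, dy;           // offset from the corner to the grab point

    public DragSketch(double x, double y, double w, double h) { X = x; Y = y; W = w; H = h; }

    // MouseDown: remember whether the object was grabbed and where.
    public void Catch(double mx, double my)
    {
        caught = mx >= X && mx <= X + W && my >= Y && my <= Y + H;
        if (caught) { dx = mx - X; dy = my - Y; }
    }

    // MouseMove: true only if something actually moved, so the form repaints only when needed.
    public bool Move(double mx, double my)
    {
        if (!caught) return false;
        X = mx - dx;
        Y = my - dy;
        return true;
    }

    // MouseUp: true if an object had been caught; the drag ends either way.
    public bool Release()
    {
        bool was = caught;
        caught = false;
        return was;
    }
}
```

With this shape, a form's OnMouseMove() can call Invalidate() only when Move() returns true, and OnMouseUp() can branch on Release() to tell a press on an object from a press on an empty place.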

The moveable / resizable objects in figures 1 and 2 have different shapes, but they share one feature: each of them moves on its own, without any relation to other objects. Real applications often have much more complicated objects that consist of different parts. Such an object must be able to move as a whole, with all its parts moving synchronously, while the parts themselves can also be moved independently. This is demonstrated in Form_RegPolyWithComments.cs, shown in figure 3.

Fig.3: The regular polygon can be moved synchronously with all its comments, but each comment can also be moved and rotated individually.

The polygon can be moved forward, resized, and rotated. As usual, forward movement and rotation can be started at any inner point of the polygon, and resizing at any border point. The polygon can have an arbitrary number of comments; new comments are added with the New comment group, and any comment can be moved forward or rotated by its inner points.

The comments are shown on top of their “parent” polygon. When two or more objects overlap on the screen, users expect the upper one to be selected for moving when the mouse is pressed at that point. Since objects are selected according to their order in the mover's List, all the comments must precede their “parent” polygon in that List. To guarantee this, the polygon and its comments are not added to the List manually; instead, the polygon.IntoMover(…) method is used, which ensures the correct order of the polygon's parts in the mover's List regardless of the number of comments.

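```csharp
public void IntoMover(Mover mover, int iPos)
{
    // The polygon goes in first...
    mover.Insert(iPos, this);
    // ...then each comment is inserted in front of it; going backwards
    // keeps the comments in their original order
    for (int i = comments.Count - 1; i >= 0; i--)
    {
        mover.Insert(iPos, comments[i]);
    }
}
```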
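The ordering produced by IntoMover() can be checked without a form. In this minimal sketch, a `List<string>` plays the role of the mover's List and the strings name the polygon and its comments; `IntoMoverSketch` is a hypothetical helper that repeats the same insertion logic.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in: strings take the place of the polygon and its comments.
static class IntoMoverSketch
{
    // Same logic as IntoMover(): insert the parent, then insert the comments at the
    // same position in reverse order, so they keep their order and all precede the parent.
    public static void IntoMover(List<string> mover, int iPos, string parent, List<string> comments)
    {
        mover.Insert(iPos, parent);
        for (int i = comments.Count - 1; i >= 0; i--)
        {
            mover.Insert(iPos, comments[i]);
        }
    }
}
```

Registering a polygon with comments "c0", "c1", "c2" at position 0 of a List that already holds "other" yields the order c0, c1, c2, polygon, other: every comment is checked before its polygon, and anything registered earlier stays behind the new group.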

The New comment group in figure 3 looks very similar to a standard GroupBox, but in reality it is much more powerful. The forms of our applications are populated with graphical objects, controls, and groups of controls. If graphical objects could be moved and resized while controls and groups of controls could not, that would be a big flaw in the algorithm. Of course, the algorithm was designed so that any object, regardless of its origin, can be moved and resized. A standard GroupBox can be moved only by its border, not by its inner points. To do better, several classes for moveable / resizable groups were developed; the group in figure 3 belongs to the Group class. More on this subject is described in the article Forms' (dialogues') customization, based on moveable / resizable elements, which was published on CodeProject last month.

An easy-to-use algorithm for moving / resizing arbitrary objects is in great demand in nearly every branch of programming, but I am going to demonstrate only two of them here. The first area in which this algorithm should be of extraordinary value is the design of scientific and engineering programs.

When such applications are designed not for internal use but for the market, the number of users is many times larger than the number of developers. There is no chance that all users will be satisfied with any proposed design, and development is often accompanied by discussions, heated discussions, or even quarrels between developers and users. It is not rare for users to have opposite interface requirements; this is a dead end for any fixed interface, but not a problem at all for user-driven applications. Moveable / resizable objects eliminate this whole problem: there is nothing left to argue about, as any user can rearrange the view of an application in any way he wants. The problem of an annoying interface is closed.

Form_GraphsAndComments.cs demonstrates how such applications can be organized on the basis of moveable / resizable objects (figure 4). Scientific applications often show several plotting areas with calculated results, plus additional information (often in the form of tables) that is used throughout the analysis. With a fixed design, the number of plots and their positions are decided at the beginning of the work and almost never changed later. This is a very strict limitation on research work. Moveable / resizable objects change the whole idea of how such applications are designed and used. The developers are now responsible only for correct calculations and for an easy way to get out any and all of the results. What, where, and how to show on the screen is decided by the users, and only by the users. The demonstration form is kept simple so that it is easier to understand how it works, but even my most complicated scientific applications are organized in a similar way.

Fig.4: Scientific applications usually consist of the plotting areas and additional controls with the auxiliary data

In this demonstration program, data are selected by choosing functions in a list, so this process is simple and obvious. Everything else is exactly the same as in any other user-driven scientific application:

- Arbitrary form sizes

- Any number of plotting areas in the form

- An arbitrary number of functions in each plotting area

- Unrestricted moving and resizing of the areas

- An easy way of changing all the visualization parameters

- An easy way of adding, deleting, modifying, and moving the comments

- An easy way, with many variations, of saving and storing any piece of visualized information

There is not a single restriction from the designer’s side. It’s an absolutely user-driven application. Do whatever you need and want to.

The previous sample of the polygon with comments demonstrated a two-level system of synchronous / individual movements (polygon and comments). The plotting areas in this sample belong to a three-level system (main plotting area, scales, comments), but otherwise everything is exactly the same; the plots also have their own IntoMover() method. A plotting area can have multiple scales (both vertical and horizontal); the scales can be positioned anywhere relative to their “parent” plotting area; any plotting area or scale can have any number of arbitrarily positioned comments, each with its own visualization parameters. With all these "any, any, any", the code for the three mouse events is as simple as before: the complexity of the objects involved doesn't increase the complexity of the algorithm, which is still very easy to use and implement. Scientific applications demand a very high level of manual adjustment of the results by researchers at different stages of analysis. Designing such applications on the basis of moveable / resizable elements gives full control to the users, who in this area are often much better specialists than the developers of the programs they use. Full control must pass from the lesser specialists to the better ones. And to do this, the same three mouse events are used in a very simple way.
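For three or more levels, the same rule (every part registered ahead of its parent) can be applied recursively. A minimal sketch, assuming each part knows its direct children; `PartSketch` is a hypothetical class standing for a plot, a scale, or a comment, and a `List<string>` stands in for the mover's List. The article does not show the plots' own IntoMover(), so this is not its actual code.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical tree node standing for a plot, a scale, or a comment.
class PartSketch
{
    public string Name;
    public List<PartSketch> Children = new List<PartSketch>();

    public PartSketch(string name, params PartSketch[] children)
    {
        Name = name;
        Children.AddRange(children);
    }

    // Registers this part and all its descendants so that every child precedes
    // its parent (children are drawn on top and must be caught first).
    public void IntoMover(List<string> mover, int iPos)
    {
        mover.Insert(iPos, Name);
        for (int i = Children.Count - 1; i >= 0; i--)
        {
            Children[i].IntoMover(mover, iPos);
        }
    }
}
```

For a plot with a scale (which has its own comment) and a comment of its own, this produces the order: scale comment, scale, plot comment, plot. Each part is caught before anything it lies on top of, whatever the depth of the hierarchy.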

The MouseDown event is used to grab any object.

```csharp
private void OnMouseDown(object sender, MouseEventArgs mea)
{
    ptMouse_Down = mea.Location;
    mover.Catch(mea.Location, mea.Button, bShowAngle);
    ContextMenuStrip = null;
}
```

The MouseMove event is used for moving objects:

```csharp
private void OnMouseMove(object sender, MouseEventArgs e)
{
    if (mover.Move(e.Location))
    {
        Invalidate();
    }
}
```

The MouseUp event is used to release any object that was caught before. Because different context menus can be opened depending on which object was touched, the selection of the appropriate menu is called from inside this method.

```csharp
private void OnMouseUp(object sender, MouseEventArgs mea)
{
    ptMouse_Up = mea.Location;
    // A distance of a few pixels or less between press and release means a click, not a drag
    double nDist = Auxi_Geometry.Distance(ptMouse_Down, ptMouse_Up);
    if (mea.Button == MouseButtons.Left)
    {
        if (mover.Release())
        {
            int iInMover = mover.WasCaughtObject;
            if (mover[iInMover].Source is MSPlot)
            {
                Identification(iInMover);
                // A click on a plotting area moves it on top of the others
                if (nDist <= 3 && iAreaTouched > 0)
                {
                    MoveScreenAreaOnTop(iAreaTouched);
                }
            }
        }
    }
    else if (mea.Button == MouseButtons.Right)
    {
        if (mover.Release())
        {
            // Right click on an object: open the menu for that object
            if (nDist <= 3)
            {
                MenuSelection(mover.WasCaughtObject);
            }
        }
        else
        {
            // Right click on an empty place: open the general menu
            if (nDist <= 3)
            {
                iAreaTouched = -1;
                ContextMenuStrip = contextMenuOnEmpty;
            }
        }
    }
}
```
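The repeated `nDist <= 3` test separates a click from a drag: if the mouse is released within 3 pixels of the point where it was pressed, the action is treated as a click (bring the area on top, open a menu); otherwise it was a movement. A minimal sketch of that decision, assuming Auxi_Geometry.Distance is the ordinary Euclidean distance; `ClickSketch` and `ClickTolerance` are hypothetical names.

```csharp
using System;

static class ClickSketch
{
    // Tolerance taken from the code above: up to 3 pixels of hand jitter still counts as a click.
    public const double ClickTolerance = 3;

    // Assumed equivalent of Auxi_Geometry.Distance: plain Euclidean distance.
    public static double Distance(double x0, double y0, double x1, double y1) =>
        Math.Sqrt((x1 - x0) * (x1 - x0) + (y1 - y0) * (y1 - y0));

    public static bool IsClick(double x0, double y0, double x1, double y1) =>
        Distance(x0, y0, x1, y1) <= ClickTolerance;
}
```

A small tolerance rather than an exact equality check keeps a slightly shaky click from being misread as a tiny drag, while any real movement of an object is still recognized as a drag.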

The menu selection itself is very simple, and so is the identification, as every object receives a unique ID at initialization.

```csharp
private void MenuSelection(int iInMover)
{
    Identification(iInMover);
    if (iAreaTouched >= 0)
    {
        if (mover[iInMover].Source is MSPlot)
        {
            ContextMenuStrip = contextMenuOnPlot;
        }
        else if (mover[iInMover].Source is Scale)
        {
            ContextMenuStrip = contextMenuOnScale;
        }
        else if (mover[iInMover].Source is TextMR)
        {
            ContextMenuStrip = contextMenuOnComment;
        }
    }
}
```
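When the number of graphical classes grows, the if / else chain above grows with it. One possible alternative, offered only as a sketch and not as the approach used in the demo forms, is a lookup table keyed by the object's type. The classes `PlotSketch`, `ScaleSketch`, `CommentSketch`, and `MenuTableSketch` are hypothetical placeholders, and strings stand in for the ContextMenuStrip objects.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical placeholder classes standing in for the graphical classes of a form.
class PlotSketch { }
class ScaleSketch { }
class CommentSketch { }

static class MenuTableSketch
{
    // One entry per graphical class; supporting a new class means adding a line here
    // instead of another "else if" branch.
    static readonly Dictionary<Type, string> menus = new Dictionary<Type, string>
    {
        { typeof(PlotSketch), "contextMenuOnPlot" },
        { typeof(ScaleSketch), "contextMenuOnScale" },
        { typeof(CommentSketch), "contextMenuOnComment" },
    };

    // Returns the menu for the caught object, or null if its type is not registered.
    public static string Select(object source) =>
        source != null && menus.TryGetValue(source.GetType(), out string menu) ? menu : null;
}
```

One behavioral difference: `is` also matches derived classes, while this exact-type lookup does not, so a hierarchy of graphical classes would need one entry per concrete class.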

Applications for financial analysis (Form_Medley.cs, figure 5) are of a similar type.

Fig.5: Medley of the financial graphics

There can be more types of financial graphics, and more rotations may be needed than in scientific plotting, but in general the design is the same. Because there are more graphical classes, there are more context menus (10 in this form), so MenuSelection() is a bit longer, but just as simple. The general ideas of even the most complicated applications based on moveable / resizable elements are the same:

- The number of objects is determined only by the user’s wish; objects can be added or deleted at any moment via a context menu.

- Any object is moved by grabbing it at any inner point.

- Any object is resized by any of its border points. For a set of coaxial rings, this also applies to the borders of each ring.

- Each class of financial graphics has its own system of textual information; some texts are associated with the whole object, others with parts of the object. Each piece of text can be moved and rotated individually; it also moves synchronously with its “parent” whenever the parent is moved or rotated.

- All the visualization parameters, and there are a lot of them, can be easily tuned. Tuning can be done individually, or the parameters of one object can be used as a sample and spread to all its siblings, or they can be spread to all the “children”.

All these things are demonstrated in the code of Form_Medley.cs.

Conclusion

This article covers some aspects of the algorithm used to turn any object into a moveable / resizable one, and the design of applications based on such objects. A much more detailed explanation is available at www.sourceforge.net in the MoveableGraphics project (the name is case-sensitive!). Many different samples are demonstrated in the Test_MoveGraphLibrary application (all of its code is available) and described in Moveable_Resizable_Objects.doc. The samples in that application explain each aspect of the algorithm step by step, from simple examples to the most complicated ones.

History

- 30th September, 2009: Initial version
