Introduction

Recently I came across a situation where I needed to solve a 4th degree polynomial equation in .NET, and to my surprise I couldn't find any code written in C# or VB .NET that contained either the explicit algebraic formulas, or the numerical algorithm Jenkins-Traub. (I did, however, find several translations of the Jenkins-Traub algorithm written by Jenkins himelf in FORTRAN (Can still be downloaded here Netlib). I did however manage to mix up on of the implementations done in C++ that I translated to C# and VB.NET. In my defense I did translate the one written by Laurent Bartholdi too but that was not included in the article. The ones that I did use is one C++ algorithm translated by David Binner for the real coefficients only. Please accept my sincere apology for this mistake. The C++ I relied on for complex coefficients was written by Henrik Vestermark. It is both of these application that are converted from C++ and C into C # and VB code by me.

NB: There might be a licence attached to the Jerkins-Traub algorithm for commercial use, please check

this before you use it in a program that you want to sell to others. For rearch and personal use there is no issue.

The explicid algebraic formulas that is also implemented are nasty, and would definitely clog up your day if you ever had a need for one, so I decided to share it with you. I made some corrections in the formula thanks to the comment below by BenoitAndrieu.

I also have a rather lengthy story to follow it, as I have read "The equation that couldn’t be solved" by Mario Livio. The title of the book refers to the story of the insolvability of the 5th degree polynomial equation, but it also goes through the historical development of the solutions to lower degree polynomial equations.

I won’t give any explanations of how these formulas were derived, as the formulas get quite lengthy, so long that in fact that even Tartaglia (one of the people that had a part in solving the cubic equation) had problems in remembering all the rules he had discovered.

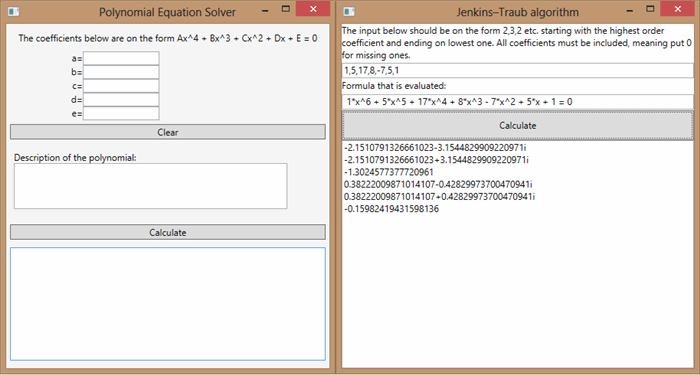

As for the numerical algoritm Jenkins-Traub, it has been completely translated from a C++and C version into VB.NET and C# by me, and as far as I have tested it, it seems to be working fine. It should be mentioned that the Jenkins-Traub algorithm generally uses explicit formulas for 1st and 2nd degree, while using the numerical approximation on 3rd degree and above.

The 1st degree polynomial equation

This is fairly easy to solve mathematically so if you are experiencing trouble here, you should probably not download the code. I am of course talking about equations on the form 2x + 3 = 7, and the simple equation is called linear equations, as they can be represented by lines when graphed (or drawn).

However the story behind equations is quite interesting, so I’m going to take you back to 2000 BC - 600 BC to the Babylonian civilization in Mesopotamia. The word equation should be used with care in this context, as the Babylonians didn’t actually use algebra to solve these equations, but instead they ventured into length debates and logic to solve the problems. This might be a way for making math even more difficult and incomprehensible than we have ever had, espesially when dealing with higher order polynomial equations.

The result of this way of solving mathematical problems without using algebra meant that the Babylonians could not find any general patterns or formulas behind the various mathematical problems. Despite the cumbersome formulations of the mathematical problems they did manage to solve a pairs (meaning equations with both x and y as unknown) of linear equations .

Babylonians didn't seem that keen on producing many text with the 1st degree equation, as for instance the Egyptians, as it seems that the Babylonians thought it too elementary for any detailed discussion. In Egypt however there exists large manuscripts on the subject that presents mathematical "recipes" with the solution to some problems, as kind of a cookbook.

It should be mentioned that the Chinese collection Nine chapters on Mathematical Art (Jiu zhang suan shu), one can find the solutions to none fewer than three linear equation with three unknowns, which is quite a feat consider how cumbersome the procedure was.

The 2nd degree polynomial equation

The origin of the famous solution to the second degree polynomial equation is actually not known, but it is known that Babylon’s actually made a great deal of detailed account on how to solve the equation, but not where they had got it from or how they discovered it. The Babylonian understood how to solve a quadratic equation on the form x^2 - x = 870 and could find the positive solution, as the solution were intended to be used in for instance land measurements and problems like that. They did however ignore equations of this type that had two positive solutions, as these were seen to be illogical solutions. This is also true about the very early Greek mathematician Euclid, as he solves the quadratic equation using geometry, and not by algebra. To compare the mathematical knowledge in the ancient world, the Egyptians on the other hand only knew how to solve equations on the form of x^2 = 4 and not how to solve x^2 + x = 4, and they could also only find the positive solution.

The Greek civilization soon managed to resolve some of the issues with the help of the brilliant mathematician Diaphanous. He effectively advanced the way solutions are presented, as a halfway point between the Babylonian wordings of the equations to the modern way of using algebra. His book Arithmetica shows solutions to three different types of the quadratic equations, as well as the famous Diaphanous equations, which Fermat's Last Theorem is an example of. Fermat in fact read Aritmethica and it was in this very book by Diaphanous that he wrote his famous last theorem in the margin. As for Diaphanous himself, we actually know very little of him, one can't even be curtain when he actually lived, except that it probably was in Alexandria in the period between A.D. 200 and 214 to 284 or 298.

With the fall of the Greek civilization the mathematical progress in the west came to a halt, and went into hibernation for nearly a millennium. The progress of mathematics now turned east, and one of the great mathematicians of his age, Brahmagupta from India, who managed to solve some of Diaphanous equations, as well as being the first to give solutions of the 2nd degree polynomial equations that also involved negative numbers. He realized that negative numbers could be seen as “debts” and positive numbers as “fortune”, very much like an accountant of today would, and thus making a hugh breakthrough in mathematics.

The next greate step in solving equations was done by the development of algebra, and got its name from the Arab mathematician Muhammad ibn Musa al-Khwarizmi, or rather his book “Kitab al-jabr wa al-muqabalah”, referring to the word al-jabr as the basis of the modern word algebra. Al-jabr means “restoration” or “completion” which is quite fitting concerning the importance of the mathematical development this would entail. His books weren’t ground breaking in new material; instead it was the systematic treatment of the solutions to the quadratic equation that was the real genius in it. However, the complete set of solutions of the quadratic equation didn’t appear into Europe until the twelfth century in Spain.

The implementation of the quadratic formula on a computer is however not so straight forward as one would initially think. We assume the equation on the form:

The solution is the well known formula, and I'm ofcource thinking about this one:

However, you'll be asking for trouble if you actually implemented the formula this way on a computer. The reason is that if the coefficients a or c are very close to zero, you could get hugh truncation errors. The coerrect implementation to find the roots on the computer are:

Even if the coefficients are complex the computer formula still holds, although one needs to take into account on how to take the sign of the square root:

In the formula above the Re stands for the real part of the solution and the asterisk is the complex conjugate of the complex number.

The 3rd and 4th degree polynomial equation

This is often referred to as the cubic or the quartic equation or function and, not surprisingly it turns up when we want to find the volume of something. The actual general equation wasn’t solved until the sixteen century, although a few special cases were solved by the Babylonians and a few more was given by the Persian poet Omar Khayyam in the twelfth century. However, there wasnt a real tangible need for the solution to the cubic equation. No one was waiting for it to be discovered, it was more of a mental challenge, sort of the Olympic games of mathematics that would determine the greates intellect of his day.

The first partial solution to the cubic equation would come from the oldest currently open university, the University of Bologna, which has been open since its establishment in 1088. After having been presented with a problem in 1501 that involved third degree polynomial equations, Scipione del Ferro decided to work on the problem. And around 1515 he managed to find a method for solving the cubic equations that had the form x3+mx = n. Del Ferro did not publish his result, which unfortunatly was quite normal in those days, and only told his student Antonio Fiore and his son-in-law about his discovery on his own death bed. Fiore then seem to think that the formula was his to use as he pleases from that point on, but did not publish it right away, but instead waited for the right moment to appear.

So when Niccolò Tartaglia in 1530 announced that he could solve some problems concerning the cubic equations, Fiore decided that his moment had come and challenged Tartaglia to a mathematical dispute. Each contestant would give the other one 30 problems to solve, and the loser would pay a monetary price to the winner. Each of them would have forty or fifty days to solve the problems.

By the time the problems were handed over to Tartaglia, he managed to solve all of them within a space of two hours! And Fiore could not solve any of the problems given to him and he did not know the equations on the form x3 + mx2 = n so Tartaglia won the competition.

In 1539, after a massive preservation campaign, a character by the name of Gerolamo Cardano (he actually earned his allowance by gambling when he was a student, and was known for beiing load and rude to the people around him) managed to persuade Tartaglia to reveal the formula, under the condition that Cardano would not reveal it. However Cardano found out about del Ferro's solutions from del Ferro's son-in-law, and decided that he was not bounded by the agreement with Tartaglia, as he would present del Ferro's solution and not Tartaglia s, so he published the result in his book Ars Magna. This book is by many contemporary mathematicians considered to be the start of the modern algebra, and it involves solutions with complex numbers, although Cardano did not understand this in detail, as some part of this was from Tartaglia solutions involved square root of a negative number . (Rafael Bombelli is often considered as the discoverer of complex numbers, as he did far more studies of the subject.) After the book was published, Tartaglia immediately posed a challenge to Cardano, which were a rather poor mathematician by the standard of Tartaglia, and he promptly refused. However, Cardano’s student, Lodovico Ferrari, send numerous public challenges to Tartaglia, which Tartaglia denied. Eventually Tartaglia was offered a job at a university, given that he would beat Ferrari in a dispute. Ferrari had discovered a general way of solving the cubic equation, which was not known to Tartaglia, he had also found the solution to the quartic equation as early as 1540, but this required the solution of the cubic equation so it wasnt published until Cardano found out about del Ferro's solutions.

Ferrari won the dispute with Tartaglia, whom left just before the first day into the dispute was over. (Ferrari was quite a character, as he had lost all his fingers on his right hand at the age of seventeen in a brawl). His career skyrocketed from this point on, though he was allegedly later poisoned by his sister and died.

The real discoverer of the third degree formula is quite complicated as you understand, although the solution to the 4th degree equation seems to be Ferrari's work alone.

The formulas are not usually implemented on a computer, as the solutions found by numerical technique is nearly always better with them. If you would still like to use the explicit formulas, you should implement Viete's formula that uses trigonometric functions instead of Ferrari's solutions.

Higher degree polynomial equations

After the Quadratic equation was solved using algebra, many tried to solved Quintic or 5th degree polynomial equation, and they all failed. It wasn't proved until Abel found a general proof of it in 1823 that stated that you actually couldn't. The theorem is known today as the Abel–Ruffini theorem or as Abel's impossibility theorem. The reason for the double name is that Ruffini gave an incomplete proof of the theorem in 1799, which Abel didn't know about until 1826. After reading it and studying it he said that Ruffini's work was so complicated to understand that he wasn't sure if it were correct.

It is however important to know what the proof actually means, and crucially, what it dosen't mean. Abel's proof simply states that one can not find a general solution to all the roots in a Quintic or any higher order polynomial equation by the use of algebra. Abel used a generalization of the Euler integrals to prove it, and the German mathematician Jacobi was beside himself that this discovery had gone unnoticed by the mathematical community.

One can however find the solution to 5th degree equations by the use of numerical methods (Newton-Rapson) or by the use of elliptic integrals. If one uses Évariste Galois theory one can also find out what type of solutions one would find. Évariste Galois also proved that the 5th degree polynomial equation could not be solved independently of Abel and Ruffini's work, and his proof was published posthumously in 1846

The problem with explicit formulas

There are problems with the explicit formulas that have to do with the finite storage space on the computer, which can in some instances give such a high error that it would render the calculated solutions far from the true values. In practice, solutions for the 3rd and higher is almost always better with the Jenkins-Traub algorithm or other similar techniques, than the solutions calculated with the explicit formulas.

There are however problems with the numerical estimation of polynomial, as any person with sufficient understanding of the algorithms behind them, easily could construct an example that would fail to converge. Take the equation (1) below:

Given that it is a 5th degree polynomial solutions could only be found using a numerical algorithm, but we know, from the Fundamental theorem of algebra, that the equation will have exactly 5 real or complex solutions, as Gauss and others have proved. The exact solutions to the equation 1 are:  The problem with this example is that the implemented Jenkins-Traub in C # and VB would not converge (most likely to the double precision that is chosen for the storage of data, as well as the epsilon, min and max values that were "exported" from the float library in VC++). In fact, it can always be problematic for an algorithm of this kind to converge, given the number of multiple roots. The reason is that the algorithm extracts the found roots from the original equation, and with a too large numerical error it can result in that no other solutions are found. So make a note of the fact that any method which uses Newton type iterations, could have problems with multiple roots. This also apply to the circumstances were the roots are closely together (or very far apart) from each other.

The problem with this example is that the implemented Jenkins-Traub in C # and VB would not converge (most likely to the double precision that is chosen for the storage of data, as well as the epsilon, min and max values that were "exported" from the float library in VC++). In fact, it can always be problematic for an algorithm of this kind to converge, given the number of multiple roots. The reason is that the algorithm extracts the found roots from the original equation, and with a too large numerical error it can result in that no other solutions are found. So make a note of the fact that any method which uses Newton type iterations, could have problems with multiple roots. This also apply to the circumstances were the roots are closely together (or very far apart) from each other.

Jenkins-Traub indeed uses a Newton type method to find the roots, and this could also have other problems. If a polynomial with no linear term is zero at zero, the iterative technique would fail to converge, given that both the function and its derivative is zero at zero.

There is another quite surprising thing about polynomials that have the multiple roots. it is that the derivative polynomial will have one fewer of the same roots. Take the example form equation 1, if we derivative the equation we will get:

And when we try to solve this equation using the same Jenkins-Traub algorithm as previously, we find that indeed the same roots are still there, with an additional that wan't. The solutions could easily be checked by inserting the values into the original equation (1):

The actual code has turned so complex that it is hard, if not impossible, to explain it all. Instead Im going to refer you to the procedure of the Jenkins-Traub described on Wikipedia.

It is however important to know that the Jenkins-Traub Algorithm is considered de facto way of numerically calculating the roots of a polynomial. It is extensively tested and it is also implemented in many products that are in commercial use (i.e. Matematica and others).

As for the explicit formulas, they should be used with care, and cannot be assumed to give the correct results for any real coefficients you put in, though the description given in the program should indicate if it is a recognized type, and if the output description matches the calculated roots. Several different kinds of methods are employed to find the roots, among them is Ferrari's equation, the depressed bi quadratic equation and many others.

References

The Wolfram site that provides some formulas used, and other links to the numerical algorithm that is used:

C++ source code:

Books:

Other links: