TensorFlow + JavaScript. The most popular, cutting-edge AI framework now supports the most widely used programming language on the planet. So let’s make text and NLP (Natural Language Processing) chatbot magic happen through Deep Learning right in our web browser, GPU-accelerated via WebGL using TensorFlow.js!

You are welcome to download the project code.

Have you ever wondered what it’s like to be in a movie? To play a scene and be a part of the dialogue? Let’s make it happen with AI!

In this project, we are going to build a chatbot that talks like a movie and responds to us appropriately. And to make this work, we will use a TensorFlow library called Universal Sentence Encoder (USE) to figure out the best response to messages we type in.

Setting Up TensorFlow.js Code

This project runs within a single web page. We’ll include TensorFlow.js and USE, which is a pre-trained transformer-based language processing model. We’ll add a couple of input elements for the user to type messages to our chatbot and read its responses. Two additional utility functions, dotProduct and zipWith, from the USE readme example, will help us determine sentence similarity.

<html>

<head>

<title>AI Chatbot from Movie Quotes: Chatbots in the Browser with TensorFlow.js</title>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/universal-sentence-encoder"></script>

</head>

<body>

<h1 id="status">Movie Dialogue Bot</h1>

<p id="bot-text"></p>

<input id="question" type="text" />

<button id="submit">Send</button>

<script>

function setText( text ) {

document.getElementById( "status" ).innerText = text;

}

const dotProduct = (xs, ys) => {

const sum = xs => xs ? xs.reduce((a, b) => a + b, 0) : undefined;

return xs.length === ys.length ?

sum(zipWith((a, b) => a * b, xs, ys))

: undefined;

}

const zipWith =

(f, xs, ys) => {

const ny = ys.length;

return (xs.length <= ny ? xs : xs.slice(0, ny))

.map((x, i) => f(x, ys[i]));

}

(async () => {

})();

</script>

</body>

</html>
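To see why a dot product is enough to compare sentences, here is a minimal sketch that runs outside the page. The three-element vectors are made-up stand-ins for embeddings (real USE vectors have 512 dimensions), and the helper name dot is an assumption for this example, not part of the USE library.

```javascript
// Dot product of two equal-length numeric arrays
// (the same idea as the dotProduct helper in the page above).
const dot = (xs, ys) => xs.reduce((sum, x, i) => sum + x * ys[i], 0);

// Hypothetical toy "embeddings", made up for illustration only.
const query = [0.6, 0.8, 0.0];
const candidates = {
  similar: [0.8, 0.6, 0.0],   // points in nearly the same direction as the query
  unrelated: [0.0, 0.0, 1.0]  // orthogonal to the query
};

const scoreSimilar = dot(query, candidates.similar);
const scoreUnrelated = dot(query, candidates.unrelated);

// The higher score marks the closer match.
console.log(scoreSimilar > scoreUnrelated);
```

The vector that points in a similar direction to the query gets the higher score, which is the property the chatbot relies on when it ranks quotes.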

Cornell Movie Quotes Dataset

Our chatbot will respond with movie quotes from the Cornell movie quotes dataset. It consists of over 200 thousand conversational messages. For better performance, we’ll compare each incoming message against a random subset of quotes rather than the whole collection. The two files we need to parse are movie_lines.txt and movie_conversations.txt, so that we can create a collection of quotes and matching responses.

Let’s go through every conversation to fill a question/prompt array and a matching response array. Both files use the string " +++$+++ " as the delimiter, and the code looks like this:

let movie_lines = await fetch( "web/movie_lines.txt" ).then( r => r.text() );

let lines = {};

movie_lines.split( "\n" ).forEach( l => {

let parts = l.split( " +++$+++ " );

lines[ parts[ 0 ] ] = parts[ 4 ];

});

let questions = [];

let responses = [];

let movie_conversations = await fetch( "web/movie_conversations.txt" ).then( r => r.text() );

movie_conversations.split( "\n" ).forEach( c => {

let parts = c.split( " +++$+++ " );

if( parts[ 3 ] ) {

let phrases = parts[ 3 ].replace(/[^L0-9 ]/gi, "").split( " " ).filter( x => !!x );

for( let i = 0; i < phrases.length - 1; i++ ) {

questions.push( lines[ phrases[ i ] ] );

responses.push( lines[ phrases[ i + 1 ] ] );

}

}

});
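The parsing step can be tried without downloading the dataset. The sketch below uses a few made-up rows in the same delimited layout; the line IDs, speaker names, and text are hypothetical, and buildPairs is a name chosen for this example. Unlike the code above, it skips pairs where either line is missing, which is a design choice and not something the dataset requires.

```javascript
const DELIM = " +++$+++ ";

// Hypothetical sample rows in the same layout as the two files; the content is made up.
const movieLinesText = [
  "L1 +++$+++ u0 +++$+++ m0 +++$+++ ALICE +++$+++ Where are you going?",
  "L2 +++$+++ u1 +++$+++ m0 +++$+++ BOB +++$+++ Home.",
  "L3 +++$+++ u0 +++$+++ m0 +++$+++ ALICE +++$+++ Can I come along?"
].join("\n");
const movieConversationsText = "u0 +++$+++ u1 +++$+++ m0 +++$+++ ['L1', 'L2', 'L3']";

function buildPairs(linesText, conversationsText) {
  // Map each line ID (first field) to its text (fifth field).
  const lines = {};
  for (const row of linesText.split("\n")) {
    const parts = row.split(DELIM);
    if (parts.length >= 5) lines[parts[0]] = parts[4].trim();
  }
  // Each consecutive pair of line IDs in a conversation becomes a prompt and its reply.
  const questions = [];
  const responses = [];
  for (const row of conversationsText.split("\n")) {
    const ids = (row.split(DELIM)[3] || "").match(/L\d+/g) || [];
    for (let i = 0; i < ids.length - 1; i++) {
      const q = lines[ids[i]];
      const r = lines[ids[i + 1]];
      if (q && r) { questions.push(q); responses.push(r); }
    }
  }
  return { questions, responses };
}

const pairs = buildPairs(movieLinesText, movieConversationsText);
console.log(pairs.questions);
console.log(pairs.responses);
```

A three-line conversation yields two pairs, because the middle line serves both as a reply to the first line and as a prompt for the third.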

Universal Sentence Encoder

The Universal Sentence Encoder (USE) is "a [pre-trained] model that encodes text into 512-dimensional embeddings." For a complete description of the USE and its architecture, please see the Improved Emotion Detection article earlier in this series.

The USE is straightforward to work with. Let’s load its QnA dual encoder, which gives us full-sentence embeddings for all queries and all answers. Sentence embeddings should perform better here than word embeddings, and we can use them to determine the most appropriate quote and response.

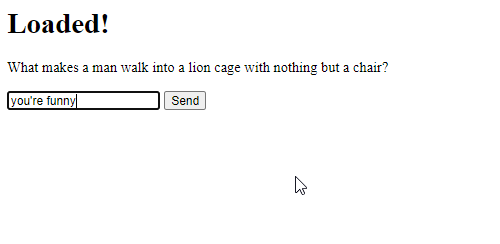

setText( "Loading USE..." );

// Only the QnA dual encoder is used below, so the standard encoder is not loaded.

const model = await use.loadQnA();

setText( "Loaded!" );

Movie Chatbot in Action

Because these sentence embeddings already encode similarity in their vectors, we don’t need to train a separate model. All we need to do is figure out which of the movie quotes is most similar to the user’s submitted message, so that we can reply with the line that followed it. This is done with the QnA encoder. Putting all of the quotes into the encoder could take a long time or overload our computer, so for now we’ll get around this by choosing a random block of up to 200 consecutive quotes for each chat message.

document.getElementById( "submit" ).addEventListener( "click", async function( event ) {

let text = document.getElementById( "question" ).value;

document.getElementById( "question" ).value = "";

const numSamples = 200;

let randomOffset = Math.floor( Math.random() * questions.length );

const input = {

queries: [ text ],

responses: questions.slice( randomOffset, randomOffset + numSamples ) // slice() takes an end index, not a count

};

let embeddings = await model.embed( input );

tf.tidy( () => {

const embed_query = embeddings[ "queryEmbedding" ].arraySync();

const embed_responses = embeddings[ "responseEmbedding" ].arraySync();

let scores = [];

embed_responses.forEach( response => {

scores.push( dotProduct( embed_query[ 0 ], response ) );

});

let id = scores.indexOf( Math.max( ...scores ) );

document.getElementById( "bot-text" ).innerText = responses[ randomOffset + id ];

});

embeddings.queryEmbedding.dispose();

embeddings.responseEmbedding.dispose();

});
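The selection logic in the handler can be isolated from TensorFlow.js and checked on its own. This is a minimal sketch under stated assumptions: pickWindow and argMax are hypothetical helper names, the arrays are placeholders for the parsed quotes, and the scores are made up in place of real embedding dot products. Note that Array.prototype.slice takes a start index and an end index, so the window runs from start to start + size.

```javascript
// Pick a contiguous window [start, end) that always fits inside an array of `total` items.
function pickWindow(total, size, random = Math.random) {
  const count = Math.min(size, total);
  const start = Math.floor(random() * (total - count + 1));
  return { start, end: start + count };
}

// Index of the largest score; returns -1 for an empty list.
function argMax(scores) {
  let best = -1;
  scores.forEach((s, i) => {
    if (best === -1 || s > scores[best]) best = i;
  });
  return best;
}

// Hypothetical stand-ins for the parsed prompt and reply arrays.
const questions = ["q0", "q1", "q2", "q3", "q4"];
const responses = ["r0", "r1", "r2", "r3", "r4"];

// A fixed "random" value keeps this example deterministic.
const { start, end } = pickWindow(questions.length, 3, () => 0.99);
const candidates = questions.slice(start, end);

// Made-up similarity scores, one per candidate.
const scores = [0.1, 0.7, 0.3];

// The best candidate's position is relative to the window, so add the offset back.
const reply = responses[start + argMax(scores)];
console.log(candidates, reply);
```

Bounding the start index this way means the window never runs past the end of the array, so the encoder always receives the same number of candidates whenever enough quotes are available.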

And just like that, you’ve got a chatbot that can talk to you.

Finish Line

Here is the code that makes this chatbot tick:

<html>

<head>

<title>AI Chatbot from Movie Quotes: Chatbots in the Browser with TensorFlow.js</title>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.0.0/dist/tf.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/universal-sentence-encoder"></script>

</head>

<body>

<h1 id="status">Movie Dialogue Bot</h1>

<p id="bot-text"></p>

<input id="question" type="text" />

<button id="submit">Send</button>

<script>

function setText( text ) {

document.getElementById( "status" ).innerText = text;

}

const dotProduct = (xs, ys) => {

const sum = xs => xs ? xs.reduce((a, b) => a + b, 0) : undefined;

return xs.length === ys.length ?

sum(zipWith((a, b) => a * b, xs, ys))

: undefined;

}

const zipWith =

(f, xs, ys) => {

const ny = ys.length;

return (xs.length <= ny ? xs : xs.slice(0, ny))

.map((x, i) => f(x, ys[i]));

}

(async () => {

let movie_lines = await fetch( "web/movie_lines.txt" ).then( r => r.text() );

let lines = {};

movie_lines.split( "\n" ).forEach( l => {

let parts = l.split( " +++$+++ " );

lines[ parts[ 0 ] ] = parts[ 4 ];

});

let questions = [];

let responses = [];

let movie_conversations = await fetch( "web/movie_conversations.txt" ).then( r => r.text() );

movie_conversations.split( "\n" ).forEach( c => {

let parts = c.split( " +++$+++ " );

if( parts[ 3 ] ) {

let phrases = parts[ 3 ].replace(/[^L0-9 ]/gi, "").split( " " ).filter( x => !!x );

for( let i = 0; i < phrases.length - 1; i++ ) {

questions.push( lines[ phrases[ i ] ] );

responses.push( lines[ phrases[ i + 1 ] ] );

}

}

});

setText( "Loading USE..." );

// Only the QnA dual encoder is used below, so the standard encoder is not loaded.

const model = await use.loadQnA();

setText( "Loaded!" );

document.getElementById( "question" ).addEventListener( "keyup", function( event ) {

if( event.key === "Enter" ) { // event.keyCode is deprecated

event.preventDefault();

document.getElementById( "submit" ).click();

}

});

document.getElementById( "submit" ).addEventListener( "click", async function( event ) {

let text = document.getElementById( "question" ).value;

document.getElementById( "question" ).value = "";

const numSamples = 200;

let randomOffset = Math.floor( Math.random() * questions.length );

const input = {

queries: [ text ],

responses: questions.slice( randomOffset, randomOffset + numSamples ) // slice() takes an end index, not a count

};

let embeddings = await model.embed( input );

tf.tidy( () => {

const embed_query = embeddings[ "queryEmbedding" ].arraySync();

const embed_responses = embeddings[ "responseEmbedding" ].arraySync();

let scores = [];

embed_responses.forEach( response => {

scores.push( dotProduct( embed_query[ 0 ], response ) );

});

let id = scores.indexOf( Math.max( ...scores ) );

document.getElementById( "bot-text" ).innerText = responses[ randomOffset + id ];

});

embeddings.queryEmbedding.dispose();

embeddings.responseEmbedding.dispose();

});

})();

</script>

</body>

</html>

What’s Next?

In this article, we put together a chatbot that can hold a conversation with someone. But what’s better than a dialogue? What about… a monologue?

In the final article of this series, we’ll build a Shakespearean Monologue Generator in the Browser with TensorFlow.js.

Raphael Mun is a tech entrepreneur and educator who has been developing software professionally for over 20 years. He currently runs Lemmino, Inc and teaches and entertains through his Instafluff livestreams on Twitch building open source projects with his community.