Here we'll write the Python code for detecting persons in images using SSD models.

In the previous article of this series, we’ve selected for further work two SSD models, one based on MobileNet and another one based on SqueezeNet. In this article, we’ll develop some Python code that will enable us to detect humans in images using these models.

The selected DNNs are realized as Caffe models. A Caffe model consists of two parts: the model structure (.prototxt) file and the trained model (.caffemodel). The Caffe model structure is written in a format similar to JSON. The trained model is a binary serialization of the CNN kernels and other trained data. In the first article of the series, we’ve mentioned that we’ll use the Python OpenCV library with Caffe. What does it mean? Should we install both frameworks, OpenCV and Caffe? Fortunately, no, only OpenCV library. This framework includes the DNN module that directly supports network models developed with TensorFlow, Caffe, Torch, Darknet, and some others. So – lucky us! – the OpenCV framework allows working with both the computer vision algorithms and the deep neural networks. And this is all we need.

Let’s start our Python code with two utility classes:

import cv2

import numpy as np

import os

class CaffeModelLoader:

@staticmethod

def load(proto, model):

net = cv2.dnn.readNetFromCaffe(proto, model)

return net

class FrameProcessor:

def __init__(self, size, scale, mean):

self.size = size

self.scale = scale

self.mean = mean

def get_blob(self, frame):

img = frame

(h, w, c) = frame.shape

if w>h :

dx = int((w-h)/2)

img = frame[0:h, dx:dx+h]

resized = cv2.resize(img, (self.size, self.size), cv2.INTER_AREA)

blob = cv2.dnn.blobFromImage(resized, self.scale, (self.size, self.size), self.mean)

return blob

The CaffeModelLoader class has one static method to load the Caffe model from the disk. The latter FrameProcessor class aims to convert data from an image to a specific format intended for DNN. The constructor of the class receives three parameters. The size parameter defines the size of the input data for DNN processing. Convolutional networks for image processing almost always use square images as input, so we specify only one value for both width and height. The scale and mean parameters are used for scaling data to the value range that was used for training the SSD. The only method of the class is get_blob, which receives an image and returns a blob – a special structure for the neural network processing. To receive the blob, the image is first resized to the specified square. Then the blob is created from the resized image using the blobFromImage method from OpenCV’s DNN module with the specified scale, size, and mean values.

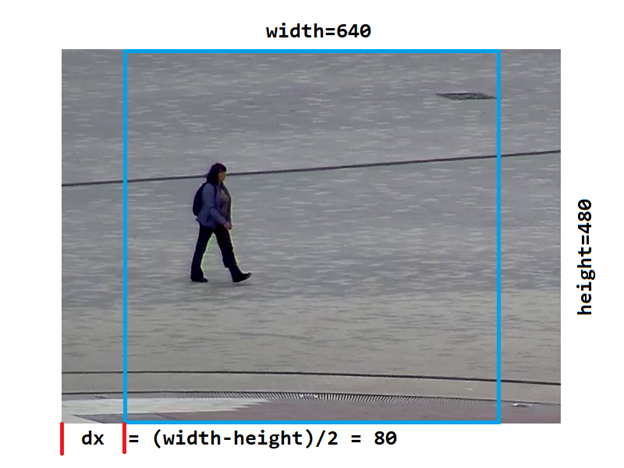

Note the code at the beginning of the get_blob method. This code implements a little "trick": we trim the non-square images to get only the center square part of the image, as shown in the picture below:

This trick is intended to keep the aspect ratio of the image constant. If the width/height ratio changed, the image would get distortion, and the precision of object detection would decrease. One disadvantage of this trick is that we’ll detect persons only in the central square part of the image (shown in blue in the above picture).

Let’s now have a look at the main class for person detection with SSD models:

class SSD:

def __init__(self, frame_proc, ssd_net):

self.proc = frame_proc

self.net = ssd_net

def detect(self, frame):

blob = self.proc.get_blob(frame)

self.net.setInput(blob)

detections = self.net.forward()

k = detections.shape[2]

obj_data = []

for i in np.arange(0, k):

obj = detections[0, 0, i, :]

obj_data.append(obj)

return obj_data

def get_object(self, frame, data):

confidence = int(data[2]*100.0)

(h, w, c) = frame.shape

r_x = int(data[3]*h)

r_y = int(data[4]*h)

r_w = int((data[5]-data[3])*h)

r_h = int((data[6]-data[4])*h)

if w>h :

dx = int((w-h)/2)

r_x = r_x+dx

obj_rect = (r_x, r_y, r_w, r_h)

return (confidence, obj_rect)

def get_objects(self, frame, obj_data, class_num, min_confidence):

objects = []

for (i, data) in enumerate(obj_data):

obj_class = int(data[1])

obj_confidence = data[2]

if obj_class==class_num and obj_confidence>=min_confidence :

obj = self.get_object(frame, data)

objects.append(obj)

return objects

The constructor of the above class has two arguments: frame_proc for converting images to blobs and ssd_net to detect objects. The main method, detect, receives a frame (image) as input and gets a blob from the frame using the specified frame processor. The blob is used as input for the network, and we get the detections with the forward method. These detections are presented as a 4-rank array (tensor). We won’t analyze the entire tensor; we only need the 2nd dimension of the array. We’ll extract it from the detections and return the result – a list of object data.

The object data is a real array. Here is an example:

[array([ 0. , 15. , 0.90723044, 0.56916684, 0.6017439 ,

0.68543154, 0.93739873], dtype=float32)]

The array contains seven numbers:

- The number of the detected objects

- The number of its class

- The confidence that the object belongs to the given class

- Four left-top and the right-bottom coordinates of the object ROI (the coordinates are relative to the blob size)

The second method of the class converts the detection data to a simpler format for further use. It converts the relative confidence to the percentage value and the relative ROI coordinates to the integer data – pixel coordinates in the original image. This method takes into account the fact that the blob data was extracted from the center square of the original frame.

And finally, the get_objects method extracts from the detection data only the objects with the specified class and sufficient confidence. Because DNN models can detect objects of twenty different classes, we must filter the detections for the person class only to be sure that the detected object is really a human, so we specify a high confidence threshold.

One more utility class – for drawing detected objects into images to visualize the results:

class Utils:

@staticmethod

def draw_object(obj, label, color, frame):

(confidence, (x1, y1, w, h)) = obj

x2 = x1+w

y2 = y1+h

cv2.rectangle(frame, (x1, y1), (x2, y2), color, 2)

y3 = y1-12

text = label + " " + str(confidence)+"%"

cv2.putText(frame, text, (x1, y3), cv2.FONT_HERSHEY_SIMPLEX, 0.6, color, 1, cv2.LINE_AA)

@staticmethod

def draw_objects(objects, label, color, frame):

for (i, obj) in enumerate(objects):

Utils.draw_object(obj, label, color, frame)

Now we can write the code to launch the person detection algorithm:

proto_file = r"C:\PI_RPD\mobilenet.prototxt"

model_file = r"C:\PI_RPD\mobilenet.caffemodel"

ssd_net = CaffeModelLoader.load(proto_file, model_file)

print("Caffe model loaded from: "+model_file)

proc_frame_size = 300

ssd_proc = FrameProcessor(proc_frame_size, 1.0/127.5, 127.5)

person_class = 15

ssd = SSD(ssd_proc, ssd_net)

im_dir = r"C:\PI_RPD\test_images"

im_name = "woman_640x480_01.png"

im_path = os.path.join(im_dir, im_name)

image = cv2.imread(im_path)

print("Image read from: "+im_path)

obj_data = ssd.detect(image)

persons = ssd.get_objects(image, obj_data, person_class, 0.5)

person_count = len(persons)

print("Person count on the image: "+str(person_count))

Utils.draw_objects(persons, "PERSON", (0, 0, 255), image)

res_dir = r"C:\PI_RPD\results"

res_path = os.path.join(res_dir, im_name)

cv2.imwrite(res_path, image)

print("Result written to: "+res_path)

The code implements a frame processor with size=300 because the models we use work with images sized 300 x 300 pixels. The scale and mean parameters have the same values that were used for the MobileNet model training. These values must always be assigned to the model’s training values, else the precision of the model decreases. The person_class value is 15 because human is the 15-th class in the model context.

Running the code on sample images produces the results below:

We used very simple cases to detect persons. The goal was just to check that our code worked correctly and the DNN model could predict a person’s presence in an image. And it worked!

Next Steps

The next step is to launch our code on a Raspberry Pi device. In the next article, we’ll see how you can install Python-OpenCV on the device and run the code.