Introduction

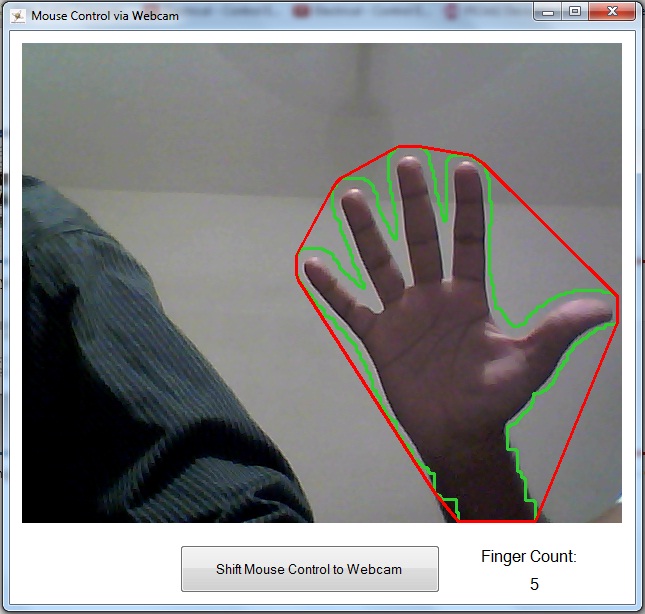

This application uses Emgu CV, a .NET wrapper for OpenCV, to process webcam images, recognize hand gestures, and control the mouse with them. Hand movement moves the cursor, and specific hand gestures trigger click events.

Background

I always wanted a handy app like this to control the mouse. So when Sir Sajid Hussain, our teacher at the Institute of Industrial Electronic Engineering (IIEE), asked us to build an application in C#, we (Sajjad Idrees, Saad Hafeez, and I) decided this would be it. Many earlier applications perform a similar task, but they usually rely on a Kinect or other special hardware; very few take their input from a plain webcam. The application is far from perfect and still needs a lot of work, but it does work.

Using the Code

The code uses OpenCV, a well-known image processing library, through its .NET wrapper Emgu CV. The code is simple and easy to follow.

First, the application tries to open a video input device. If that succeeds, it registers ProcessFramAndUpdateGUI() as a handler for Application.Idle; all of the processing happens in that function.

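    private void Form1_Load(object sender, EventArgs e)
    {
        try
        {
            // Open the default video input device.
            CapWebCam = new Capture();
        }
        catch (Exception ex)
        {
            // No usable camera: report the problem and skip frame processing.
            MessageBox.Show(ex.Message);
            return;
        }
        // Process one frame whenever the UI thread is idle.
        Application.Idle += ProcessFramAndUpdateGUI;
    }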

The processing code is divided into three main parts:

- Filtering the image to obtain the biggest skin-colored contour

- Finding convexity defects to count the number of fingers, for mouse click events

- Finding the center of the palm and filtering out noise to move the cursor

Filtering to Obtain Skin Colored Contour

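First, a YCbCr filter is applied, with thresholds chosen to isolate the skin-colored parts of the image. The Y (luma) channel is left unconstrained at 0 to 255, so the filter depends only on the two chroma channels.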

    int Finger_num = 0;
    Double Result1 = 0;
    Double Result2 = 0;
    // Grab the next frame; skip this pass if none is available.
    imgOrignal = CapWebCam.QueryFrame();
    if (imgOrignal == null) return;
    // Convert to YCrCb and keep only the pixels inside the skin-tone range.
    Image<Ycc, Byte> currentYCrCbFrame = imgOrignal.Convert<Ycc, byte>();
    Image<Gray, byte> skin = new Image<Gray, byte>(imgOrignal.Width, imgOrignal.Height);
    skin = currentYCrCbFrame.InRange(new Ycc(0, 131, 80), new Ycc(255, 185, 135));

Next, the filtered image is eroded and then dilated using CvInvoke.cvErode() and CvInvoke.cvDilate(). Erosion removes small specks of noise, and dilation grows the surviving regions back.

    // Erode once with a 10x10 rectangle (anchor at 5,5).
    StructuringElementEx rect_12 =
        new StructuringElementEx(10, 10, 5, 5, Emgu.CV.CvEnum.CV_ELEMENT_SHAPE.CV_SHAPE_RECT);
    CvInvoke.cvErode(skin, skin, rect_12, 1);
    // Dilate twice with a 6x6 rectangle (anchor at 3,3).
    StructuringElementEx rect_6 =
        new StructuringElementEx(6, 6, 3, 3, Emgu.CV.CvEnum.CV_ELEMENT_SHAPE.CV_SHAPE_RECT);
    CvInvoke.cvDilate(skin, skin, rect_6, 2);
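
To see why an erode-then-dilate sequence (a morphological opening) cleans up the mask, here is a small self-contained sketch on a boolean grid. It is not the Emgu CV implementation: MorphologySketch, Apply and Count are hypothetical names, the structuring element is a fixed 3x3 square rather than the 10x10 and 6x6 rectangles used in the project, and pixels outside the image are simply ignored.

```csharp
using System;

public static class MorphologySketch
{
    // Applies a 3x3 square structuring element to a binary mask.
    // erode = true : a pixel survives only if every in-bounds neighbour is set.
    // erode = false: (dilation) a pixel is set if any in-bounds neighbour is set.
    public static bool[,] Apply(bool[,] src, bool erode)
    {
        int h = src.GetLength(0), w = src.GetLength(1);
        var dst = new bool[h, w];
        for (int y = 0; y < h; y++)
        {
            for (int x = 0; x < w; x++)
            {
                bool result = erode; // start at true for AND, false for OR
                for (int dy = -1; dy <= 1; dy++)
                {
                    for (int dx = -1; dx <= 1; dx++)
                    {
                        int ny = y + dy, nx = x + dx;
                        // Assumption of this sketch: neighbours outside the image are skipped.
                        if (ny < 0 || ny >= h || nx < 0 || nx >= w) continue;
                        if (erode) result &= src[ny, nx];
                        else result |= src[ny, nx];
                    }
                }
                dst[y, x] = result;
            }
        }
        return dst;
    }

    // Counts the set pixels in a mask.
    public static int Count(bool[,] img)
    {
        int n = 0;
        foreach (bool b in img) if (b) n++;
        return n;
    }
}
```

On a 7x7 mask that contains one stray pixel and a solid 3x3 block, erosion removes the stray pixel and shrinks the block to its center; one dilation then grows the block back to 3x3 while the stray pixel stays gone.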

Finally, the image is smoothed with a Gaussian filter, and the biggest contour is extracted from the result.

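    skin = skin.SmoothGaussian(9);
    Contour<Point> contours = skin.FindContours();
    Contour<Point> biggestContour = null;
    // Walk the contour list and keep the contour with the largest area.
    while (contours != null)
    {
        Result1 = contours.Area;
        // Assumed completion: the original listing breaks off inside this condition.
        if (Result1 > Result2)
        {
            Result2 = Result1;
            biggestContour = contours;
        }
        contours = contours.HNext;
    }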

Counting Fingers using Convexity Defects

To determine the number of fingers, I used a popular technique based on convexity defects. It finds the defects of the contour, that is, the gaps between the contour and its convex hull. The gaps between extended fingers show up as deep defects, which lets us count the fingers in the image. The code is as follows:

    if (biggestContour != null)
    {
        Finger_num = 0;
        // Simplify the contour slightly, then draw it on the frame.
        biggestContour = biggestContour.ApproxPoly(0.00025);
        imgOrignal.Draw(biggestContour, new Bgr(Color.LimeGreen), 2);
        // Convex hull and convexity defects of the hand contour.
        // "GetConvexityDefacts" is the method's spelling in Emgu CV 2.x.
        Hull = biggestContour.GetConvexHull(ORIENTATION.CV_CLOCKWISE);
        defects = biggestContour.GetConvexityDefacts(storage, ORIENTATION.CV_CLOCKWISE);
        imgOrignal.DrawPolyline(Hull.ToArray(), true, new Bgr(0, 0, 255), 2);
        box = biggestContour.GetMinAreaRect();
        defectArray = defects.ToArray();
        for (int i = 0; i < defects.Total; i++)
        {
            PointF startPoint = new PointF((float)defectArray[i].StartPoint.X,
                                           (float)defectArray[i].StartPoint.Y);
            PointF depthPoint = new PointF((float)defectArray[i].DepthPoint.X,
                                           (float)defectArray[i].DepthPoint.Y);
            PointF endPoint = new PointF((float)defectArray[i].EndPoint.X,
                                         (float)defectArray[i].EndPoint.Y);
            CircleF startCircle = new CircleF(startPoint, 5f);
            CircleF depthCircle = new CircleF(depthPoint, 5f);
            CircleF endCircle = new CircleF(endPoint, 5f);
            // Count a finger when the defect lies above the box center, its start
            // point is above its depth point, and the start-to-depth distance
            // exceeds the box height divided by 6.5.
            if ((startCircle.Center.Y < box.center.Y || depthCircle.Center.Y < box.center.Y) &&
                (startCircle.Center.Y < depthCircle.Center.Y) &&
                (Math.Sqrt(Math.Pow(startCircle.Center.X - depthCircle.Center.X, 2) +
                           Math.Pow(startCircle.Center.Y - depthCircle.Center.Y, 2)) >
                 box.size.Height / 6.5))
            {
                Finger_num++;
            }
        }
    }

With the finger count known, I tied the mouse click to it. To click the left mouse button, the user opens his or her palm; as soon as the finger count reaches four or more, a left click is triggered.
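
The per-defect test used while counting fingers can be easier to reason about as a standalone function. The sketch below restates that condition with plain floats; FingerTest and IsFinger are hypothetical names introduced here, not part of the project. Image coordinates grow downward, so a smaller Y means higher in the frame.

```csharp
using System;

public static class FingerTest
{
    // Hypothetical helper mirroring the article's condition for a single defect.
    // start = defect start point (fingertip side), depth = deepest point of the defect,
    // boxCenterY / boxHeight = center and height of the contour's bounding box.
    public static bool IsFinger(float startX, float startY,
                                float depthX, float depthY,
                                float boxCenterY, float boxHeight)
    {
        // At least one of the two points lies above the center of the hand.
        bool aboveCenter = startY < boxCenterY || depthY < boxCenterY;
        // The start point sits above the depth point (the finger points upward).
        bool startAboveDepth = startY < depthY;
        // The defect is deep enough relative to the size of the hand.
        double length = Math.Sqrt(Math.Pow(startX - depthX, 2) +
                                  Math.Pow(startY - depthY, 2));
        return aboveCenter && startAboveDepth && length > boxHeight / 6.5;
    }
}
```

With made-up values, a defect that starts at (100, 50) and bottoms out at (110, 150) in a hand whose box is centered at Y = 200 and is 300 pixels tall counts as a finger, while a shallow defect from (100, 140) to (110, 150) does not, because its length of about 14 pixels is below 300 / 6.5.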

Finding the Center of Contour

Finally, we find the center of the contour using its moments. Once we had the coordinates of the center, we noticed that they fluctuated too much, because the detected hand outline flickers continuously from frame to frame. To deal with this, we divide the center coordinates by 10 using integer division, which drops the units digit; the fluctuation was confined to the units digit, so the tens digit and above stayed stable. Here is the code:

    // Compute the spatial moments of the biggest contour.
    MCvMoments moment = new MCvMoments();
    moment = biggestContour.GetMoments();
    CvInvoke.cvMoments(biggestContour, ref moment, 0);
    double m_00 = CvInvoke.cvGetSpatialMoment(ref moment, 0, 0);
    double m_10 = CvInvoke.cvGetSpatialMoment(ref moment, 1, 0);
    double m_01 = CvInvoke.cvGetSpatialMoment(ref moment, 0, 1);
    // Centroid = (m_10 / m_00, m_01 / m_00); integer division by 10 drops the units digit.
    int current_X = Convert.ToInt32(m_10 / m_00) / 10;
    int current_Y = Convert.ToInt32(m_01 / m_00) / 10;
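
To make the arithmetic concrete, here is a minimal sketch of the quantization step in isolation. The names CentroidQuantizer, ToCell and ToScreen are hypothetical and not part of the project, and the moment values in the note below are made-up examples. Convert.ToInt32 rounds to the nearest integer before the integer division discards the units digit; the factor of 20 is the one the article applies when it sets the cursor position.

```csharp
using System;

public static class CentroidQuantizer
{
    // Centroid coordinate (m1 / m00) rounded to an int, then divided by 10 with
    // integer division so that the units digit is dropped.
    // The caller must make sure m00 is greater than zero.
    public static int ToCell(double m1, double m00)
    {
        return Convert.ToInt32(m1 / m00) / 10;
    }

    // Scales a quantized cell index to a screen coordinate.
    public static int ToScreen(int cell)
    {
        return cell * 20;
    }
}
```

With m00 = 1000, centroids at 341.2 and 348.9 both fall in cell 34 and map to screen coordinate 680, so jitter inside a cell no longer moves the cursor. A centroid at 349.6 rounds up to 350 and lands in cell 35, so jitter right at a cell boundary can still move the cursor by one step.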

We also ran into another problem: the center shifts when the palm opens and closes. To work around it, the cursor is only moved while the palm is closed, i.e., when the finger count is zero or one; otherwise the cursor stays where it was. The code below also shows the mouse click event:

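    // Closed palm (zero or one finger): move the cursor.
    // The quantized coordinates are scaled by 20 to get a screen position.
    if (Finger_num == 0 || Finger_num == 1)
    {
        Cursor.Position = new Point(current_X * 20, current_Y * 20);
    }
    // Open palm (four or more fingers): click.
    if (Finger_num >= 4)
    {
        DoMouseClick();
    }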

The definition of DoMouseClick() is as follows. It relies on the user32.dll function mouse_event(), which has been superseded by SendInput(), but we used it anyway.

    // Requires: using System.Runtime.InteropServices;
    [DllImport("user32.dll")]
    static extern void mouse_event(uint dwFlags, uint dx, uint dy, uint dwData,
                                   UIntPtr dwExtraInfo);

    private const int MOUSEEVENTF_LEFTDOWN = 0x02;
    private const int MOUSEEVENTF_LEFTUP = 0x04;
    private const int MOUSEEVENTF_RIGHTDOWN = 0x08;
    private const int MOUSEEVENTF_RIGHTUP = 0x10;

    public void DoMouseClick()
    {
        uint X = Convert.ToUInt32(Cursor.Position.X);
        uint Y = Convert.ToUInt32(Cursor.Position.Y);
        // Press and release the left button at the current cursor position.
        mouse_event(MOUSEEVENTF_LEFTDOWN | MOUSEEVENTF_LEFTUP, X, Y, 0, UIntPtr.Zero);
    }
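
For readers who prefer the newer API, the following is a rough sketch of the same left click issued through SendInput(). It is not the code the project uses. SendInputSketch, BuildLeftClick and LeftClick are hypothetical names, and the INPUT struct declares only the mouse member of the native union, which is assumed to be enough here because that member is the largest one.

```csharp
using System;
using System.Runtime.InteropServices;

public static class SendInputSketch
{
    [StructLayout(LayoutKind.Sequential)]
    public struct MOUSEINPUT
    {
        public int dx;
        public int dy;
        public uint mouseData;
        public uint dwFlags;
        public uint time;
        public IntPtr dwExtraInfo;
    }

    [StructLayout(LayoutKind.Sequential)]
    public struct INPUT
    {
        public uint type;      // 0 = INPUT_MOUSE
        public MOUSEINPUT mi;  // only the mouse variant of the native union is declared
    }

    private const uint INPUT_MOUSE = 0;
    private const uint MOUSEEVENTF_LEFTDOWN = 0x0002;
    private const uint MOUSEEVENTF_LEFTUP = 0x0004;

    [DllImport("user32.dll", SetLastError = true)]
    private static extern uint SendInput(uint nInputs, INPUT[] pInputs, int cbSize);

    // Builds a press event followed by a release event for the left button.
    public static INPUT[] BuildLeftClick()
    {
        var down = new INPUT { type = INPUT_MOUSE };
        down.mi.dwFlags = MOUSEEVENTF_LEFTDOWN;
        var up = new INPUT { type = INPUT_MOUSE };
        up.mi.dwFlags = MOUSEEVENTF_LEFTUP;
        return new[] { down, up };
    }

    // Sends the click at the current cursor position (Windows only).
    // Returns true when both events were inserted into the input stream.
    public static bool LeftClick()
    {
        INPUT[] inputs = BuildLeftClick();
        uint sent = SendInput((uint)inputs.Length, inputs, Marshal.SizeOf(typeof(INPUT)));
        return sent == inputs.Length;
    }
}
```

Under these assumptions, DoMouseClick() could call SendInputSketch.LeftClick() instead of mouse_event(). Because no movement flag is set, the click happens wherever the cursor already is.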

That covers the code; we hope it helps you. There is still a lot to do to perfect this application, and we hope suggestions from readers will help us improve it.

Note: The Emgu CV DLLs are not included in the attached file; you must install Emgu CV and copy its DLLs to run the project.

Run this Application on Your PC

Running this application on your PC takes three steps:

- Download and install the Emgu CV library from here.

- Copy all files from "C:\Emgu\emgucv-windows-x86 2.4.0.1717\bin\x86\" into your system32 folder.

- Download and install the application's setup from the following link:

Points of Interest

Now that Windows 8 is on the market and brings a tablet-style touch experience to the PC, we are thinking of extending this application so that hand movements can provide touch-like input on a laptop.