Introduction

The implementation of this filter is based on my BaseClasses.NET library, which is described in my previous post (Pure .NET DirectShow Filters in C#). Since people asked for such a filter, I decided to make it and to put it into a separate article, because I think some implementation notes and a description of the code are necessary here.

Background

Most capture devices in the system are exposed as WDM drivers, and those drivers are handled via the WDM Video Capture Filter, which is a proxy from Kernel Streaming into Microsoft DirectShow. That proxy filter wraps every capture device driver and is registered under the device name in a specific DirectShow filter category (Video Capture Sources). In this article I describe how to make a virtual, non-WDM Video Capture Source in C#.

Implementation

The core functionality of the filter is capturing the screen and providing that data as a video stream; it works the same way as in the previous post. We use BaseSourceFilter and SourceStream as the base classes for our filter and output pin. A virtual video capture source has to be registered in the Video Capture Sources category. In addition, the output pin should implement at least the IKsPropertySet and IAMStreamConfig interfaces, and the filter should implement the IAMFilterMiscFlags interface.

[ComVisible(false)]

public class VirtualCamStream: SourceStream

, IAMStreamControl

, IKsPropertySet

, IAMPushSource

, IAMLatency

, IAMStreamConfig

, IAMBufferNegotiation

.........

[ComVisible(true)]

[Guid("9100239C-30B4-4d7f-ABA8-854A575C9DFB")]

[AMovieSetup(Merit.Normal, "860BB310-5D01-11d0-BD3B-00A0C911CE86")]

[PropPageSetup(typeof(AboutForm))]

public class VirtualCamFilter : BaseSourceFilter

, IAMFilterMiscFlags

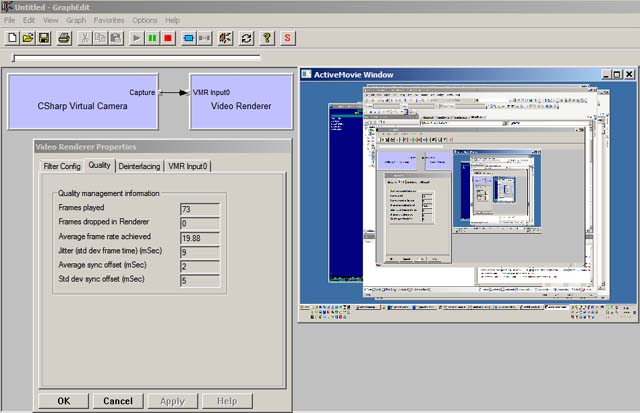

As you can see, I added support for other interfaces on the output pin. You could implement all the same interfaces as the real WDM proxy filter, but I implemented only the ones most useful to applications. IAMStreamControl is used to specify start and stop notifications (cameras sometimes need 0.5 to 3 seconds for calibration, and an application can use this interface to skip the startup samples, or for other needs). IAMPushSource controls the timing offset and latency of media samples (mostly used for configuring audio/video sync). IAMBufferNegotiation allows configuring the allocator settings. You can find more details about these interfaces in MSDN. The registered filter looks like this in GraphEdit.

IKsPropertySet interface

Using this interface we specify the pin category GUID. This is necessary when you specify a category while rendering a pin with the ICaptureGraphBuilder2 interface, or when you look for a pin with a specified category. As a requirement, one pin of such a filter should have the Capture category. The implementation is simple:

public int Set(Guid guidPropSet, int dwPropID, IntPtr pInstanceData, int cbInstanceData, IntPtr pPropData, int cbPropData)

{

return E_NOTIMPL;

}

public int Get(Guid guidPropSet, int dwPropID, IntPtr pInstanceData, int cbInstanceData, IntPtr pPropData, int cbPropData, out int pcbReturned)

{

pcbReturned = Marshal.SizeOf(typeof(Guid));

if (guidPropSet != PropSetID.Pin)

{

return E_PROP_SET_UNSUPPORTED;

}

if (dwPropID != (int)AMPropertyPin.Category)

{

return E_PROP_ID_UNSUPPORTED;

}

if (pPropData == IntPtr.Zero)

{

return NOERROR;

}

if (cbPropData < Marshal.SizeOf(typeof(Guid)))

{

return E_UNEXPECTED;

}

Marshal.StructureToPtr(PinCategory.Capture, pPropData, false);

return NOERROR;

}

public int QuerySupported(Guid guidPropSet, int dwPropID, out KSPropertySupport pTypeSupport)

{

pTypeSupport = KSPropertySupport.Get;

if (guidPropSet != PropSetID.Pin)

{

return E_PROP_SET_UNSUPPORTED;

}

if (dwPropID != (int)AMPropertyPin.Category)

{

return E_PROP_ID_UNSUPPORTED;

}

return S_OK;

}
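
To make the calling convention of Get easier to follow, here is a minimal, self-contained sketch of the two-step pattern a caller typically uses: first ask for the required size with a null buffer, then allocate and fetch the value. FakeGet is a hypothetical stand-in for the pin's Get method (it is not part of BaseClasses.NET), and the HRESULT values are written out as plain integers. The GUID is PIN_CATEGORY_CAPTURE from the DirectShow headers.

```csharp
using System;
using System.Runtime.InteropServices;

public static class PinCategoryDemo
{
    // PIN_CATEGORY_CAPTURE as defined in the DirectShow headers (uuids.h).
    public static readonly Guid PinCategoryCapture =
        new Guid("fb6c4281-0353-11d1-905f-0000c0cc16ba");

    // Hypothetical stand-in for the pin's Get(): always reports the required
    // size and, when a large enough buffer is supplied, writes the GUID into it.
    public static int FakeGet(IntPtr pPropData, int cbPropData, out int pcbReturned)
    {
        pcbReturned = Marshal.SizeOf(typeof(Guid));
        if (pPropData == IntPtr.Zero) return 0;                           // S_OK, size query only
        if (cbPropData < pcbReturned) return unchecked((int)0x8000FFFF);  // E_UNEXPECTED
        Marshal.StructureToPtr(PinCategoryCapture, pPropData, false);
        return 0;                                                         // S_OK
    }

    // Caller side: query the size, allocate unmanaged memory, read the GUID back.
    public static Guid QueryCategory()
    {
        int size;
        FakeGet(IntPtr.Zero, 0, out size);
        IntPtr buffer = Marshal.AllocHGlobal(size);
        try
        {
            int returned;
            int hr = FakeGet(buffer, size, out returned);
            if (hr != 0) throw new InvalidOperationException("Get failed");
            return (Guid)Marshal.PtrToStructure(buffer, typeof(Guid));
        }
        finally
        {
            Marshal.FreeHGlobal(buffer);
        }
    }

    public static void Main()
    {
        Console.WriteLine(QueryCategory() == PinCategoryCapture);
    }
}
```

A real caller would go through the IKsPropertySet COM interface on the pin instead of FakeGet, but the buffer handling is the same.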

IAMStreamConfig interface

This is the major interface that allows applications to configure the output pin format and resolution. Through this interface the filter returns all available resolutions and formats, by index, in a VideoStreamConfigCaps structure. Along with that structure, the interface can return the media type (the actual one or an available one, by index). Note that you can return only one media type and still describe the supported settings properly in the configuration structure; applications should handle that as well (not all of them do, but most professional software works properly). Note also that the values in the VideoStreamConfigCaps structure can differ at each index, which means the filter can produce output with a different aspect ratio or other parameters that belong to the returned media type. For example, a filter can expose different width and height granularity for RGB24 and RGB32.

The media types returned by this interface can also differ from the types retrieved via the IEnumMediaTypes interface (applications should handle that too). For example, if a capture source supports several colorspaces on output, it can return media types with different colorspaces via IAMStreamConfig and only the one "active" colorspace via IEnumMediaTypes. The "active" colorspace in that case depends on the SetFormat call. In this filter I took the simple route: I return all media types both ways and have only one configuration. Let's declare the configuration constants:

private const int c_iDefaultWidth = 1024;

private const int c_iDefaultHeight = 756;

private const int c_nDefaultBitCount = 32;

private const int c_iDefaultFPS = 20;

private const int c_iFormatsCount = 8;

private const int c_nGranularityW = 160;

private const int c_nGranularityH = 120;

private const int c_nMinWidth = 320;

private const int c_nMinHeight = 240;

private const int c_nMaxWidth = c_nMinWidth + c_nGranularityW * (c_iFormatsCount - 1);

private const int c_nMaxHeight = c_nMinHeight + c_nGranularityH * (c_iFormatsCount - 1);

private const int c_nMinFPS = 1;

private const int c_nMaxFPS = 30;
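
As a quick check of what these constants imply, the following standalone sketch lists the resolutions produced by stepping from the minimum size by the granularity. The class and method names are made up for illustration; only the numbers come from the constants above.

```csharp
using System;
using System.Collections.Generic;

public static class FormatTable
{
    // Values copied from the configuration constants above.
    const int FormatsCount = 8;
    const int GranularityW = 160, GranularityH = 120;
    const int MinWidth = 320, MinHeight = 240;

    // Builds "WxH" strings for every step between the minimum and maximum size.
    public static List<string> Enumerate()
    {
        var result = new List<string>();
        for (int i = 0; i < FormatsCount; i++)
        {
            int w = MinWidth + GranularityW * i;
            int h = MinHeight + GranularityH * i;
            result.Add(w + "x" + h);
        }
        return result;
    }

    public static void Main()
    {
        // Prints: 320x240, 480x360, 640x480, 800x600, 960x720, 1120x840, 1280x960, 1440x1080
        Console.WriteLine(string.Join(", ", Enumerate()));
    }
}
```

The last entry, 1440x1080, is exactly c_nMaxWidth by c_nMaxHeight, which is why those two constants are derived from the format count.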

Getting the number of formats and the structure size:

public int GetNumberOfCapabilities(out int iCount, out int iSize)

{

iCount = 0;

AMMediaType mt = new AMMediaType();

while (GetMediaType(iCount, ref mt) == S_OK) { mt.Free(); iCount++; };

iSize = Marshal.SizeOf(typeof(VideoStreamConfigCaps));

return NOERROR;

}

Filling the VideoStreamConfigCaps structure:

public int GetDefaultCaps(int nIndex, out VideoStreamConfigCaps _caps)

{

_caps = new VideoStreamConfigCaps();

_caps.guid = FormatType.VideoInfo;

_caps.VideoStandard = AnalogVideoStandard.None;

_caps.InputSize.Width = c_iDefaultWidth;

_caps.InputSize.Height = c_iDefaultHeight;

_caps.MinCroppingSize.Width = c_nMinWidth;

_caps.MinCroppingSize.Height = c_nMinHeight;

_caps.MaxCroppingSize.Width = c_nMaxWidth;

_caps.MaxCroppingSize.Height = c_nMaxHeight;

_caps.CropGranularityX = c_nGranularityW;

_caps.CropGranularityY = c_nGranularityH;

_caps.CropAlignX = 0;

_caps.CropAlignY = 0;

_caps.MinOutputSize.Width = _caps.MinCroppingSize.Width;

_caps.MinOutputSize.Height = _caps.MinCroppingSize.Height;

_caps.MaxOutputSize.Width = _caps.MaxCroppingSize.Width;

_caps.MaxOutputSize.Height = _caps.MaxCroppingSize.Height;

_caps.OutputGranularityX = _caps.CropGranularityX;

_caps.OutputGranularityY = _caps.CropGranularityY;

_caps.StretchTapsX = 0;

_caps.StretchTapsY = 0;

_caps.ShrinkTapsX = 0;

_caps.ShrinkTapsY = 0;

_caps.MinFrameInterval = UNITS / c_nMaxFPS;

_caps.MaxFrameInterval = UNITS / c_nMinFPS;

_caps.MinBitsPerSecond = (_caps.MinOutputSize.Width * _caps.MinOutputSize.Height * c_nDefaultBitCount) * c_nMinFPS;

_caps.MaxBitsPerSecond = (_caps.MaxOutputSize.Width * _caps.MaxOutputSize.Height * c_nDefaultBitCount) * c_nMaxFPS;

return NOERROR;

}
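
The frame interval and bit rate fields are simple arithmetic, and it can help to see the actual numbers. This is a small standalone sketch, assuming UNITS is 10,000,000 (the number of 100 ns reference time ticks per second); the helper names are hypothetical.

```csharp
using System;

public static class CapsMath
{
    // Reference time is measured in 100 ns ticks, so one second is 10,000,000 units.
    public const long Units = 10000000;

    // Duration of one frame at the given frame rate, in 100 ns units.
    public static long FrameInterval(int fps)
    {
        return Units / fps;
    }

    // Uncompressed bit rate for the given frame size, color depth and frame rate.
    public static long BitsPerSecond(int width, int height, int bitCount, int fps)
    {
        return (long)width * height * bitCount * fps;
    }

    public static void Main()
    {
        Console.WriteLine(FrameInterval(30));                  // 333333     (MinFrameInterval)
        Console.WriteLine(FrameInterval(1));                   // 10000000   (MaxFrameInterval)
        Console.WriteLine(BitsPerSecond(320, 240, 32, 1));     // 2457600    (MinBitsPerSecond)
        Console.WriteLine(BitsPerSecond(1440, 1080, 32, 30));  // 1492992000 (MaxBitsPerSecond)
    }
}
```

The largest value still fits in a signed 32-bit integer, but a larger maximum resolution or frame rate would get close to that limit.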

Retrieving the caps and media type:

public int GetStreamCaps(int iIndex,out AMMediaType ppmt, out VideoStreamConfigCaps _caps)

{

ppmt = null;

_caps = null;

if (iIndex < 0) return E_INVALIDARG;

ppmt = new AMMediaType();

HRESULT hr = (HRESULT)GetMediaType(iIndex, ref ppmt);

if (FAILED(hr)) return hr;

if (hr == VFW_S_NO_MORE_ITEMS) return S_FALSE;

hr = (HRESULT)GetDefaultCaps(iIndex, out _caps);

return hr;

}

Implementing the GetMediaType method:

public int GetMediaType(int iPosition, ref AMMediaType pMediaType)

{

if (iPosition < 0) return E_INVALIDARG;

VideoStreamConfigCaps _caps;

GetDefaultCaps(0, out _caps);

int nWidth = 0;

int nHeight = 0;

if (iPosition == 0)

{

if (Pins.Count > 0 && Pins[0].CurrentMediaType.majorType == MediaType.Video)

{

pMediaType.Set(Pins[0].CurrentMediaType);

return NOERROR;

}

nWidth = _caps.InputSize.Width;

nHeight = _caps.InputSize.Height;

}

else

{

iPosition--;

nWidth = _caps.MinOutputSize.Width + _caps.OutputGranularityX * iPosition;

nHeight = _caps.MinOutputSize.Height + _caps.OutputGranularityY * iPosition;

if (nWidth > _caps.MaxOutputSize.Width || nHeight > _caps.MaxOutputSize.Height)

{

return VFW_S_NO_MORE_ITEMS;

}

}

pMediaType.majorType = DirectShow.MediaType.Video;

pMediaType.formatType = DirectShow.FormatType.VideoInfo;

VideoInfoHeader vih = new VideoInfoHeader();

vih.AvgTimePerFrame = m_nAvgTimePerFrame;

vih.BmiHeader.Compression = BI_RGB;

vih.BmiHeader.BitCount = (short)m_nBitCount;

vih.BmiHeader.Width = nWidth;

vih.BmiHeader.Height = nHeight;

vih.BmiHeader.Planes = 1;

vih.BmiHeader.ImageSize = vih.BmiHeader.Width * Math.Abs(vih.BmiHeader.Height) * vih.BmiHeader.BitCount / 8;

if (vih.BmiHeader.BitCount == 32)

{

pMediaType.subType = DirectShow.MediaSubType.RGB32;

}

if (vih.BmiHeader.BitCount == 24)

{

pMediaType.subType = DirectShow.MediaSubType.RGB24;

}

AMMediaType.SetFormat(ref pMediaType, ref vih);

pMediaType.fixedSizeSamples = true;

pMediaType.sampleSize = vih.BmiHeader.ImageSize;

return NOERROR;

}

In this code, at position 0 we return the media type currently set on the output pin if there is one; otherwise we return the default output width and height. At any other position we calculate the output resolution from the index.

Implementing the SetFormat method:

public int SetFormat(AMMediaType pmt)

{

if (m_Filter.IsActive) return VFW_E_WRONG_STATE;

HRESULT hr;

AMMediaType _newType = new AMMediaType(pmt);

AMMediaType _oldType = new AMMediaType(m_mt);

hr = (HRESULT)CheckMediaType(_newType);

if (FAILED(hr)) return hr;

m_mt.Set(_newType);

if (IsConnected)

{

hr = (HRESULT)Connected.QueryAccept(_newType);

if (SUCCEEDED(hr))

{

hr = (HRESULT)m_Filter.ReconnectPin(this, _newType);

if (SUCCEEDED(hr))

{

hr = (HRESULT)(m_Filter as VirtualCamFilter).SetMediaType(_newType);

}

else

{

m_mt.Set(_oldType);

m_Filter.ReconnectPin(this, _oldType);

}

}

}

else

{

hr = (HRESULT)(m_Filter as VirtualCamFilter).SetMediaType(_newType);

}

return hr;

}

Here the _newType variable is the media type being set, but the caller may have configured it only partially, for example by changing only the colorspace (going from RGB32 to YUY2 requires recalculating the image size and updating the bits per pixel) or by modifying the resolution without changing the image size. I did not handle those situations, but you can complete the new type before passing it to CheckMediaType, because a partially initialized type will be rejected by that method. Most applications pass proper types, but partially initialized types are possible.
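
One possible way to complete a partially filled type before validation is sketched below. This is not what the filter does; it is only an illustration under two assumptions: a zero field means "not specified by the caller", and the image size is always recomputed rather than trusted. SimpleVideoFormat is a hypothetical stand-in for the fields of the BITMAPINFOHEADER that matter here.

```csharp
using System;

// Hypothetical, simplified stand-in for the relevant BITMAPINFOHEADER fields.
public sealed class SimpleVideoFormat
{
    public int Width;
    public int Height;
    public int BitCount;
    public int ImageSize;
}

public static class FormatNormalizer
{
    // Fills unspecified (zero) fields from the current format and recomputes
    // the image size so that it always matches width, height and bit count.
    public static SimpleVideoFormat Normalize(SimpleVideoFormat requested, SimpleVideoFormat current)
    {
        var f = new SimpleVideoFormat();
        f.Width = requested.Width != 0 ? requested.Width : current.Width;
        f.Height = requested.Height != 0 ? requested.Height : current.Height;
        f.BitCount = requested.BitCount != 0 ? requested.BitCount : current.BitCount;
        // Height may be negative for top-down bitmaps, hence Math.Abs.
        f.ImageSize = f.Width * Math.Abs(f.Height) * f.BitCount / 8;
        return f;
    }

    public static void Main()
    {
        var current = new SimpleVideoFormat { Width = 1024, Height = 756, BitCount = 32 };
        // The caller changed only the bit depth and left everything else empty.
        var requested = new SimpleVideoFormat { BitCount = 24 };
        var fixedUp = Normalize(requested, current);
        Console.WriteLine(fixedUp.Width + "x" + fixedUp.Height + " " + fixedUp.ImageSize); // 1024x756 2322432
    }
}
```

In the real filter the same idea would be applied to the AMMediaType copy in SetFormat before the CheckMediaType call.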

Pitch correction and mediatype negotiation

Pitch correction is required to improve performance. If we do not support it, our filter will be connected to the Video Renderer via the Colorspace Converter, and inside the converter the data will simply be copied from one sample to another according to the pitch provided by the renderer. That happens because the video renderer uses Direct3D or DirectDraw for rendering samples and has its own allocator, which provides media samples as host-allocated Direct3D surfaces or textures that may require different resolutions (you can find all the resolutions in the DirectX Caps Viewer tool). Another example is that most encoders use SSE or MMX to improve performance, which requires the width and height to be aligned in memory to 16 or 32. To support this in our filter we should handle the CheckMediaType method and check the media type of the media sample returned from the allocator. Usually the media type is set on the first requested sample; you can find more about that in MSDN: QueryAccept (Upstream). The CheckMediaType method:

public int CheckMediaType(AMMediaType pmt)

{

if (pmt == null) return E_POINTER;

if (pmt.formatPtr == IntPtr.Zero) return VFW_E_INVALIDMEDIATYPE;

if (pmt.majorType != MediaType.Video)

{

return VFW_E_INVALIDMEDIATYPE;

}

if (

pmt.subType != MediaSubType.RGB24

&& pmt.subType != MediaSubType.RGB32

&& pmt.subType != MediaSubType.ARGB32

)

{

return VFW_E_INVALIDMEDIATYPE;

}

BitmapInfoHeader _bmi = pmt;

if (_bmi == null)

{

return E_UNEXPECTED;

}

if (_bmi.Compression != BI_RGB)

{

return VFW_E_TYPE_NOT_ACCEPTED;

}

if (_bmi.BitCount != 24 && _bmi.BitCount != 32)

{

return VFW_E_TYPE_NOT_ACCEPTED;

}

VideoStreamConfigCaps _caps;

GetDefaultCaps(0, out _caps);

if (

_bmi.Width < _caps.MinOutputSize.Width

|| _bmi.Width > _caps.MaxOutputSize.Width

)

{

return VFW_E_INVALIDMEDIATYPE;

}

long _rate = 0;

{

VideoInfoHeader _pvi = pmt;

if (_pvi != null)

{

_rate = _pvi.AvgTimePerFrame;

}

}

{

VideoInfoHeader2 _pvi = pmt;

if (_pvi != null)

{

_rate = _pvi.AvgTimePerFrame;

}

}

if (_rate < _caps.MinFrameInterval || _rate > _caps.MaxFrameInterval)

{

return VFW_E_INVALIDMEDIATYPE;

}

return NOERROR;

}

The code that checks for media type changes in the VirtualCamStream.FillBuffer method:

AMMediaType pmt;

if (S_OK == _sample.GetMediaType(out pmt))

{

if (FAILED(SetMediaType(pmt)))

{

ASSERT(false);

_sample.SetMediaType(null);

}

pmt.Free();

}
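
Once the new media type is accepted, the frame has to be written row by row using the destination pitch rather than as one contiguous block. The sketch below shows that copy on plain byte arrays. It assumes, as I understand the renderer convention, that the new pitch is derived from the biWidth of the media type attached to the sample; the helper names are hypothetical and nothing here touches DirectShow.

```csharp
using System;

public static class PitchCopy
{
    // Bytes per row of a DIB: rows are padded to a multiple of 4 bytes.
    public static int DibStride(int width, int bitCount)
    {
        return ((width * bitCount + 31) / 32) * 4;
    }

    // Copies 'rows' rows of 'rowBytes' visible bytes between buffers whose
    // rows start at different strides (pitches).
    public static void CopyRows(byte[] src, int srcStride, byte[] dst, int dstStride, int rowBytes, int rows)
    {
        for (int y = 0; y < rows; y++)
        {
            Buffer.BlockCopy(src, y * srcStride, dst, y * dstStride, rowBytes);
        }
    }

    public static void Main()
    {
        int width = 3, height = 2, bitCount = 32;
        int srcStride = DibStride(width, bitCount);   // 12 bytes per row in our frame
        int dstStride = DibStride(4, bitCount);       // 16 bytes: surface is 4 pixels wide

        var src = new byte[srcStride * height];
        for (int i = 0; i < src.Length; i++) src[i] = (byte)(i + 1);

        var dst = new byte[dstStride * height];
        CopyRows(src, srcStride, dst, dstStride, srcStride, height);

        // The second row starts at offset 16 in the destination, 12 in the source.
        Console.WriteLine(dst[16] == src[12]);
    }
}
```

The bytes between the end of the visible row and the next row start are padding and are left untouched.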

Delivering samples

I have seen a lot of DirectShow filter samples, probably even here, that claim to be a live source but only implement a simple push source filter that looks like one of the examples from my previous article. This is totally wrong. As an example, connect such a filter to the AVI Mux, connect the mux output to the File Writer, press start and wait for 30-60 seconds. After checking the resulting file, compare its duration with the time you waited: they will not be the same (the duration of the file will be more than 30 seconds if you waited 30 seconds). This happens because your source isn't live; it just sets the timestamps without waiting. As only one input of the AVI muxer is connected, the muxer does not perform synchronization and simply writes the incoming samples. This does not happen when connected to the video renderer, as the renderer waits for the sample time and presents each sample according to its timestamp. I hope you see the issue.

If you think "it's just necessary to add a Sleep", that is also not a correct solution. For beginners, or for those who like to use Sleep in multithreaded applications: avoid Sleep (best of all if it is not present at all). A thread should wait, not sleep, and for that there are good functions such as WaitForSingleObject and WaitForMultipleObjects. A review of multithreading is not part of this article, but let it stand here as advice.

To handle our situation we should use the clock. The clock is provided to our filter by IGraphBuilder, which calls the SetSyncSource method of the IBaseFilter interface. Note: the filter graph may not use a sync source, and in that case we should create our own clock (it is the CSystemClock class in Microsoft's native BaseClasses; I have not made that class in the .NET version so far). In this example I do not handle the situation without a clock, so that is up to you. OK, let's see how to implement it.

First we should override the pin's Active and Inactive methods to initialize and shut down the clock variables:

public override int Active()

{

m_rtStart = 0;

m_bStartNotified = false;

m_bStopNotified = false;

{

lock (m_Filter.FilterLock)

{

m_pClock = m_Filter.Clock;

if (m_pClock.IsValid)

{

m_pClock._AddRef();

m_hSemaphore = new Semaphore(0,0x7FFFFFFF);

}

}

}

return base.Active();

}

public override int Inactive()

{

HRESULT hr = (HRESULT)base.Inactive();

if (m_pClock != null)

{

if (m_dwAdviseToken != 0)

{

m_pClock.Unadvise(m_dwAdviseToken);

m_dwAdviseToken = 0;

}

m_pClock._Release();

m_pClock = null;

if (m_hSemaphore != null)

{

m_hSemaphore.Close();

m_hSemaphore = null;

}

}

return hr;

}

Next we schedule the samples. As we have a fixed frame rate, we can use the AdvisePeriodic method.

HRESULT hr = NOERROR;

long rtLatency;

if (FAILED(GetLatency(out rtLatency)))

{

rtLatency = UNITS / 30;

}

if (m_dwAdviseToken == 0)

{

m_pClock.GetTime(out m_rtClockStart);

hr = (HRESULT)m_pClock.AdvisePeriodic(m_rtClockStart + rtLatency, rtLatency, m_hSemaphore.Handle, out m_dwAdviseToken);

hr.Assert();

}

Then we wait for the next sample time to occur and set the sample time:

m_hSemaphore.WaitOne();

hr = (HRESULT)(m_Filter as VirtualCamFilter).FillBuffer(ref _sample);

if (FAILED(hr) || S_FALSE == hr) return hr;

m_pClock.GetTime(out m_rtClockStop);

_sample.GetTime(out _start, out _stop);

if (rtLatency > 0 && rtLatency * 3 < m_rtClockStop - m_rtClockStart)

{

m_rtClockStop = m_rtClockStart + rtLatency;

}

_stop = _start + (m_rtClockStop - m_rtClockStart);

m_rtStart = _stop;

lock (m_csPinLock)

{

_start -= m_rtStreamOffset;

_stop -= m_rtStreamOffset;

}

_sample.SetTime(_start, _stop);

m_rtClockStart = m_rtClockStop;
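
The pacing idea can be tried outside DirectShow. In the sketch below a System.Threading.Timer plays the role of the reference clock's periodic advise: it releases a semaphore once per frame period, and the producing loop waits on that semaphore instead of sleeping. The timer is only a stand-in chosen for illustration, and all names are hypothetical; the filter itself uses IReferenceClock.AdvisePeriodic as shown above.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

public static class PacedSource
{
    // Produces 'frames' timestamps (in milliseconds), one per timer tick.
    public static long[] Capture(int frames, int periodMs)
    {
        var stamps = new long[frames];
        using (var semaphore = new Semaphore(0, int.MaxValue))
        {
            TimerCallback tick = delegate
            {
                try { semaphore.Release(); }
                catch (ObjectDisposedException) { /* tick arrived during shutdown */ }
            };
            // The timer stands in for the periodic advise of the graph clock.
            using (var timer = new Timer(tick, null, periodMs, periodMs))
            {
                var clock = Stopwatch.StartNew();
                for (int i = 0; i < frames; i++)
                {
                    semaphore.WaitOne();                      // wait for the next frame time
                    stamps[i] = clock.ElapsedMilliseconds;    // "capture" and stamp the frame
                }
            }
        }
        return stamps;
    }

    public static void Main()
    {
        long[] stamps = Capture(5, 50);
        for (int i = 0; i < stamps.Length; i++)
        {
            Console.WriteLine("frame " + i + " at " + stamps[i] + " ms");
        }
    }
}
```

Because the loop blocks on the semaphore, the time spent producing frames tracks real time, which is what the AVI Mux test described earlier checks for.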

Filter Overview

The resulting filter works as a virtual video capture source. It exposes RGB32 output media types with the VideoInfoHeader format and the following resolutions: 1024x756 (default), 320x240, 480x360, 640x480, 800x600, 960x720, 1120x840, 1280x960, 1440x1080. The resolution is checked only against the low and high limits, so you can specify any other value within that range. The FPS ranges from 1 to 30; the default is 20. The filter can be used in applications that do not check for WDM versus non-WDM devices.

Example of filter usage in Skype:

Example of filter usage in Adobe Live Flash Encoder:

Notes

If you need an example of any other filter, such as network streaming, video/audio renderers, muxers, demuxers, encoders or decoders, post in the forum and maybe I will publish it next time, as I have made hundreds of filters.

History

09-08-2012 - Initial version.

13-10-2012 - Fixed allocator and EnumMediaTypes issues in Adobe Flash Player.