Introduction

In this article, we’ll explain the principles of recurrent neural networks (RNNs) and Long Short-Term Memory (LSTM) networks, which are a variation of RNNs. We’ll also share our experience with RNN-LSTM-based target monitoring in video. This article will be helpful for developers who are working on image classification projects as well as for those who are only considering using neural networks for video processing.

Background

In one of our previous posts, we discussed the problem of classifying separate images. When we tried to separate a commercial from a football game in a video recording, we faced the need to make a neural network remember the state of the previous frames while analyzing the current frame.

Fortunately, this problem can be solved with a Recurrent Neural Network, or RNN. These neural networks contain recurrence relations: each further output depends on a combination of previous outputs. RNNs are applied to a wide range of tasks including speech recognition, language modeling, translation, and music generation.


Unlike traditional neural networks, where all inputs and outputs are independent of each other, recurrent neural networks (RNNs) use the output from the previous step as input to the current step. An RNN can also work with sequences of vectors in both the input and the output. This feature allows an RNN to remember a sequence of data. You can find more details on the effectiveness of RNNs and what can be achieved with them in the article The Unreasonable Effectiveness of Recurrent Neural Networks by Andrej Karpathy.
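To make the recurrence concrete, here is a minimal NumPy sketch of a vanilla RNN. It assumes the common textbook update, in which the new hidden state is a tanh of the weighted current input plus the weighted previous state. The function name, weight names, and sizes are illustrative and are not taken from the repository used later in this article.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return every hidden state."""
    hidden = np.zeros(W_hh.shape[0])  # initial state: nothing seen yet
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        hidden = np.tanh(W_xh @ x + W_hh @ hidden + b_h)
        states.append(hidden)
    return np.stack(states)

# Illustrative sizes and random weights (assumptions, not trained values).
rng = np.random.default_rng(0)
input_size, hidden_size, seq_length = 4, 3, 5
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

sequence = rng.normal(size=(seq_length, input_size))
states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(states.shape)  # one hidden state per input vector
```

Because each state is computed from the previous one, changing the first input vector changes every later state, which is the memory effect described above.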

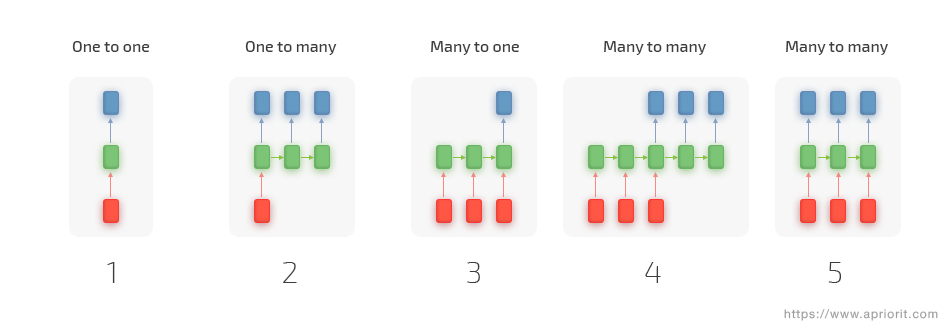

Let’s consider how RNNs are applied. In the basic case of a traditional neural network, a fixed-size input is given to the network, and an output is produced for that input alone. For instance, we feed a network with a single image and receive a classification result only for this image. An RNN allows us to operate with sequences of data vectors, where the output depends on the previous results of classification. Here’s an example of how recurrent networks operate:

Each rectangle is a vector, and arrows represent functions. Input vectors are red, while output vectors are blue, and green vectors hold the RNN’s state.

- The first case is a traditional neural network, such as a convolutional neural network, that maps a fixed-size input to a fixed-size output. It can be used for image classification. For instance, we can provide photos of cats and dogs as the input and get the same number of images labeled as cats and dogs as the output.

- The second case is an example of an RNN that maps one input to multiple outputs. For example, we can provide an image with text as the input and get a sequence of words as the output.

- The third example is an RNN that maps multiple inputs to one output. It can be used for sentiment analysis. Here, we can provide a sentence as the input and receive a label that reflects the emotion triggered by this sentence as the output.

- In the fourth example, an RNN maps many inputs to many outputs. This type of RNN can be used for translating a text from one language to another. For instance, we can provide a sentence in English as the input and receive a sentence in French as the output.

- The last case represents an RNN that maps a synced sequence of inputs and outputs. It can be applied for recognizing the movements of objects in a video. We provide an image sequence as the input and get a sequence of processed images as the output.

However, an RNN becomes less effective as the gap grows between the data being analyzed and the earlier outputs it depends on. This means that although an RNN is effective for processing sequence data for predictions, it has only short-term memory. That’s why researchers developed the Long Short-Term Memory (LSTM) architecture, a variation of RNN that solves the problem of missing long-term dependencies. It does so through a more complex update equation and more appealing backpropagation dynamics.

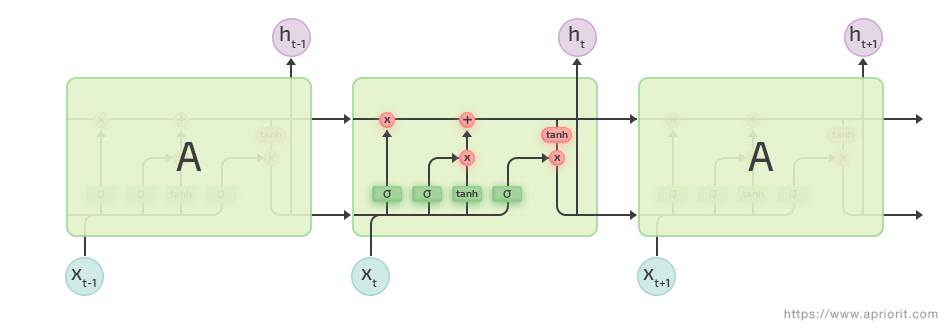

In simple words, in LSTM, the challenge of missing long-term dependencies is solved by making each neuron in the hidden layer perform four operations instead of one. Let’s look at how LSTM works:

LSTM has internal mechanisms called gates that regulate the flow of information. During training, these gates learn which data in a sequence is important to keep and which they can forget. This allows the network to pass relevant information down the long chain of sequences to make predictions. You can find more details on the principle of LSTM operations here.
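The gate mechanism can be sketched in a few lines of NumPy. This is a simplified illustration of the standard LSTM cell (forget, input, and output gates plus a candidate update, which gives the four operations mentioned above). It is not the Keras implementation, and the weight layout is an assumption chosen for brevity.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4 * hidden, input + hidden)."""
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])  # forget gate: what to drop from memory
    i = sigmoid(z[1 * hidden:2 * hidden])  # input gate: what new information to store
    o = sigmoid(z[2 * hidden:3 * hidden])  # output gate: what to expose as output
    g = np.tanh(z[3 * hidden:4 * hidden])  # candidate values for the cell state
    c = f * c_prev + i * g                 # updated cell state (long-term memory)
    h = o * np.tanh(c)                     # updated hidden state (step output)
    return h, c

# Illustrative sizes and random weights (assumptions, not trained values).
rng = np.random.default_rng(0)
input_size, hidden_size = 4, 3
W = rng.normal(scale=0.5, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)

h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x in rng.normal(size=(6, input_size)):
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)
```

When the forget gate is close to one and the input gate is close to zero, the cell state passes through a step almost unchanged, which is how information can survive across a long sequence.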

Let’s now see how you can train an LSTM on your sequences of data. We’ll take this repository where the performance of various recurrent networks, including LSTM, is investigated. We’ll use Python 3, TensorFlow, and Keras — as well as ffmpeg — in order to form a dataset for using the repository and implementing our classifier. We recommend conducting all investigations in Google Colab, as they require lots of hardware resources.

All scripts and examples provided in this article can be found here.

We’ll continue investigating the video sample we looked at in our previous article, where we need to classify the football match recording into commercials and football match episodes.

For cutting a set of short video sequences, you can use this ffmpeg command:

ffmpeg -i Football.mp4 -ss 00:00:00 -t 00:00:03 Football_train_1.mp4

This command extracts the first three seconds of Football.mp4 and creates a new video file called Football_train_1.mp4. It’s better to cut a video into equal time intervals in order to get the same number of frames in each sequence. When your dataset is ready, place the video files in the following folder structure:

    /data
    |
    --- /train
    |    |
    |    --- /Football
    |    |       Football_train_1.mp4
    |    |       Football_train_2.mp4
    |    |       ...
    |    |
    |    --- /Commercial
    |            Commercial_train_1.mp4
    |            Commercial_train_2.mp4
    |            ...
    --- /test
         |
         --- /Football
         |       Football_test_1.mp4
         |       Football_test_2.mp4
         |       ...
         |
         --- /Commercial
                 Commercial_test_1.mp4
                 Commercial_test_2.mp4
                 ...
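Cutting many equal-length clips by hand is tedious, so the ffmpeg commands can be generated. The sketch below only builds the argument lists and does not run ffmpeg. The helper name build_cut_commands and its parameters are hypothetical, and it assumes that you already know the duration of the source video in whole seconds and that the target folders exist.

```python
from pathlib import Path

def build_cut_commands(source, label, split, total_seconds,
                       clip_seconds=3, data_root="data"):
    """Return one ffmpeg argument list per equal-length clip."""
    out_dir = Path(data_root) / split / label
    commands = []
    # Start offsets of all clips that fit completely into the video.
    starts = range(0, total_seconds - clip_seconds + 1, clip_seconds)
    for index, start in enumerate(starts, start=1):
        target = out_dir / f"{label}_{split}_{index}.mp4"
        commands.append([
            "ffmpeg", "-i", source,
            "-ss", str(start),        # start offset in seconds
            "-t", str(clip_seconds),  # clip duration in seconds
            target.as_posix(),
        ])
    return commands

commands = build_cut_commands("Football.mp4", "Football", "train", total_seconds=10)
for command in commands:
    print(" ".join(command))
```

Each list can then be passed to subprocess.run to perform the actual cut. Any remainder shorter than one clip at the end of the video is skipped so that every clip has the same length.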

The data folder needs two subfolders that reflect the type of data: training and testing. After you’ve prepared the dataset, run the extract_files.py script, which extracts a sequence of video frames from each media file and saves them as images with corresponding names. This script takes the extension of the media file as a parameter:

python extract_files.py mp4

After running this script, the data directory will contain a data_file.csv file that shows the number of frames in each video.
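Since training uses sequences of a fixed length, it can be useful to check which videos contain enough frames. The sketch below assumes that each row of data_file.csv holds the data type, class name, file name, and frame count, in that order and without a header row. That layout is an assumption, so check it against the file you actually get. The sample rows are made up for illustration.

```python
import csv
import io

# Hypothetical file contents; the real column layout may differ.
sample_csv = io.StringIO(
    "train,Football,Football_train_1,75\n"
    "train,Commercial,Commercial_train_1,75\n"
    "test,Football,Football_test_1,60\n"
)

def usable_videos(csv_file, seq_length):
    """Return the rows whose frame count can supply a full sequence."""
    rows = []
    for split, label, name, frame_count in csv.reader(csv_file):
        if int(frame_count) >= seq_length:
            rows.append((split, label, name, int(frame_count)))
    return rows

selected = usable_videos(sample_csv, seq_length=75)
for row in selected:
    print(row)
```

With a real file, replace the StringIO object with open("data/data_file.csv", newline="").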

In order to start training the LSTM network, run the train.py script with arguments for the length of the frame sequence, class limit, and frame height and width. For instance, say we want to train our network on a sequence of 75 frames for two classes from Football.mp4 with a resolution of 1280x720.

python train.py 75 2 720 1280

After training, the data/checkpoints directory will contain the weight files. The last iteration of training will provide the .hdf5 weight file with the highest level of accuracy. Let’s move this file to the folder with the clasify.py script and name it lstm-features.hdf5. Before we start using the pretrained network, let’s take a look at the scripts we’ll use and see how they work at the training stage:

- data.py – a set of auxiliary functions for working with training data; downloads images, maps classes, provides frame-by-frame sequences, etc.

- extractor.py – a script that provides an interface for creating an InceptionV3 model from the Keras library and implements it for feature extraction. We’ll collect data from the avg_pool neuron layer of the InceptionV3 model that was pre-trained on the ImageNet dataset.

- extract_features.py – a script for using the Extractor object, extracting features from each image in the sequence and combining them in the sequence files in the data/sequences folder

- models.py – a script that implements the model object from the Keras library for working with the LSTM network

- train.py – a script for training the LSTM network on the sequence files from extracted features

- clasify.py – a script that classifies a separate video file using a pretrained LSTM model

During the training stage, the Extractor object creates an InceptionV3 model that was preliminarily trained on the ImageNet dataset and applies it to each image from the video sequence. As a result of the image recognition process, it extracts features from each image, which are then collected into a new sequence file with the extension .npy. In the end, the data/sequences folder will contain a new file of feature sequences for each video file.
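The shape of such a sequence file can be illustrated without loading InceptionV3. In the sketch below, fake_extract is a stand-in for the real extractor and simply returns a vector of 2048 values, which is the size of InceptionV3’s avg_pool output. The file name and the tiny dummy frames are hypothetical.

```python
import tempfile
from pathlib import Path

import numpy as np

FEATURE_SIZE = 2048  # length of InceptionV3's avg_pool output vector

def fake_extract(frame):
    """Stand-in for the real extractor: returns a fixed-size feature vector."""
    return np.full(FEATURE_SIZE, frame.mean(), dtype=np.float32)

def save_sequence(frames, target):
    """Extract features for every frame and store them as one .npy array."""
    sequence = np.stack([fake_extract(frame) for frame in frames])
    np.save(target, sequence)
    return sequence.shape

seq_length = 75
# Tiny dummy frames; real frames would come from the extracted images.
frames = [np.full((8, 8, 3), i, dtype=np.uint8) for i in range(seq_length)]

with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "Football_train_1-features.npy"  # hypothetical name
    shape = save_sequence(frames, target)
    restored = np.load(target)
print(shape)
```

Each video therefore becomes one two-dimensional array with a row per frame, and the LSTM is trained on these arrays instead of the raw images.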

The next step is training the new ResearchModel() object, which is an LSTM object from Keras. The intermediate results of training are collected into weight files and saved in the data/checkpoints folder. Training continues until the classification accuracy stops improving for five consecutive iterations.
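One way to read the stopping rule above is as early stopping with a patience of five: training ends once five iterations in a row fail to beat the best accuracy seen so far. The sketch below implements that reading in plain Python. The function name and the accuracy values are made up for illustration and are not results from our training runs.

```python
def epochs_until_stop(accuracies, patience=5):
    """Return (epoch at which training stops, epoch with the best accuracy)."""
    best_accuracy = float("-inf")
    best_epoch = 0
    for epoch, accuracy in enumerate(accuracies, start=1):
        if accuracy > best_accuracy:
            # A new best result resets the waiting period.
            best_accuracy, best_epoch = accuracy, epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs in a row: stop here.
            return epoch, best_epoch
    return len(accuracies), best_epoch

# Illustrative accuracy values only.
history = [0.61, 0.70, 0.78, 0.77, 0.78, 0.76, 0.77, 0.75, 0.74]
stopped_at, best_epoch = epochs_until_stop(history)
print(stopped_at, best_epoch)
```

In Keras, the same behavior is usually obtained with the EarlyStopping callback and its patience parameter rather than with hand-written logic.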

After our network is trained, we can start classifying a frame sequence. To do this, we’ll use the clasify.py script with the following parameters:

- length of the frame sequence

- number of classes for recognition

- weight file of the trained LSTM model

- video file for classification

python clasify.py 75 2 lstm-features.hdf5 video_file.mp4

The results of our classification will be saved in the result.avi file.

Let’s see how the script for video file classification works. It acts similarly to the train.py script.

The video file is analyzed with the OpenCV library. After analysis, we receive the frame sizes and initialize OpenCV for reading and recording/writing the video frames.

    import os
    import cv2

    # Open the input video and read its frame size
    capture = cv2.VideoCapture(os.path.join(video_file))
    width = capture.get(cv2.CAP_PROP_FRAME_WIDTH)  # float
    height = capture.get(cv2.CAP_PROP_FRAME_HEIGHT)  # float
    fourcc = cv2.VideoWriter_fourcc(*'XVID')
    video_writer = cv2.VideoWriter("result.avi", fourcc, 15, (int(width), int(height)))

After that, we read a sequence of the specified number of frames from the file, frame by frame, and apply the recognition by InceptionV3. As the output, we get a sequence of basic features in the sequence object.

    # Get the models. The frame size is read as floats, so convert it to integers.
    extract_model = Extractor(image_shape=(int(height), int(width), 3))
    saved_LSTM_model = load_model(saved_model)
    frames = []
    frame_count = 0
    while True:
        ret, frame = capture.read()
        # Bail out when the video file ends
        if not ret:
            break
        # Save each frame of the video to a list
        frame_count += 1
        frames.append(frame)
        if frame_count < seq_length:
            continue  # capture frames until you get the required number for a sequence
        else:
            frame_count = 0
        # For each frame, extract features and prepare them for classification
        sequence = []
        for image in frames:
            features = extract_model.extract_image(image)
            sequence.append(features)
        ...
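The buffering logic in this loop can be isolated from OpenCV. The excerpt above elides what happens to the frames list once a sequence has been processed, so the generator below assumes that the buffer is emptied after each full sequence and that leftover frames at the end of the video are dropped. The function name is hypothetical, and integers stand in for decoded frames.

```python
def fixed_length_sequences(frames, seq_length):
    """Yield consecutive, non-overlapping lists of exactly seq_length frames."""
    buffer = []
    for frame in frames:
        buffer.append(frame)
        if len(buffer) == seq_length:
            yield buffer
            buffer = []  # start collecting the next sequence from scratch
    # Frames still in the buffer here are too few for a sequence and are dropped.

# Integers stand in for decoded video frames.
chunks = list(fixed_length_sequences(range(10), seq_length=4))
print(chunks)  # [[0, 1, 2, 3], [4, 5, 6, 7]]
```

Whether the last incomplete group should be dropped, padded, or classified as is depends on the application. Dropping it keeps every sequence at the length the network was trained on.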

After getting the sequence of basic features, we can apply our pretrained LSTM network for sequence classification.


# Classify sequence

prediction = saved_LSTM_model.predict(np.expand_dims(sequence, axis=0))

print(prediction)

values = data.print_class_from_prediction(np.squeeze(prediction, axis=0))

...
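The helper data.print_class_from_prediction isn’t shown in this article. As a rough idea of what such a step might do, the sketch below turns a prediction vector into readable labels sorted by score. The function describe_prediction, the class order, and the prediction values are all assumptions made for illustration.

```python
import numpy as np

def describe_prediction(prediction, class_names):
    """Return 'label: score' strings, most probable class first."""
    order = np.argsort(prediction)[::-1]
    return [f"{class_names[i]}: {prediction[i]:.2f}" for i in order]

# Made-up model output for a single sequence: shape (1, number_of_classes).
prediction = np.array([[0.08, 0.92]])
values = describe_prediction(np.squeeze(prediction, axis=0),
                             ["Commercial", "Football"])
print(values)  # ['Football: 0.92', 'Commercial: 0.08']
```

The expand_dims call in the original code adds the batch dimension that the model expects, and squeeze removes it again before the scores are mapped to class names.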

Now we specify the classification results in the upper left corner of each image and compile a new video file:

    for image in frames:
        for i in range(len(values)):
            cv2.putText(image, values[i], (40, 40 * i + 40),
                        cv2.FONT_HERSHEY_SIMPLEX, 1.0, (255, 255, 255), lineType=cv2.LINE_AA)
        video_writer.write(image)
    ...

Repeat this process until all frames in the video file are classified.

Here you can watch what we got in the end.

There are a lot of challenges to overcome with regard to using neural networks for video recognition and classification. However, Apriorit experts aren’t afraid of these challenges and are always in search of better, easier, and more efficient solutions.

In this article, we offered an approach that allows you to make a neural network analyze the current frame while remembering the state of previous frames. We discussed the RNN and LSTM architectures needed to implement this approach. We also explained how to use LSTM objects and InceptionV3 from Keras. Finally, we showed you how to train the LSTM network with custom classes of sequences and apply it to video classification.

