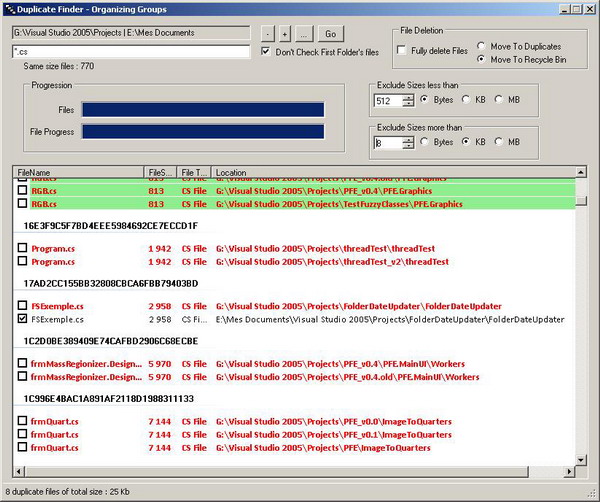

Screenshots: search results; file deleted.

Introduction

Once a year, I take on the tedious job of cleaning up the files I have created or downloaded on my drives. The last time I did it, the task was so painstaking that I thought of doing it semi-automatically. I needed a free utility that could find duplicate files, but none of the ones I found met my needs, so I decided to write one.

Background

The CRC calculation method is available here. I use the MD5 hashing provided by the standard libraries. I added an event to the MD5 computation so that it reports hashing progress: a separate thread reads the stream position while the MD5 method reads the same stream.
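The polling approach described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the article's actual class: `Md5WithProgress` and its `onProgress` callback are hypothetical names, the stream must be seekable (so that `Length` is available), and reading `Position` from a second thread is an unsynchronized snapshot that is only good enough for a progress display.

```csharp
using System;
using System.IO;
using System.Security.Cryptography;
using System.Threading;

// Hypothetical helper: hashes 'stream' with MD5 on the calling thread while a
// background thread samples stream.Position and reports a percentage.
// The Position read is an unsynchronized snapshot, used for display only.
static byte[] Md5WithProgress(Stream stream, Action<int> onProgress, int pollMs = 100)
{
    using var md5 = MD5.Create();
    long length = stream.Length; // requires a seekable stream (e.g. FileStream)
    using var done = new ManualResetEventSlim(false);

    var poller = new Thread(() =>
    {
        // Wait returns true once 'done' is set; until then, report every pollMs.
        while (!done.Wait(pollMs))
        {
            if (length > 0)
                onProgress((int)(stream.Position * 100 / length));
        }
    }) { IsBackground = true };

    poller.Start();
    byte[] hash = md5.ComputeHash(stream); // reads the stream to the end
    done.Set();                            // stop the poller...
    poller.Join();                         // ...and wait for it to exit
    onProgress(100);                       // final report, on the calling thread
    return hash;
}
```

In a WinForms application the callback runs on the polling thread, so it would have to marshal to the UI thread (for example with `Control.BeginInvoke`) before touching a progress bar.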

Using the code

The utility uses two main classes, DirectoryCrawler and Comparers. Their use is obvious :) Note that instead of iterating through the file list list.Count × list.Count times (comparing every file with every other file), DuplicateFinder uses a Hashtable of <size, count> pairs. Once it is populated, every file whose size has a count of 1 is removed, since a file with a unique size cannot have a duplicate. This is much, much faster:

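```csharp
// Requires: using System.Collections; using System.Collections.Generic; using System.IO;
int len = filesToCompare.Length;

// Sizes that occur exactly once. A HashSet gives O(1) lookups in the
// filtering loop below, where List<long>.Contains would be O(n) per file.
HashSet<long> alIdx = new HashSet<long>();

// Pass 1: count how many files have each size (key: size, value: count).
System.Collections.Hashtable HLengths = new System.Collections.Hashtable();
foreach (FileInfo fileInfo in filesToCompare)
{
    if (!HLengths.Contains(fileInfo.Length))
        HLengths.Add(fileInfo.Length, 1);
    else
        HLengths[fileInfo.Length] = (int)HLengths[fileInfo.Length] + 1;
}

// Collect the sizes that occur only once: those files cannot have duplicates.
foreach (DictionaryEntry hash in HLengths)
{
    if ((int)hash.Value == 1)
    {
        alIdx.Add((long)hash.Key);
        // setText/stsMain: the application's UI helper, presumably updating the status bar.
        setText(stsMain, string.Format("Will remove File with size {0}", hash.Key));
    }
}

// Pass 2: keep only the files whose size is shared with at least one other file.
// Each unique size belongs to exactly one file, so the result has len - alIdx.Count entries.
FileInfo[] fiZ = new FileInfo[len - alIdx.Count];
int j = 0;
for (int i = 0; i < len; i++)
{
    if (!alIdx.Contains(filesToCompare[i].Length))
        fiZ[j++] = filesToCompare[i];
}

return fiZ;
```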
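The same size-counting idea can be written with a generic `Dictionary<long, List<string>>`, followed by the second pass that the History section mentions (grouping same-sized files by hash). This is a sketch under assumptions, not the article's DuplicateFinder: `FindDuplicates` and `Md5Hex` are hypothetical helpers that work on file paths rather than on a `FileInfo[]`, and every candidate file is hashed in full with MD5.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Security.Cryptography;

// Hypothetical helper. Stage 1: bucket paths by file size; a size seen only
// once cannot be a duplicate. Stage 2: re-bucket each remaining group by MD5.
// Each file is visited once per stage instead of being compared with every other file.
static List<List<string>> FindDuplicates(IEnumerable<string> paths)
{
    var bySize = new Dictionary<long, List<string>>();
    foreach (string path in paths)
    {
        long size = new FileInfo(path).Length; // metadata only, no content read
        if (!bySize.TryGetValue(size, out var bucket))
            bySize[size] = bucket = new List<string>();
        bucket.Add(path);
    }

    var groups = new List<List<string>>();
    foreach (var sameSize in bySize.Values.Where(b => b.Count > 1))
    {
        var byHash = new Dictionary<string, List<string>>();
        foreach (string path in sameSize)
        {
            string digest = Md5Hex(path); // only same-sized files are ever hashed
            if (!byHash.TryGetValue(digest, out var bucket))
                byHash[digest] = bucket = new List<string>();
            bucket.Add(path);
        }
        groups.AddRange(byHash.Values.Where(b => b.Count > 1));
    }
    return groups;
}

// Hypothetical helper: MD5 of a file's contents as a hex string (dictionary key).
static string Md5Hex(string path)
{
    using var md5 = MD5.Create();
    using var stream = File.OpenRead(path);
    return BitConverter.ToString(md5.ComputeHash(stream));
}
```

Only files that share a size with another file are hashed, which is where the speed-up comes from: reading a size is a metadata lookup, whereas hashing reads the whole file.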

Points of interest

- (Done) Optimize file moving; the UI could become unresponsive while moving big files :(.

- (Useless, my MD5 is better ^_^) Add options to choose between CRC32 and MD5 hashing.

- Maybe use an XML configuration file. For now, moving duplicate files to D:\DuplicateFiles (which is hard-coded, viva Microsoft!) and skipping that folder during scanning is sufficient for me.

- Don't forget that your comments can become points of interest.

- (Done) Code an event-enabled MD5 hashing class that reports hashing progress; imagine hashing a 10 GB file!
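Two of the items above (skipping the hard-coded duplicates folder while scanning, and keeping the UI responsive while moving big files) can be sketched as below. `CrawlFiles` and `MoveToFolderAsync` are hypothetical helpers, not the article's DirectoryCrawler; the folder to skip is passed in as a parameter, so it could just as well come from a configuration file instead of being hard-coded.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper: yields every file under 'root', skipping 'skipDir'
// (e.g. the folder duplicates are moved into) so moved files are not rescanned.
static IEnumerable<string> CrawlFiles(string root, string skipDir)
{
    string skip = Path.GetFullPath(skipDir).TrimEnd(Path.DirectorySeparatorChar);
    var pending = new Stack<string>(); // iterative walk, no deep recursion
    pending.Push(Path.GetFullPath(root));
    while (pending.Count > 0)
    {
        string dir = pending.Pop();
        // Windows paths are case-insensitive, hence OrdinalIgnoreCase.
        if (string.Equals(dir.TrimEnd(Path.DirectorySeparatorChar), skip,
                          StringComparison.OrdinalIgnoreCase))
            continue;

        string[] files, subDirs;
        try
        {
            files = Directory.GetFiles(dir);
            subDirs = Directory.GetDirectories(dir);
        }
        catch (UnauthorizedAccessException)
        {
            continue; // folders we may not list, e.g. "System Volume Information"
        }
        foreach (string file in files) yield return file;
        foreach (string sub in subDirs) pending.Push(sub);
    }
}

// Hypothetical helper: runs the move on a thread-pool thread so that a large
// cross-volume move (a copy plus a delete) does not block the UI thread.
// File.Move throws an IOException if the destination file already exists.
static Task MoveToFolderAsync(string source, string destDir) =>
    Task.Run(() =>
    {
        Directory.CreateDirectory(destDir); // no-op if it already exists
        File.Move(source, Path.Combine(destDir, Path.GetFileName(source)));
    });
```

From a WinForms event handler, `await MoveToFolderAsync(path, @"D:\DuplicateFiles");` keeps the message loop running while the move completes.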

History

- v0.2

  - Optimized duplicate retrieval (duplicate sizes, then duplicate hashes).

  - Added Move to Recycle Bin.

  - Added file size criteria.

  - Files-to-delete info is updated on every check/uncheck in the list view.

  - Added colors and fonts to the UI.

  - Debug-enabled sources (synchronous under #if DEBUG, threaded otherwise).

  - Used List<FileInfo> and List<string[]> instead of ArrayLists.

  - MD5 hashing is used instead of CRC32 (supercat9).

  - Added Skip Source Folder option.

  - Added Drop SubFolder.

  - Some optimizations...

- v0.1

  - First publication. Waiting for bug reports :)